7:00 – 8:00, 4:00 – 5:00 Research

- Finished the first pass at the poster in LaTeX. I dunno. I might try Illustrator and make something pretty(er).

- Built the latest paper and poster and sent them to Don for this afternoon’s meeting.

- Facebook is rolling out its new tool against fake news.

- FiveThirtyEight’s take on r/TheDonald

8:30 – 10:30, 12:30 – 3:30 BRC

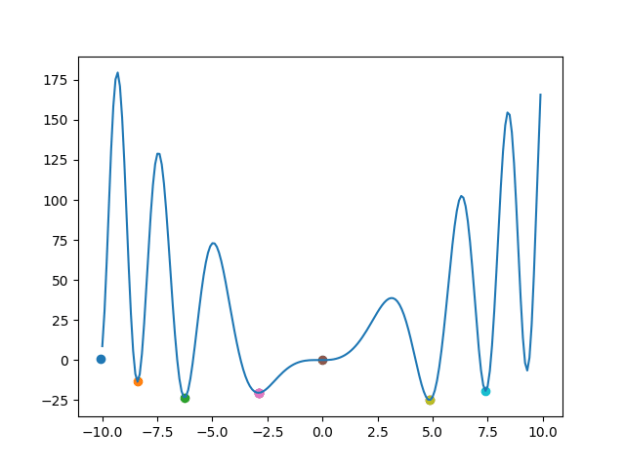

- I don’t think my plots are right. Going to add some points to verify…

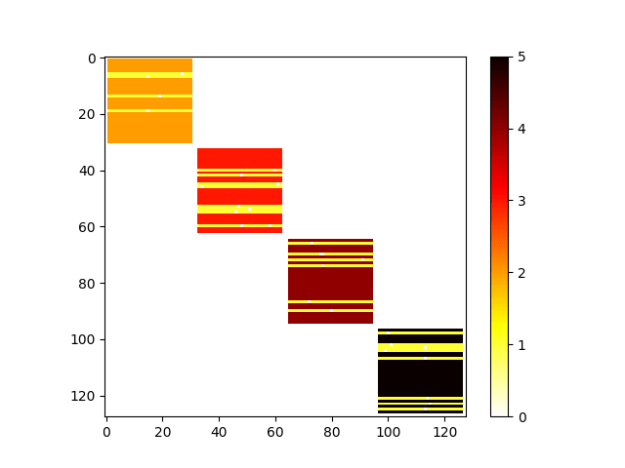

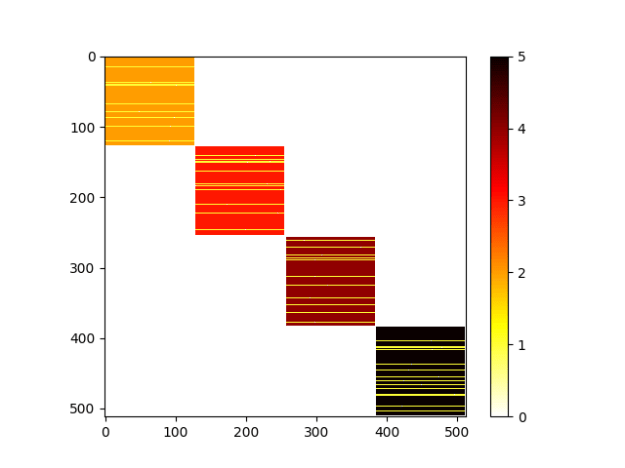

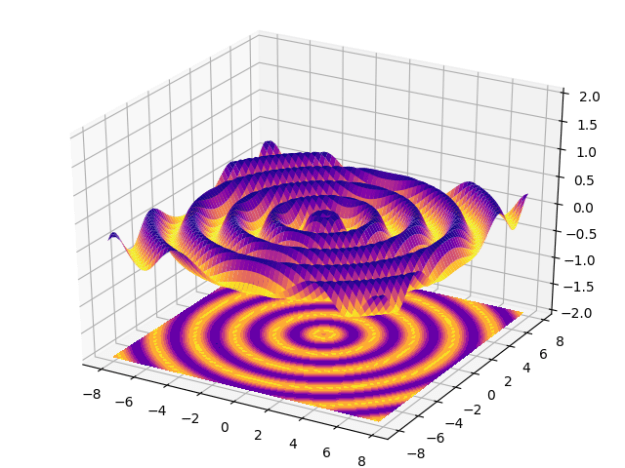

- First, build a matrix of all the values. Then we can visualize it as a surface and look for the best values once the calculation is done.
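A minimal sketch of the build-the-matrix-then-search step. The post doesn't say which two parameters span the grid or what the score is. For a DBSCAN clusterer, `eps` and `min_samples` would be natural axes, but that is an assumption, and `toy_score` below is a hypothetical stand-in for the real evaluation:

```python
from typing import Callable, List, Sequence, Tuple

def build_matrix(xs: Sequence[float], ys: Sequence[float],
                 score: Callable[[float, float], float]) -> List[List[float]]:
    """Evaluate score at every (x, y) pair. Row i is ys[i], column j is xs[j],
    the same layout a surface plot uses for its Z values."""
    return [[score(x, y) for x in xs] for y in ys]

def best_value(matrix: List[List[float]], xs: Sequence[float],
               ys: Sequence[float]) -> Tuple[float, float, float]:
    """Scan the finished matrix and return (x, y, score) for the highest score."""
    return max(
        ((xs[j], ys[i], v) for i, row in enumerate(matrix) for j, v in enumerate(row)),
        key=lambda cell: cell[2],
    )

# Hypothetical score surface with a single peak at x=0.5, y=5
def toy_score(x: float, y: float) -> float:
    return 10 - (x - 0.5) ** 2 - (y - 5) ** 2

xs = [0.25, 0.5, 0.75]
ys = [3, 5, 7]
m = build_matrix(xs, ys, toy_score)
print(best_value(m, xs, ys))  # (0.5, 5, 10.0)
```

Keeping the whole matrix around (rather than tracking only the running best) is what makes the surface plot possible afterwards. The rows-by-`y`, columns-by-`x` layout matches what matplotlib's `plot_surface` expects for Z after a `meshgrid` of the two axes.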

- Okay… So there is a very weird bug that Aaron stumbled across when running Python scripts from the command line. There are many, many, many thoughts on this, and it apparently comes from a legacy issue between py2 and py3. So, much flailing:

```
python -i -m OptimizedClustererPackage.DBSCAN_clusterer.py
python -m OptimizedClustererPackage\DBSCAN_clusterer.py
C:\Development\Sandboxes\TensorflowPlayground\OptimizedClustererPackage>C:\Users\philip.feldman\AppData\Local\Programs\Python\Python35\python.exe -m C:\Development\Sandboxes\TensorflowPlayground\OptimizedClustererPackage\DBSCAN_clusterer.py
```

…etc…etc…etc…
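For what it's worth, one likely culprit in the attempts above is that `-m` wants a dotted module name, not a file name or a path: `-m OptimizedClustererPackage.DBSCAN_clusterer.py` asks for a submodule called `py`, and `OptimizedClustererPackage\DBSCAN_clusterer.py` isn't a module name at all. The `-m` switch is implemented by the standard-library `runpy` module, so a minimal sketch with `runpy` can show the difference without touching the command line. The names `demo_pkg` and `clusterer` are hypothetical stand-ins, built in a temporary directory:

```python
import os
import runpy
import sys
import tempfile

# Throwaway package standing in for OptimizedClustererPackage (names are hypothetical):
#   <root>/demo_pkg/__init__.py
#   <root>/demo_pkg/clusterer.py
root = tempfile.mkdtemp()
pkg_dir = os.path.join(root, "demo_pkg")
os.makedirs(pkg_dir)
open(os.path.join(pkg_dir, "__init__.py"), "w").close()
script_path = os.path.join(pkg_dir, "clusterer.py")
with open(script_path, "w") as f:
    f.write("RESULT = 'ran as ' + __name__\n")

# Like `python <path>/clusterer.py`: the argument is a file path
by_path = runpy.run_path(script_path, run_name="__main__")

# Like `python -m demo_pkg.clusterer`: the argument is a dotted module name
# (no ".py", no slashes) that has to be importable from sys.path
sys.path.insert(0, root)
by_module = runpy.run_module("demo_pkg.clusterer", run_name="__main__")

# Like `python -m demo_pkg.clusterer.py`: ".py" is read as a submodule named "py",
# so the lookup fails with an ImportError
try:
    runpy.run_module("demo_pkg.clusterer.py")
    suffix_failed = False
except ImportError:
    suffix_failed = True

print(by_path["RESULT"], by_module["RESULT"], suffix_failed)
```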

- After I’d had enough of this, I realized that the IDE runs all of this just fine, so something works. So, following this link, I set the run config to “Show command line afterwards”:

The outputs are very helpful:

```
C:\Users\philip.feldman\AppData\Local\Programs\Python\Python35\python.exe C:\Users\philip.feldman\.IntelliJIdea2017.1\config\plugins\python\helpers\pydev\pydev_run_in_console.py 60741 60742 C:/Development/Sandboxes/TensorflowPlayground/OptimizedClustererPackage/cluster_optimizer.py
```

- Editing out the middle part (the pydev console helper and its two numeric arguments), we get:

```
C:\Users\philip.feldman\AppData\Local\Programs\Python\Python35\python.exe C:/Development/Sandboxes/TensorflowPlayground/OptimizedClustererPackage/cluster_optimizer.py
```

And that worked! Note the backslashes on the executable and the forward slashes on the argument path.
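On the slashes: Windows path handling generally accepts either separator, so the mix in the working command is probably harmless. The bigger difference from the earlier attempts is likely that this one passes a file path instead of using `-m`. A quick check with the standard library's `pathlib.PureWindowsPath` (which works on any OS) shows that both spellings of the argument parse to the same path:

```python
from pathlib import PureWindowsPath

# The argument exactly as the IDE printed it (forward slashes)
printed = PureWindowsPath(
    "C:/Development/Sandboxes/TensorflowPlayground/OptimizedClustererPackage/cluster_optimizer.py")
# The same file typed cmd.exe-style (backslashes)
typed = PureWindowsPath(
    r"C:\Development\Sandboxes\TensorflowPlayground\OptimizedClustererPackage\cluster_optimizer.py")

print(printed == typed)     # True
print(str(printed))         # separators normalized to backslashes
print(printed.parent.name)  # OptimizedClustererPackage
```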

- Update #1. Aaron’s machine was not able to run a previous version of the code, so we poked at the issues, and I discovered that my imports included some code that his copy didn’t have. It’s the “Solution #4: Use absolute imports and some boilerplate code” section from this StackOverflow post. Specifically, before importing the local files, the following four lines of code need to be added:

```python
import sys  # if you haven't already done so
from pathlib import Path  # if you haven't already done so

# parents[1] is the directory that contains the package folder
root = str(Path(__file__).resolve().parents[1])
sys.path.append(root)
```

- After that, you can add your absolute imports, as I do in the next two lines:

```python
from OptimizedClustererPackage.protobuf_reader import ProtobufReader
from OptimizedClustererPackage.DBSCAN_clusterer import DBSCANClusterer
```

- And that seems to really, really, really work (so far).
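Why `parents[1]`? When a script is run by file path, Python puts the script's own directory (here, the package folder) at the front of `sys.path`, not the folder that contains the package, so `from OptimizedClustererPackage...` can't be resolved. `Path(__file__).resolve().parents[1]` is exactly that containing folder. A minimal sketch that reproduces both cases with a throwaway package; `demo_pkg`, `reader.py`, `with_fix.py`, and `without_fix.py` are hypothetical stand-ins for `OptimizedClustererPackage`, `protobuf_reader.py`, and `cluster_optimizer.py`:

```python
import os
import subprocess
import sys
import tempfile

# Throwaway layout (hypothetical names):
#   <root>/demo_pkg/__init__.py
#   <root>/demo_pkg/reader.py       stands in for protobuf_reader.py
#   <root>/demo_pkg/with_fix.py     absolute import plus the sys.path boilerplate
#   <root>/demo_pkg/without_fix.py  absolute import only
root = tempfile.mkdtemp()
pkg = os.path.join(root, "demo_pkg")
os.makedirs(pkg)

def write(name, text):
    with open(os.path.join(pkg, name), "w") as f:
        f.write(text)

write("__init__.py", "")
write("reader.py", "def read():\n    return 'reader ok'\n")
write("without_fix.py", "from demo_pkg.reader import read\nprint(read())\n")
write("with_fix.py",
      "import sys\n"
      "from pathlib import Path\n"
      "# parents[0] is demo_pkg/, parents[1] is the folder that contains it\n"
      "sys.path.append(str(Path(__file__).resolve().parents[1]))\n"
      "from demo_pkg.reader import read\n"
      "print(read())\n")

def run(script):
    # Run the script by file path from an unrelated working directory
    # (capture_output/text need Python 3.7+)
    return subprocess.run([sys.executable, os.path.join(pkg, script)],
                          cwd=tempfile.gettempdir(),
                          capture_output=True, text=True)

bare = run("without_fix.py")
fixed = run("with_fix.py")
print(bare.returncode != 0, "ModuleNotFoundError" in bare.stderr)  # True True
print(fixed.stdout.strip())                                         # reader ok
```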

Note that there are at least three pdfs, though the best overall value doesn’t change.
