TF Dev Summit

Highlights blog post from the TF product manager

Keynote

- Connecterra tracking cows

- Google is an AI-first company, and all of its products are being influenced. TF is the dogfood that everyone at Google is eating.

Rajat Monga

- The last year has been focused on making TF easy to use

- 11 million downloads

- blog.tensorflow.org

- youtube.com/tensorflow

- tensorflow.org/hub

- tf.keras: a full implementation of the Keras API

- Premade estimators

- Three-line training, from reading data to a model? What data formats are supported?

- Swift and TensorFlow.js

Megan

- Real-world data and time-to-accuracy

- Fast version is the pretty version

- TensorFlow Lite gives a 300% speedup in inference? Just on mobile(?)

- Training speedup is about 300% to 400% annually

- Cloud TPUs (v2) are available: 180 teraflops of computation

- github.com/tensorflow/tpu

- ResNet-50 on Cloud TPU in < 15

Jeff Dean

- Grand Engineering challenges as a list of ML goals

- Engineer the tools for scientific discovery

- AutoML – Hyperparameter tuning

- Requires less expertise (what about data cleaning?)

- Neural architecture search

- Cloud AutoML for computer vision (for now; more later)

- Models on retinal data improve as the data labeling improves: the better trained the human labelers, the better the system they train

- Completely new, novel scientific discoveries: machines can explore horizons in different ways from humans

- Single shot detector

Derek Murray @mrry (tf.data)

- Core TF team

- tf.data: fast, flexible, and easy to use

- ETL for TF

- tensorflow.org/performance/datasets_performance

- Datasets can contain tf.SparseTensor elements

- Dataset.from_generator builds a dataset from a Python generator (e.g. one that yields NumPy arrays)

- for batch in dataset: train_model(batch)

- TF 1.8 will read CSV files directly

- tf.contrib.data.make_batched_features_dataset

- tf.contrib.data.make_csv_dataset()

- Infers column names from the header row and column types from the data
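make_csv_dataset does its inference inside TensorFlow. The sketch below is a rough illustration of the idea in plain Python, with no TF involved, and it assumes behavior rather than copying the library's code. It reads column names from the header row. For each column it picks the narrowest type (int, then float, else str) that parses every value. It then groups the parsed records the way Dataset.batch() would. The helpers read_csv_with_inferred_types and batches are hypothetical names.

```python
import csv
import io


def infer_type(values):
    """Pick the narrowest type (int, then float, else str) that parses every value."""
    for cast in (int, float):
        try:
            for v in values:
                cast(v)
            return cast
        except ValueError:
            continue
    return str


def read_csv_with_inferred_types(text):
    """Hypothetical helper: column names from the header row, types from the data."""
    reader = csv.reader(io.StringIO(text))
    names = next(reader)            # header row supplies the column names
    rows = list(reader)
    columns = list(zip(*rows))      # transpose rows into per-column tuples
    types = [infer_type(col) for col in columns]
    records = [
        {name: cast(value) for name, cast, value in zip(names, types, row)}
        for row in rows
    ]
    return names, types, records


def batches(records, batch_size):
    """Yield consecutive lists of up to batch_size records, like Dataset.batch()."""
    for start in range(0, len(records), batch_size):
        yield records[start:start + batch_size]
```

For input "name,age,height" followed by rows such as "ann,31,1.62", the inferred types are str, int, and float. The loop `for batch in batches(records, 2)` then mirrors the `for batch in dataset` pattern above.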

Alexandre Passos (Eager Execution)

- Eager Execution

- Automatic differentiation

- Differentiation of graphs and code <- what does this mean?

- Quick iterations without building graphs

- Deep inspection of running models

- Dynamic models with complex control flows

- tf.enable_eager_execution()

- TF code runs immediately, so it can be driven by ordinary Python conditionals

- w = tfe.Variable([[1.0]])

- A tape records operations as they run, so gradients can be computed for a variety of approaches written as plain functions

- Eager supports debugging!!!

- And profiling…

- Google Colaboratory (hosted Jupyter notebooks)

- Custom gradients, e.g. clipping to keep gradients from exploding

- tf variables are just python objects.

- tfe.metrics

- Object-oriented saving of TF models: kind of like pickle, in that associated variables are saved as well

- Supports component reuse?

- Single GPU is competitive in speed

- Interacting with graphs: call into graphs from eager code, and call into eager code from a graph

- Use tf.keras.layers, tf.keras.Model, tf.contrib.summary, tfe.metrics, and object-based saving

- Recursive RNNs work well in eager mode

- Live demo goo.gl/eRpP8j

- getting started guide tensorflow.org/programmers_guide/eager

- example models goo.gl/RTHJa5
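Here is a toy reverse-mode differentiation sketch in plain Python that makes the tape idea concrete. Tape and Var are hypothetical names, and this is not TensorFlow's implementation. It shows why recording each operation as it executes keeps ordinary Python control flow differentiable: the tape only ever sees the branch that actually ran.

```python
class Tape:
    """Records each operation's output, inputs, and local derivatives as it runs."""

    def __init__(self):
        self.records = []

    def gradient(self, target, sources):
        grads = {id(target): 1.0}
        # Walk the recorded ops backwards, applying the chain rule.
        for out, inputs in reversed(self.records):
            g = grads.get(id(out), 0.0)
            for inp, local in inputs:
                grads[id(inp)] = grads.get(id(inp), 0.0) + g * local
        return [grads.get(id(s), 0.0) for s in sources]


class Var:
    """A scalar whose arithmetic is recorded on a tape."""

    def __init__(self, value, tape):
        self.value = value
        self.tape = tape

    def __add__(self, other):
        out = Var(self.value + other.value, self.tape)
        self.tape.records.append((out, [(self, 1.0), (other, 1.0)]))
        return out

    def __mul__(self, other):
        out = Var(self.value * other.value, self.tape)
        self.tape.records.append((out, [(self, other.value), (other, self.value)]))
        return out


def f(x):
    # Ordinary Python branching: only the ops that execute are recorded.
    y = x * x
    if x.value > 0:
        y = y + x
    return y
```

With `tape = Tape()` and `x = Var(3.0, tape)`, calling `tape.gradient(f(x), [x])` returns `[7.0]`, because d/dx(x*x + x) = 2x + 1. For a negative x the `+ x` branch never runs, so the gradient is just 2x.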

Daniel Smilkov (@dsmilkov) and Nikhil Thorat (@nsthorat)

- In-Browser ML (No drivers, no installs)

- Interactive

- Browsers have access to sensors

- Data stays on the client (preprocessing stage)

- Allows inference and training entirely in the browser

- TensorFlow.js

- Author models directly in the browser

- import pre-trained models for inference

- re-train imported models (with private data)

- Layers API, (Eager) Ops API

- Can port Keras or TF models

- Can continue to train a model that is downloaded from the website

- This is really nice for accessibility

- js.tensorflow.org

- github.com/tensorflow/tfjs

- Mailing list: goo.gl/drqpT5

Brennan Saeta

- Performance optimization

- Performance needs to increase exponentially to keep training better models

- tf.data is the way to load data

- TensorBoard profiling tools

- Trace viewer within TensorBoard

- Map functions seem to take a long time?

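- dataset.map(parser_fn, num_parallel_calls=64) <- multithreading: runs the parser on many elements in parallel

- Software pipelining: dataset.prefetch() overlaps input preprocessing with training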

- Distributed datasets are becoming critical. They will not fit on a single instance

- Accelerators work in a variety of ways, so optimizing is hardware dependent. For example, lower precision can be much faster

- bfloat16 (brain floating point) format: keeps float32's 8-bit exponent range, so it copes better with vanishing and exploding gradients than float16

- Systolic arrays load the hardware matrix while it’s multiplying, since you start at the upper-left corner…

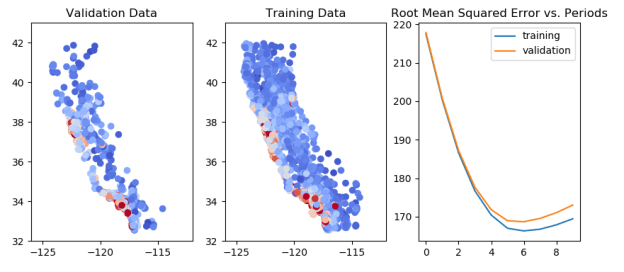

- Hardware is becoming harder and harder to compare apples-to-apples. You need to measure end-to-end on your own workloads. As a proxy, use Stanford’s DAWNBench

- Two frameworks: XLA and Graph
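The bfloat16 point above can be checked with the standard library alone. The sketch assumes the usual bfloat16 layout: float32's sign bit and 8 exponent bits, plus only the top 7 mantissa bits. It converts by simple truncation (dropping the low 16 bits). Real hardware typically rounds to nearest instead, so treat this as an approximation. Python's struct 'e' format (IEEE float16, 5 exponent bits) serves as the comparison point. to_bfloat16 and to_float16 are hypothetical helper names.

```python
import struct


def to_bfloat16(x):
    """Round-trip a float through bfloat16 by truncation (keep the top 16 bits of float32)."""
    bits = struct.unpack(">I", struct.pack(">f", x))[0]
    return struct.unpack(">f", struct.pack(">I", (bits >> 16) << 16))[0]


def to_float16(x):
    """Round-trip a float through IEEE float16 using struct's 'e' format."""
    return struct.unpack(">e", struct.pack(">e", x))[0]


# A tiny gradient-sized value survives in bfloat16 but underflows in float16.
print(to_float16(1e-30))   # 0.0
print(to_bfloat16(1e-30) != 0.0)  # True
# Large values fit bfloat16's range, with only about 2-3 significant digits kept.
print(to_bfloat16(1e5))    # 99840.0
```

Calling `to_float16(1e5)` raises OverflowError, because float16 tops out at 65504. That range gap is the reason bfloat16 is easier on vanishing and exploding gradients than float16.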

Mustafa Ispir (tf.estimator, high level modules for experiments and scaling)

- Estimators fill in the rest of the model code, based on Google’s experience

- Define the task as an ML problem

- Premade estimators

- reasonable defaults

- feature columns – bucketing, embedding, etc

- estimator = tf.keras.estimator.model_to_estimator(keras_model)

- image = hub.image_embedding_column(…)

- supports scaling

- export to production

- estimator.export_savedmodel()

- Feature columns (from csv, etc) intro, goo.gl/nMEPBy

- Estimators documentation, custom estimators

- Wide-n-deep (goo.gl/l1cL3N from 2017)

- Estimators and Keras (goo.gl/ito9LE Effective TensorFlow for Non-Experts)
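Bucketing is one of the feature-column transforms mentioned above. The plain-Python sketch below reproduces the bucket semantics documented for tf.feature_column.bucketized_column: sorted boundaries [0, 10, 100] define buckets (-inf, 0), [0, 10), [10, 100), [100, +inf). It does not use TF, and bucketize and one_hot_bucket are hypothetical names.

```python
import bisect


def bucketize(value, boundaries):
    """Return the index of the bucket containing value; buckets are left-closed."""
    return bisect.bisect_right(boundaries, value)


def one_hot_bucket(value, boundaries):
    """Encode the bucket as a one-hot list with len(boundaries) + 1 slots."""
    vec = [0] * (len(boundaries) + 1)
    vec[bucketize(value, boundaries)] = 1
    return vec
```

For example, an age of 25 with boundaries [18, 25, 35, 50] lands in the [25, 35) bucket, giving [0, 0, 1, 0, 0]. Using bisect_right is what makes a value equal to a boundary fall into the bucket that starts there.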

Igor Saprykin

- distributed tensorflow

- Estimator is TF’s highest level of abstraction in the API. Google recommends using the highest level of abstraction you can be effective in

- Justine: debugging with the TensorFlow Debugger

- plugins are how you add features

- embedding projector with interactive label editing

Sarah Sirajuddin, Andrew Selle (TensorFlow Lite) On-device ML

- TF Lite interpreter is only 75 kilobytes!

- Would be useful as a biometric anonymizer for trustworthy anonymous citizen journalism. Maybe even adversarial recognition

- Introduction to TensorFlow Lite → https://goo.gl/8GsJVL

- Take a look at this article “Using TensorFlow Lite on Android” → https://goo.gl/J1ZDqm

Vijay Vasudevan AutoML @spezzer

- Theory lags practice in this valuable discipline

- Iteration using human input

- Design your code to be tunable at all levels

- Submit your idea to an idea bank
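"Design your code to be tunable at all levels" amounts to exposing every knob as a parameter that a search procedure can set. The sketch below shows that with plain random search over a hypothetical search space. Random search is a deliberately simple stand-in, not the AutoML or neural architecture search method from the talk. train_and_evaluate is a hypothetical toy objective standing in for a real training run.

```python
import random


def train_and_evaluate(config):
    """Hypothetical stand-in for a training run; returns a validation loss.

    This toy objective is minimized at learning_rate=0.1 and num_layers=4.
    """
    return (config["learning_rate"] - 0.1) ** 2 + 0.01 * (config["num_layers"] - 4) ** 2


# Every tunable knob is declared in one place, with its own sampler.
SEARCH_SPACE = {
    "learning_rate": lambda rng: 10 ** rng.uniform(-4, 0),  # log-uniform in [1e-4, 1]
    "num_layers": lambda rng: rng.randint(1, 8),            # inclusive range
}


def random_search(num_trials, seed=0):
    """Sample configs, evaluate each, and return (best_trial, all_trials)."""
    rng = random.Random(seed)
    trials = []
    for _ in range(num_trials):
        config = {name: sample(rng) for name, sample in SEARCH_SPACE.items()}
        trials.append((train_and_evaluate(config), config))
    return min(trials, key=lambda t: t[0]), trials
```

Because the search space is just data, adding another knob (say, a dropout rate) means adding one entry to SEARCH_SPACE rather than rewriting the training code.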

Ian Langmore

- Nuclear Fusion

- TF for math, not ML

Cory McLean

- Genomics

- Would this be useful for genetic algorithms as well?

Ed Wilder-James

- Open source TF community

- Developers mailing list developers@tensorflow.org

- tensorflow.org/community

- SIGs: SIG Build, others coming up

- SIG TensorBoard <- this

Chris Lattner

- Improved usability of TF

- 2 approaches, Graph and Eager

- Compiler analysis?

- Swift language support as a better option than Python?

- Richard Wei

- Did not actually see the compilation process with error messages?

TensorFlow Hub Andrew Gasparovic and Jeremiah Harmsen

- Version control for ML

- Reusable module within the hub. Less than a model, but shareable

- Retrainable and backpropagatable

- Re-use the architecture and trained weights (and save many, many, many hours of training)

- tensorflow.org/hub

- module = hub.Module(…, trainable=True)

- Pretrained and ready to use for classification

- Packages the graph and the data

- Universal Sentence Encoder: semantic similarity, etc., with very little training data

- Lower the learning rate so that you don’t ruin the existing weights

- tfhub.dev

- modules are immutable

- Colab notebooks

- use #tfhub when modules are completed

- Try out the end-to-end example on GitHub → https://goo.gl/4DBvX7

TFX (TensorFlow Extended) Clemens Mewald and Raz Mathias

- TFX is developed to support lifecycle from data gathering to production

- Transform: Develop training model and serving model during development

- The model takes raw data as the request; the transform is done in the graph

- RESTful API

- Model Analysis:

- ml-fairness.com – ROC curve for every group of users

- github.com/tensorflow/transform
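The point of doing the transform in the graph is that training and serving apply exactly the same preprocessing. The plain-Python sketch below shows the two-phase idea behind this. An analyze step makes a full pass over the training data to compute statistics. Those statistics are then frozen into one transform function that is reused unchanged at serving time. This illustrates the concept only, not the tf.Transform API. analyze and make_transform are hypothetical names.

```python
import statistics


def analyze(training_values):
    """Full-pass 'analyze' phase: compute statistics over the whole training set."""
    mean = statistics.mean(training_values)
    std = statistics.pstdev(training_values) or 1.0  # avoid dividing by zero
    return mean, std


def make_transform(mean, std):
    """Freeze the statistics into one function shared by training and serving."""
    def transform(raw_value):
        return (raw_value - mean) / std
    return transform
```

A serving request would then pass raw data through the same `transform` before calling the model. That removes the risk of hand-reimplementing the scaling, slightly differently, in the serving stack.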

Project Magenta (Sherol Chen)

People:

- Suharsh Sivakumar – Google

- Billy Lamberta (documentation?) Google

- Ashay Agrawal Google

- Rajesh Anantharaman Cray

- Amanda Casari Concur Labs

- Gary Engler Elemental Path

- Keith J Bennett (bennett@bennettresearchtech.com – ask about rover decision transcripts)

- Sandeep N. Gupta (sandeepngupta@google.com – ask about integration of latent variables into TF usage as a way of understanding the space better)

- Charlie Costello (charlie.costello@cloudminds.com – human robot interaction communities)

- Kevin A. Shaw (kevin@algoint.com data from elderly to infer condition)
