7:00 – 4:00 ASRC MKT

- More BIC

- One of the things that MB seems to be saying here is that group identification has two parts. First is the self-identification with the group. Second is the mechanism that supports that framing. You can’t belong to a group you don’t see.

- To generalize the notions of team mechanism and team to unreliable contexts, we need the idea of the profile that gets enacted if all the agents function under a mechanism. Call this the protocol delivered by the mechanism. The protocol is, roughly, what everyone is supposed to do, what everyone does if the mechanism functions without any failure. But because there may well be failures, the protocol of a mechanism may not get enacted, some agents not playing their part but doing their default actions instead. For this reason the best protocol to have is not in general the first-best profile o*. In judging mechanisms we must take account of the states of the world in which there are failures, with their associated probabilities. How? Put it this way: if we are choosing a mechanism, we want one that delivers the protocol that maximizes the expected value of U. (pg 131)
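The quoted argument can be made concrete with a small sketch. It assumes two agents, each of whom independently functions under the mechanism with the same probability p and otherwise plays a default action. The payoff table U, the actions "A"/"B", and the shared default "B" are hypothetical numbers chosen for illustration, not values from the book. With these numbers the first-best profile (A, A) loses to (B, B) once failures are likely enough.

```python
from itertools import product

ACTIONS = ("A", "B")
# Hypothetical default action each agent falls back to when the mechanism fails for it.
DEFAULTS = ("B", "B")
# Hypothetical team payoff U over joint profiles; (A, A) is the first-best o*.
U = {
    ("A", "A"): 10.0,
    ("A", "B"): 0.0,
    ("B", "A"): 0.0,
    ("B", "B"): 6.0,
}


def expected_u(protocol, p_works):
    """Expected U of a protocol when each agent independently functions with
    probability p_works and otherwise plays its default action (assumption)."""
    total = 0.0
    for works in product((True, False), repeat=len(protocol)):
        prob = 1.0
        enacted = []
        for i, ok in enumerate(works):
            prob *= p_works if ok else 1.0 - p_works
            enacted.append(protocol[i] if ok else DEFAULTS[i])
        total += prob * U[tuple(enacted)]
    return total


def best_protocol(p_works):
    """Protocol that maximizes expected U over all joint action profiles."""
    candidates = product(ACTIONS, repeat=len(DEFAULTS))
    return max(candidates, key=lambda o: expected_u(o, p_works))


first_best = max(U, key=U.get)
print(first_best)                              # ('A', 'A')
print(round(expected_u(("A", "A"), 0.7), 2))   # 5.44
print(best_protocol(0.7))                      # ('B', 'B')
print(best_protocol(0.95))                     # ('A', 'A')
```

With p = 0.7 the expected value of protocol (A, A) is 0.49 * 10 + 0.09 * 6 = 5.44, below the guaranteed 6 of (B, B); under these toy numbers the first-best protocol only wins again above p = 0.75.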

- Group identification is a framing phenomenon. Among the many different dimensions of the frame of a decision-maker is the ‘unit of agency’ dimension: the framing agent may think of herself as an individual doer or as part of some collective doer. The first type of frame is operative in ordinary game-theoretic, individualistic reasoning, and the second in team reasoning. The concept-clusters of these two basic framings center round ‘I/ she/he’ concepts and ‘we’ concepts respectively. Players in the two types of frame begin their reasoning with the two basic conceptualizations of the situation, as a ‘What shall I do?’ problem, and a ‘What shall we do?’ problem, respectively. (pg 137)
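A toy version of the two frames, using a Hi-Lo coordination game with hypothetical payoffs. Asking "What shall I do?", a player finds two pure equilibria and no reason, from best-response reasoning alone, to prefer one. Asking "What shall we do?", the pair picks the profile that is best for the group and each player then does her part. Scoring the group by the sum of payoffs is an assumption made here for simplicity.

```python
from itertools import product

ACTIONS = ("Hi", "Lo")
# Hypothetical Hi-Lo payoffs: (row player's payoff, column player's payoff).
PAYOFFS = {
    ("Hi", "Hi"): (2, 2),
    ("Hi", "Lo"): (0, 0),
    ("Lo", "Hi"): (0, 0),
    ("Lo", "Lo"): (1, 1),
}


def pure_nash_equilibria():
    """'What shall I do?': profiles where neither player gains by deviating alone."""
    equilibria = []
    for row, col in product(ACTIONS, repeat=2):
        u_row, u_col = PAYOFFS[(row, col)]
        row_best = all(PAYOFFS[(alt, col)][0] <= u_row for alt in ACTIONS)
        col_best = all(PAYOFFS[(row, alt)][1] <= u_col for alt in ACTIONS)
        if row_best and col_best:
            equilibria.append((row, col))
    return equilibria


def team_profile():
    """'What shall we do?': the profile with the highest group payoff (sum, an assumption)."""
    return max(PAYOFFS, key=lambda profile: sum(PAYOFFS[profile]))


def my_part(player):
    """A team reasoner plays her component of the team's best profile."""
    return team_profile()[player]


print(pure_nash_equilibria())  # [('Hi', 'Hi'), ('Lo', 'Lo')]
print(team_profile())          # ('Hi', 'Hi')
print(my_part(0), my_part(1))  # Hi Hi
```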

- Analyzing the Digital Traces of Political Manipulation: The 2016 Russian Interference Twitter Campaign

- Until recently, social media was seen to promote democratic discourse on social and political issues. However, this powerful communication platform has come under scrutiny for allowing hostile actors to exploit online discussions in an attempt to manipulate public opinion. A case in point is the ongoing U.S. Congress investigation of Russian interference in the 2016 U.S. election campaign, with Russia accused of, among other things, using trolls (malicious accounts created for the purpose of manipulation) and bots (automated accounts) to spread misinformation and politically biased information. In this study, we explore the effects of this manipulation campaign, taking a closer look at users who re-shared the posts produced on Twitter by the Russian troll accounts publicly disclosed by U.S. Congress investigation. We collected a dataset with over 43 million elections-related posts shared on Twitter between September 16 and October 21, 2016 by about 5.7 million distinct users. This dataset included accounts associated with the identified Russian trolls. We use label propagation to infer the ideology of all users based on the news sources they shared. This method enables us to classify a large number of users as liberal or conservative with precision and recall above 90%. Conservatives retweeted Russian trolls about 31 times more often than liberals and produced 36 times more tweets. Additionally, most retweets of troll content originated from two Southern states: Tennessee and Texas. Using state-of-the-art bot detection techniques, we estimated that about 4.9% and 6.2% of liberal and conservative users respectively were bots. Text analysis on the content shared by trolls reveals that they had a mostly conservative, pro-Trump agenda. Although an ideologically broad swath of Twitter users were exposed to Russian trolls in the period leading up to the 2016 U.S. Presidential election, it was mainly conservatives who helped amplify their message.
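Label propagation, as named in the abstract, can be sketched roughly as follows. The abstract says only that ideology was inferred by label propagation from the news sources users shared. The undirected user graph, the +1 (conservative) / -1 (liberal) seed encoding, the neighbor averaging with clamped seeds, the "unknown" fallback, and the fixed iteration count below are all assumptions, not the paper's exact procedure.

```python
from collections import defaultdict


def propagate_labels(edges, seeds, iterations=50):
    """Infer a lean for unlabeled users by repeatedly averaging neighbor scores.

    edges: iterable of (user, user) pairs, treated as undirected (assumption).
    seeds: {user: +1.0 for conservative, -1.0 for liberal} (hypothetical encoding).
    """
    neighbors = defaultdict(set)
    for u, v in edges:
        neighbors[u].add(v)
        neighbors[v].add(u)

    # Seeds start at their label; everyone else starts neutral at 0.
    scores = {node: seeds.get(node, 0.0) for node in neighbors}
    for _ in range(iterations):
        updated = {}
        for node, nbrs in neighbors.items():
            if node in seeds:
                updated[node] = seeds[node]  # seed labels stay clamped
            else:
                updated[node] = sum(scores[n] for n in nbrs) / len(nbrs)
        scores = updated

    def to_label(score):
        if score > 0:
            return "conservative"
        if score < 0:
            return "liberal"
        return "unknown"

    return {node: to_label(s) for node, s in scores.items() if node not in seeds}


edges = [("alice", "bob"), ("bob", "carol"), ("carol", "dave"), ("dave", "erin")]
seeds = {"alice": 1.0, "erin": -1.0}
print(propagate_labels(edges, seeds))
# {'bob': 'conservative', 'carol': 'unknown', 'dave': 'liberal'}
```

Users between the two seeds inherit a lean from their neighbors; carol, equidistant from both hypothetical seeds, stays at 0 and is left unlabeled rather than forced into a class.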

- CHIIR Talk

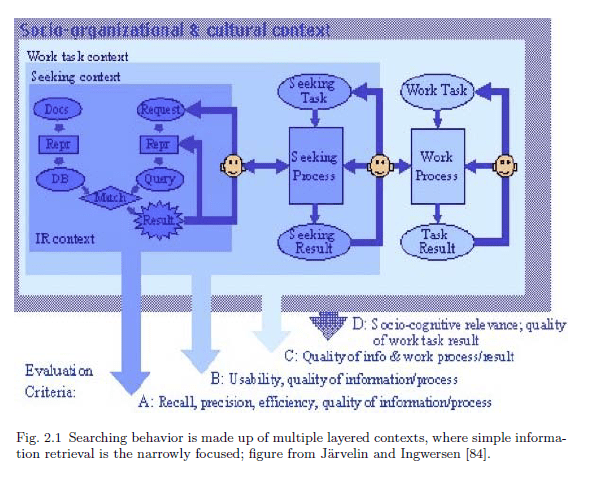

- Make new IR-Context graphic – done!

- De-uglify JuryRoom – done!

- TensorFlow’s Machine Learning Crash Course
