The GPT-Neo team is proud to release two pretrained GPT-Neo models trained on The Pile. The weights and configs can be freely downloaded from the-eye.eu:

- 1.3B: https://the-eye.eu/public/AI/gptneo-release/GPT3_XL/

- 2.7B: https://the-eye.eu/public/AI/gptneo-release/GPT3_2-7B/

3:30 Hugging Face meeting

Pay bills

Send the RV back to the shop. Again. Create a checklist:

- When disconnected from shore power, please verify:

  - Lights come on

  - Generator starts

  - Refrigerator light comes on

  - Microwave runs

  - All status panels are functioning

  - Water pressure pump runs

GOES

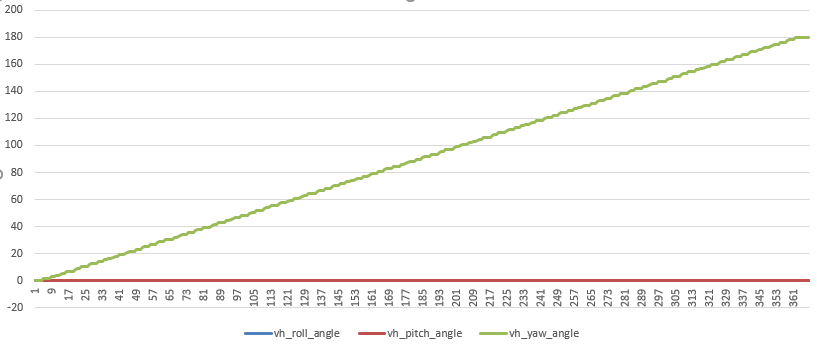

- Check out and verify Vadim’s code works

- 2:00 Meeting

GPT Agents

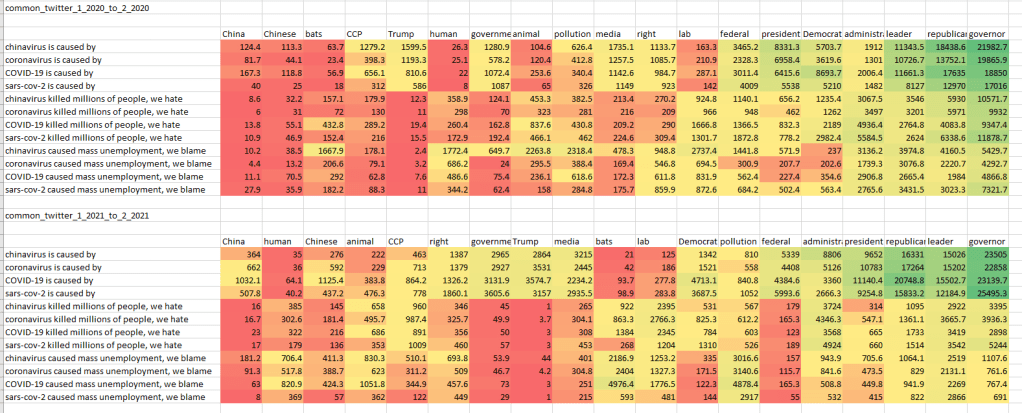

- Finished terms and nouns over the weekend and started rank runs

SBIR/ONR

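- Some good content: The uncontrollability of Artificial Intelligence, which illustrates four types of control with a self-driving car whose passenger says "Please stop the car!":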

- Explicit control – AI immediately stops the car, even in the middle of the highway, because it interprets demands literally. This is what we have today with assistants such as Siri and other narrow AIs.

- Implicit control – AI attempts to comply safely by stopping the car at the first safe opportunity, perhaps on the shoulder of the road. This AI has some common sense, but still tries to follow commands.

- Aligned control – AI understands that the human is probably looking for an opportunity to use a restroom and pulls over to the first rest stop. This AI relies on its model of the human to understand the intentions behind the command.

- Delegated control – AI does not wait for the human to issue any commands. Instead, it stops the car at the gym because it believes the human can benefit from a workout. This is a superintelligent and human-friendly system which knows how to make the human happy and to keep them safe better than the human themselves. This AI is in control.

- Machine learning methods offer great promise for fast and accurate detection and prognostication of coronavirus disease 2019 (COVID-19) from standard-of-care chest radiographs (CXR) and chest computed tomography (CT) images. Many articles have been published in 2020 describing new machine learning-based models for both of these tasks, but it is unclear which are of potential clinical utility. In this systematic review, we consider all published papers and preprints, for the period from 1 January 2020 to 3 October 2020, which describe new machine learning models for the diagnosis or prognosis of COVID-19 from CXR or CT images. All manuscripts uploaded to bioRxiv, medRxiv and arXiv along with all entries in EMBASE and MEDLINE in this timeframe are considered. Our search identified 2,212 studies, of which 415 were included after initial screening and, after quality screening, 62 studies were included in this systematic review. Our review finds that none of the models identified are of potential clinical use due to methodological flaws and/or underlying biases. This is a major weakness, given the urgency with which validated COVID-19 models are needed. To address this, we give many recommendations which, if followed, will solve these issues and lead to higher-quality model development and well-documented manuscripts.

- Many papers gave little attention to establishing the original source of the images

- All proposed models suffer from a high or unclear risk of bias in at least one domain

- We advise caution over the use of public repositories, which can lead to high risks of bias due to source issues and Frankenstein datasets as discussed above

- [Researchers] should aim to match demographics across cohorts, an often neglected but important potential source of bias; this can be impossible with public datasets that do not include demographic information

- Researchers should be aware that algorithms might associate more severe disease not with CXR imaging features, but the view that has been used to acquire that CXR. For example, for patients that are sick and immobile, an anteroposterior CXR view is used for practicality rather than the standard posteroanterior CXR projection

- We emphasize the importance of using a well-curated external validation dataset of appropriate size to assess generalizability

- Calibration statistics should be calculated for the developed models to inform predictive error and decision curve analysis
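To make the calibration recommendation concrete, here is a minimal sketch in plain Python of three statistics it could refer to: the Brier score, a binned expected calibration error, and net benefit at a single threshold (the quantity plotted in a decision curve). The function names, the bin count, and the toy predictions are my own assumptions for illustration and are not taken from the review.

```python
from typing import List, Tuple


def brier_score(probs: List[float], labels: List[int]) -> float:
    """Mean squared difference between predicted probability and outcome."""
    return sum((p - y) ** 2 for p, y in zip(probs, labels)) / len(probs)


def reliability_bins(
    probs: List[float], labels: List[int], n_bins: int = 5
) -> List[Tuple[float, float, int]]:
    """Return (mean predicted prob, observed frequency, count) per non-empty bin."""
    bins: List[List[Tuple[float, int]]] = [[] for _ in range(n_bins)]
    for p, y in zip(probs, labels):
        idx = min(int(p * n_bins), n_bins - 1)  # p == 1.0 goes in the last bin
        bins[idx].append((p, y))
    out = []
    for b in bins:
        if b:
            mean_p = sum(p for p, _ in b) / len(b)
            freq = sum(y for _, y in b) / len(b)
            out.append((mean_p, freq, len(b)))
    return out


def expected_calibration_error(
    probs: List[float], labels: List[int], n_bins: int = 5
) -> float:
    """Count-weighted average gap between predicted and observed frequency."""
    n = len(probs)
    return sum(
        count / n * abs(mean_p - freq)
        for mean_p, freq, count in reliability_bins(probs, labels, n_bins)
    )


def net_benefit(probs: List[float], labels: List[int], threshold: float) -> float:
    """Net benefit of treating everyone with predicted risk >= threshold."""
    n = len(probs)
    tp = sum(1 for p, y in zip(probs, labels) if p >= threshold and y == 1)
    fp = sum(1 for p, y in zip(probs, labels) if p >= threshold and y == 0)
    return tp / n - (fp / n) * threshold / (1 - threshold)


if __name__ == "__main__":
    # Toy, made-up predictions: NOT data from any study.
    probs = [0.1, 0.2, 0.8, 0.9]
    labels = [0, 0, 1, 1]
    print("Brier:", brier_score(probs, labels))
    print("ECE:", expected_calibration_error(probs, labels))
    print("Net benefit @0.5:", net_benefit(probs, labels, 0.5))
```

Sweeping `threshold` over a range and plotting `net_benefit` against it would give a rough decision curve. In practice these numbers only mean something when computed on a held-out or external validation set, as the review stresses.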
