You can really tell that the days are getting shorter

Efficient Training of Language Models to Fill in the Middle (This is basically the reverse GPT concept)

- We show that autoregressive language models can learn to infill text after we apply a straightforward transformation to the dataset, which simply moves a span of text from the middle of a document to its end. While this data augmentation has garnered much interest in recent years, we provide extensive evidence that training models with a large fraction of data transformed in this way does not harm the original left-to-right generative capability, as measured by perplexity and sampling evaluations across a wide range of scales. Given the usefulness, simplicity, and efficiency of training models to fill-in-the-middle (FIM), we suggest that future autoregressive language models be trained with FIM by default. To this end, we run a series of ablations on key hyperparameters, such as the data transformation frequency, the structure of the transformation, and the method of selecting the infill span. We use these ablations to prescribe strong default settings and best practices to train FIM models. We have released our best infilling model trained with best practices in our API, and release our infilling benchmarks to aid future research.
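The transformation the abstract describes is simple enough to sketch. This is a minimal sketch, not the paper's code. It is modeled loosely on the prefix-suffix-middle (PSM) ordering. The sentinel strings `<PRE>`, `<SUF>`, `<MID>`, the function name `fim_transform`, and the `fim_rate` default are illustrative assumptions. A trained model would use dedicated special tokens, and the paper runs ablations to tune the rate. The code splits a document at two random character positions and moves the middle span to the end, so an ordinary left-to-right model learns to emit the middle after seeing both the prefix and the suffix:

```python
import random

# Hypothetical sentinel strings; real FIM models use dedicated special tokens.
PRE, SUF, MID = "<PRE>", "<SUF>", "<MID>"


def fim_transform(doc: str, rng: random.Random, fim_rate: float = 0.5) -> str:
    """Apply a character-level FIM transform (PSM order) with probability fim_rate.

    Documents that are not transformed stay as ordinary left-to-right training data.
    """
    if not doc or rng.random() >= fim_rate:
        return doc
    # Pick two distinct cut points uniformly to get prefix / middle / suffix.
    i, j = sorted(rng.sample(range(len(doc) + 1), 2))
    prefix, middle, suffix = doc[:i], doc[i:j], doc[j:]
    # Move the middle span to the end; the model still trains left-to-right on this string.
    return f"{PRE}{prefix}{SUF}{suffix}{MID}{middle}"


if __name__ == "__main__":
    rng = random.Random(0)
    print(fim_transform("def add(a, b):\n    return a + b\n", rng, fim_rate=1.0))
```

At inference time, you would feed `<PRE>prefix<SUF>suffix<MID>` and let the model generate the middle. Because untransformed documents pass through unchanged, ordinary left-to-right training data remains part of the mix. That is consistent with the paper's claim that FIM training need not hurt left-to-right generation.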

Patching open-vocabulary models by interpolating weights

- Open-vocabulary models like CLIP achieve high accuracy across many image classification tasks. However, there are still settings where their zero-shot performance is far from optimal. We study model patching, where the goal is to improve accuracy on specific tasks without degrading accuracy on tasks where performance is already adequate. Towards this goal, we introduce PAINT, a patching method that uses interpolations between the weights of a model before fine-tuning and the weights after fine-tuning on a task to be patched. On nine tasks where zero-shot CLIP performs poorly, PAINT increases accuracy by 15 to 60 percentage points while preserving accuracy on ImageNet within one percentage point of the zero-shot model. PAINT also allows a single model to be patched on multiple tasks and improves with model scale. Furthermore, we identify cases of broad transfer, where patching on one task increases accuracy on other tasks even when the tasks have disjoint classes. Finally, we investigate applications beyond common benchmarks such as counting or reducing the impact of typographic attacks on CLIP. Our findings demonstrate that it is possible to expand the set of tasks on which open-vocabulary models achieve high accuracy without re-training them from scratch.
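The core PAINT operation is a per-parameter linear interpolation between the zero-shot weights and the fine-tuned weights: θ = (1 − α)·θ_zs + α·θ_ft. Here is a minimal sketch using plain Python lists in place of real tensors. The names `interpolate` and `select_alpha`, and the `score` callback, are hypothetical. The callback stands in for whatever held-out evaluation is used to pick the mixing coefficient. This is not the authors' implementation.

```python
from typing import Callable, Dict, List, Sequence

# Toy stand-in for a model's state dict: parameter name -> flat list of weights.
Weights = Dict[str, List[float]]


def interpolate(theta_zs: Weights, theta_ft: Weights, alpha: float) -> Weights:
    """Return (1 - alpha) * theta_zs + alpha * theta_ft, parameter by parameter."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    if theta_zs.keys() != theta_ft.keys():
        raise ValueError("both models must have the same parameter names")
    return {
        name: [(1 - alpha) * a + alpha * b for a, b in zip(theta_zs[name], theta_ft[name])]
        for name in theta_zs
    }


def select_alpha(
    theta_zs: Weights,
    theta_ft: Weights,
    score: Callable[[Weights], float],  # hypothetical held-out evaluation
    alphas: Sequence[float],
) -> float:
    """Pick the mixing coefficient whose interpolated weights score highest."""
    return max(alphas, key=lambda a: score(interpolate(theta_zs, theta_ft, a)))


if __name__ == "__main__":
    zs = {"w": [0.0, 2.0]}
    ft = {"w": [1.0, 4.0]}
    print(interpolate(zs, ft, 0.5))
```

α = 0 recovers the zero-shot model and α = 1 recovers the fine-tuned one. In a real patch, the score would balance accuracy on the patched task against accuracy on supported tasks such as ImageNet, which is the trade-off the abstract describes.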

Alex Jones and the Lie Economy

- Discerning audiences who stumble on Jones’ show turn him off, but his message excites the credulous who, if they don’t fully subscribe to the man’s views, want to hear more of the same. Lies are almost always more exciting and exploitable than dull truths. Having culled the impressionable from the doubting and boosted their pulse rate, he turns them over to his merchandising wing where he sells survivalist gear and health supplements like Brain Force Ultra, Winter Sun Plus Vitamin D and a variety of “Superblue Silver” products (immune gargle, toothpaste and wound dressing) that Jones claimed could mitigate Covid. It’s not incidental that the products he hawks are presented as the fix for coming apocalyptic perils predicted on his shows. Citing court filings submitted by Jones’ attorneys in discovery, HuffPost reports that InfoWars collected $165 million in sales of these products from September 2015 to the end of 2018.

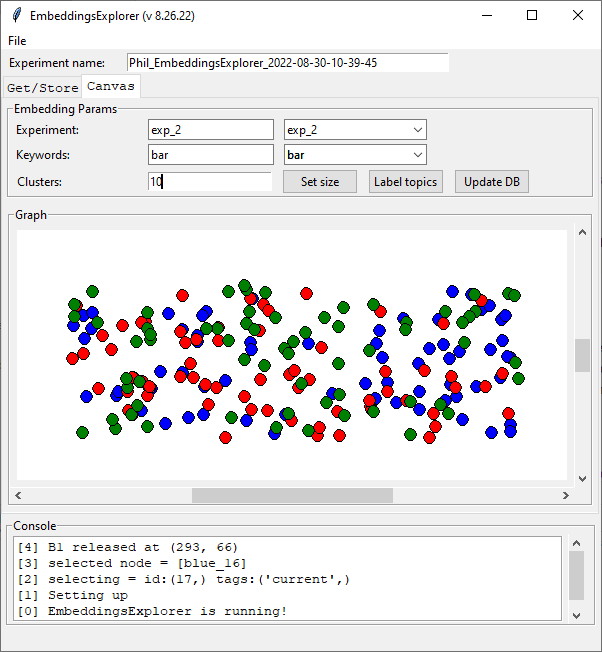

GPT-Agents

- Continue Chirp submission

- See if topic2vec works, and if it can tell the difference between ivermectin and paxlovid posts

- 3:30 Meeting

- IUI 2023

SBIRs

- 8:30 SEG staffing changes

- 9:00 Sprint planning

- Chirp

- MORS

- Quarterly Report

- RCSNN/3D graphics

Book

- University of Toronto Press?

- Started on the Strategy and Tactics in Online Conflict proposal
