7:00 – 4:30 ASRC PhD

- Make an integer generator by scaling and shifting the floating-point generator to the desired range and then truncating. It would be fun to read in a token list and have the waveform be words.

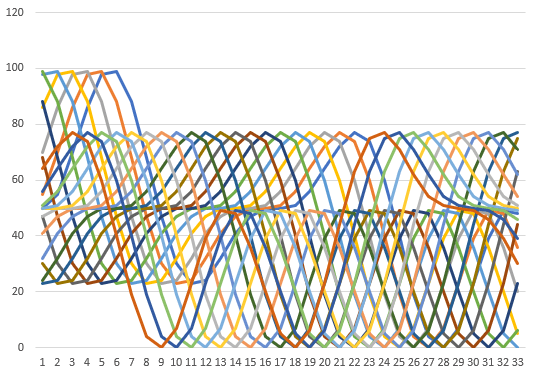
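Here's a minimal sketch of the scale, shift and truncate idea from the first item. It assumes the float generator is just a Python function sampled at evenly spaced x values. The names `int_waveform`, `rows`, `delta`, `lo` and `hi` are hypothetical, and normalizing by the observed min and max (rather than a known amplitude) is my assumption, not necessarily what the real generator does. The `rows=100, delta=0.4` values are borrowed from the config line of the words run later in this post, and reading "0 – 100" as the output range is also an assumption.

```python
import math
from typing import Callable, List


def int_waveform(func: Callable[[float], float], rows: int, delta: float,
                 lo: int, hi: int) -> List[int]:
    """Hypothetical helper: sample func at x = 0, delta, 2*delta, ...
    and map the samples onto integers in [lo, hi]."""
    ys = [func(i * delta) for i in range(rows)]
    y_min, y_max = min(ys), max(ys)
    span = (y_max - y_min) or 1.0  # guard against a flat signal
    out = []
    for y in ys:
        unit = (y - y_min) / span  # shift and scale into [0.0, 1.0]
        # scale to [lo, hi], then truncate toward zero with int()
        out.append(int(lo + unit * (hi - lo)))
    return out


if __name__ == "__main__":
    # The function from these notes, mapped onto the integers 0..100
    def f(xx: float) -> float:
        return math.sin(xx) * math.sin(xx / 2.0) * math.cos(xx / 4.0)

    print(int_waveform(f, rows=100, delta=0.4, lo=0, hi=100))
```

Because `int()` truncates toward zero, a target range with a negative `lo` would want `math.floor` instead to keep the steps evenly sized.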

- Done with the int waveform. This is an integer waveform of the function `math.sin(xx)*math.sin(xx/2.0)*math.cos(xx/4.0)` set on a range from 0 to 100:


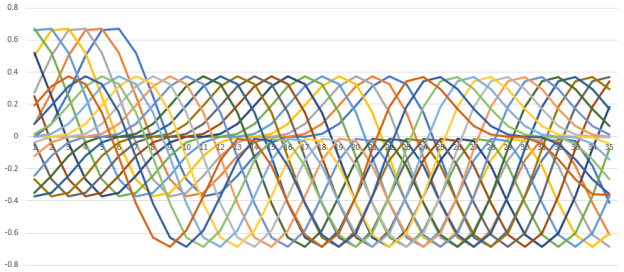

- And here’s the unmodified floating-point version of the same function:

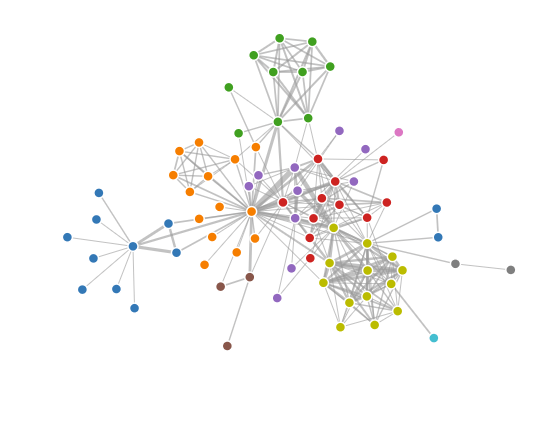

- Here’s the same function as words:

#confg: {"function":math.sin(xx)*math.sin(xx/2.0)*math.cos(xx/4.0), "rows":100, "sequence_length":20, "step":1, "delta":0.4, "type":"floating_point"} routed, traps, thrashing, fifteen, ultimately, dealt, anyway, apprehensions, boats, job, descended, tongue, dripping, adoration, boats, routed, routed, strokes, cheerful, charleses, traps, thrashing, fifteen, ultimately, dealt, anyway, apprehensions, boats, job, descended, tongue, dripping, adoration, boats, routed, routed, strokes, cheerful, charleses, travellers, thrashing, fifteen, ultimately, dealt, anyway, apprehensions, boats, job, descended, tongue, dripping, adoration, boats, routed, routed, strokes, cheerful, charleses, travellers, unsuspected, fifteen, ultimately, dealt, anyway, apprehensions, boats, job, descended, tongue, dripping, adoration, boats, routed, routed, strokes, cheerful, charleses, travellers, unsuspected, malingerer, ultimately, dealt, anyway, apprehensions, boats, job, descended, tongue, dripping, adoration, boats, routed, routed, strokes, cheerful, charleses, travellers, unsuspected, malingerer, respect, dealt, anyway, apprehensions, boats, job, descended, tongue, dripping, adoration, boats, routed, routed, strokes, cheerful, charleses, travellers, unsuspected, malingerer, respect, aback, anyway, apprehensions, boats, job, descended, tongue, dripping, adoration, boats, routed, routed, strokes, cheerful, charleses, travellers, unsuspected, malingerer, respect, aback, vair', apprehensions, boats, job, descended, tongue, dripping, adoration, boats, routed, routed, strokes, cheerful, charleses, travellers, unsuspected, malingerer, respect, aback, vair', wraith, boats, job, descended, tongue, dripping, adoration, boats, routed, routed, strokes, cheerful, charleses, travellers, unsuspected, malingerer, respect, aback, vair', wraith, bare, job, descended, tongue, dripping, adoration, boats, routed, routed, strokes, cheerful, charleses, travellers, unsuspected, malingerer, respect, aback, vair', 
wraith, bare, creek, descended, tongue, dripping, adoration, boats, routed, routed, strokes, cheerful, charleses, travellers, unsuspected, malingerer, respect, aback, vair', wraith, bare, creek, descended, tongue, dripping, adoration, boats, routed, routed, strokes, cheerful, charleses, travellers, unsuspected, malingerer, respect, aback, vair', wraith, bare, creek, descended, assortment, dripping, adoration, boats, routed, routed, strokes, cheerful, charleses, travellers, unsuspected, malingerer, respect, aback, vair', wraith, bare, creek, descended, assortment, flashed, adoration, boats, routed, routed, strokes, cheerful, charleses, travellers, unsuspected, malingerer, respect, aback, vair', wraith, bare, creek, descended, assortment, flashed, reputation, boats, routed, routed, strokes, cheerful, charleses, travellers, unsuspected, malingerer, respect, aback, vair', wraith, bare, creek, descended, assortment, flashed, reputation, guarded, routed, routed, strokes, cheerful, charleses, travellers, unsuspected, malingerer, respect, aback, vair', wraith, bare, creek, descended, assortment, flashed, reputation, guarded, tempers, routed, strokes, cheerful, charleses, travellers, unsuspected, malingerer, respect, aback, vair', wraith, bare, creek, descended, assortment, flashed, reputation, guarded, tempers, partnership, strokes, cheerful, charleses, travellers, unsuspected, malingerer, respect, aback, vair', wraith, bare, creek, descended, assortment, flashed, reputation, guarded, tempers, partnership, bare, cheerful, charleses, travellers, unsuspected, malingerer, respect, aback, vair', wraith, bare, creek, descended, assortment, flashed, reputation, guarded, tempers, partnership, bare, count, charleses, travellers, unsuspected, malingerer, respect, aback, vair', wraith, bare, creek, descended, assortment, flashed, reputation, guarded, tempers, partnership, bare, count, descended, travellers, unsuspected, malingerer, respect, aback, vair', wraith, bare, creek, 
descended, assortment, flashed, reputation, guarded, tempers, partnership, bare, count, descended, dashed, unsuspected, malingerer, respect, aback, vair', wraith, bare, creek, descended, assortment, flashed, reputation, guarded, tempers, partnership, bare, count, descended, dashed, ears, malingerer, respect, aback, vair', wraith, bare, creek, descended, assortment, flashed, reputation, guarded, tempers, partnership, bare, count, descended, dashed, ears, q,
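The words output looks like the same sampling, except that each value picks a token out of a word list (note how the same words recur wherever the function repeats a value), and the result is emitted as overlapping windows of `sequence_length` tokens that advance by `step`. Here's a sketch under those assumptions. `word_waveform` and `windows` are hypothetical names, the toy token list is made up, and reading `delta` as the x increment between rows is a guess from the config line.

```python
import math
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")


def word_waveform(func: Callable[[float], float], tokens: Sequence[str],
                  rows: int, delta: float) -> List[str]:
    """Hypothetical: sample func and scale each value to an index into tokens."""
    ys = [func(i * delta) for i in range(rows)]
    y_min, y_max = min(ys), max(ys)
    span = (y_max - y_min) or 1.0  # guard against a flat signal
    last = len(tokens) - 1
    # Equal function values always map to the same word
    return [tokens[int((y - y_min) / span * last)] for y in ys]


def windows(seq: Sequence[T], sequence_length: int, step: int) -> List[List[T]]:
    """Overlapping windows of sequence_length items, advancing by step."""
    return [list(seq[i:i + sequence_length])
            for i in range(0, len(seq) - sequence_length + 1, step)]


if __name__ == "__main__":
    def f(xx: float) -> float:
        return math.sin(xx) * math.sin(xx / 2.0) * math.cos(xx / 4.0)

    tokens = ["ant", "bee", "cat", "dog", "eel", "fox", "gnu", "hen"]  # toy list
    words = word_waveform(f, tokens, rows=100, delta=0.4)
    for seq in windows(words, sequence_length=20, step=1)[:3]:
        print(", ".join(seq))
```

With 100 rows, a window length of 20 and a step of 1, this yields 81 sequences, each one shifted a single word from the last, which matches the sliding pattern in the output above.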


- Started on LSTMs again, using this example based on Alice in Wonderland.

- Aaron and T were in all-day discussions with Kevin about NASA/NOAA, and I dropped in a few times. NASA is air-gapped, but you can bring code in and out; bringing code in requires a review.

- Call the Army BAA people. We need white paper templates and a response for Dr. Palazzolo.

- Finish and submit 810 reviews tonight. Done.

- This is important for the DARPA and Army BAAs: The geographic embedding of online echo chambers: Evidence from the Brexit campaign

- This study explores the geographic dependencies of echo-chamber communication on Twitter during the Brexit campaign. We review the evidence positing that online interactions lead to filter bubbles to test whether echo chambers are restricted to online patterns of interaction or are associated with physical, in-person interaction. We identify the location of users, estimate their partisan affiliation, and finally calculate the distance between sender and receiver of @-mentions and retweets. We show that polarized online echo-chambers map onto geographically situated social networks. More specifically, our results reveal that echo chambers in the Leave campaign are associated with geographic proximity and that the reverse relationship holds true for the Remain campaign. The study concludes with a discussion of primary and secondary effects arising from the interaction between existing physical ties and online interactions and argues that the collapsing of distances brought by internet technologies may foreground the role of geography within one’s social network.
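The abstract doesn't say how the sender-to-receiver distance was computed. One common choice for latitude/longitude pairs is great-circle distance via the haversine formula, so here's a minimal sketch of that, assuming each located user has a single (lat, lon) point. `haversine_km` and `edge_distances` are my hypothetical names, not the paper's.

```python
import math
from typing import Dict, Iterable, List, Tuple

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in km between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    # Clamp so rounding can never push the asin argument above 1
    return 2 * EARTH_RADIUS_KM * math.asin(min(1.0, math.sqrt(a)))


def edge_distances(edges: Iterable[Tuple[str, str]],
                   locations: Dict[str, Tuple[float, float]]) -> List[float]:
    """Distance for each (sender, receiver) pair, e.g. an @-mention or retweet.
    Pairs where either user has no location are skipped."""
    out = []
    for sender, receiver in edges:
        if sender in locations and receiver in locations:
            out.append(haversine_km(*locations[sender], *locations[receiver]))
    return out
```

This treats the Earth as a sphere, which is usually fine at the precision of user-level geolocation.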

- Also important:

- How to Write a Successful Level I DHAG Proposal

- The idea behind a Level I project is that it can be “high risk/high reward.” Put another way, we are looking for interesting, innovative, experimental, new ideas, even if they have a high potential to fail. It’s an opportunity to figure things out so you are better prepared to tackle a big project. Because of the relatively low dollar amount (no more than $50K), we are willing to take on more risk for an idea with lots of potential. By contrast, at the Level II and especially at the Level III, there is a much lower risk tolerance; the peer reviewers expect that you’ve already completed an earlier start-up or prototyping phase and will want you to convince them your project is ready to succeed.


- Tracing a Meme From the Internet’s Fringe to a Republican Slogan

- This feedback loop is how #JobsNotMobs came to be. In less than two weeks, the three-word phrase expanded from corners of the right-wing internet onto some of the most prominent political stages in the country, days before the midterm elections.

- Effectiveness of gaming for communicating and teaching climate change

- Games are increasingly proposed as an innovative way to convey scientific insights on the climate-economic system to students, non-experts, and the wider public. Yet, it is not clear if games can meet such expectations. We present quantitative evidence on the effectiveness of a simulation game for communicating and teaching international climate politics. We use a sample of over 200 students from Germany playing the simulation game KEEP COOL. We combine pre- and postgame surveys on climate politics with data on individual in-game decisions. Our key findings are that gaming increases the sense of personal responsibility, the confidence in politics for climate change mitigation, and makes more optimistic about international cooperation in climate politics. Furthermore, players that do cooperate less in the game become more optimistic about international cooperation but less confident about politics. These results are relevant for the design of future games, showing that effective climate games do not require climate-friendly in-game behavior as a winning condition. We conclude that simulation games can facilitate experiential learning about the difficulties of international climate politics and thereby complement both conventional communication and teaching methods.

- This reinforces my recent thinking that games may be a fourth, distinct form of human sociocultural communication.
