Writing Essays With AI: A Guide

- First, you don’t have to incorporate AI into your writing practice. If you want to keep writing longhand, that’s both fine and preferable in certain cases. The idea of this essay is to inspire you and help you experiment—not to give you the One True Way to write.

- Second, you should know that everyone—including me—is making this up as we go. It’s a whole new frontier, so there isn’t any standard or accepted way to write with these tools. All I can share is what I’ve seen work for me and other people.

- Third, there are many ways to misuse this tool to make crap. AI is not a panacea for lack of taste or bad intentions. If you’re skilled, though, you can use it to make stuff you love.

Tasks

- Send in AI Ethics review – done

- Send in Camera Ready – done. Minor hiccup

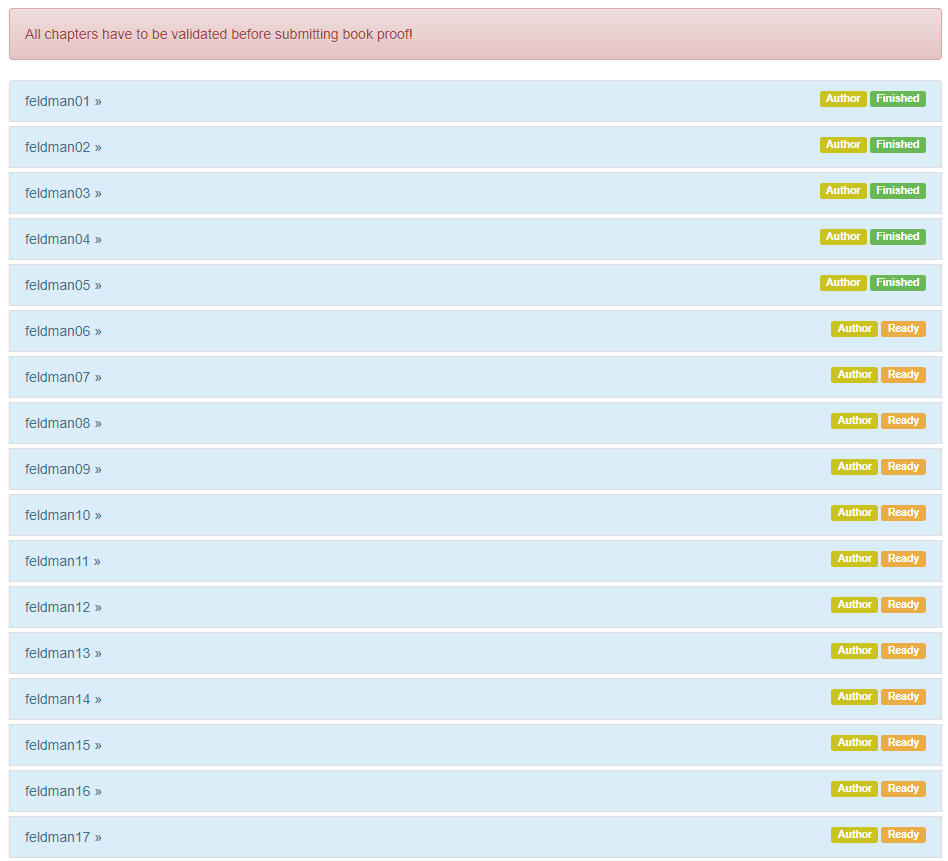

- Take care of book approvals – done? Found one problem

SBIRs

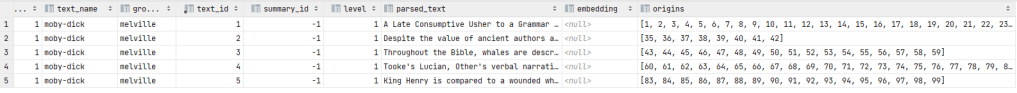

- To the summary table, add a project “source” field and an “origins” field that lists all the rows used to create the summary. These will accumulate as the summaries are combined. Also add a “level” field so the summary level is easy to see.

- Done! Everything works!

- Whoops! Not so fast, need to handle timeouts like with getting embeddings.

- I also think that all questions can be answered using the summary table. Should be faster and more useful

- 3:00 FOM meeting – Loren thinks we have some unusual data in the 25% runs. We’ll try that as holdout
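
For reference, here is a minimal sketch of how the “source”, “origins”, and “level” fields from the summary-table note could behave when summaries are combined. The `SummaryRow` class, the `combine` helper, and the choice to take the source from the first row are illustrative assumptions, not the actual table schema:

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class SummaryRow:
    """Hypothetical in-memory stand-in for one row of the summary table."""
    row_id: int
    text: str
    source: str                      # project the summary belongs to
    level: int = 1                   # 1 = built directly from raw rows
    origins: List[int] = field(default_factory=list)  # ids of contributing rows


def combine(rows: List[SummaryRow], new_id: int, new_text: str) -> SummaryRow:
    """Merge summaries into a higher-level one, accumulating their origins."""
    if not rows:
        raise ValueError("need at least one summary to combine")
    origins: List[int] = []
    for row in rows:
        for origin in row.origins:
            if origin not in origins:    # keep first-seen order, drop duplicates
                origins.append(origin)
    return SummaryRow(
        row_id=new_id,
        text=new_text,
        source=rows[0].source,           # assumption: all rows share one project
        level=max(r.level for r in rows) + 1,
        origins=origins,
    )
```

Because every combined row carries the full list of origins, any summary can be traced back to its raw rows without walking the intermediate levels.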

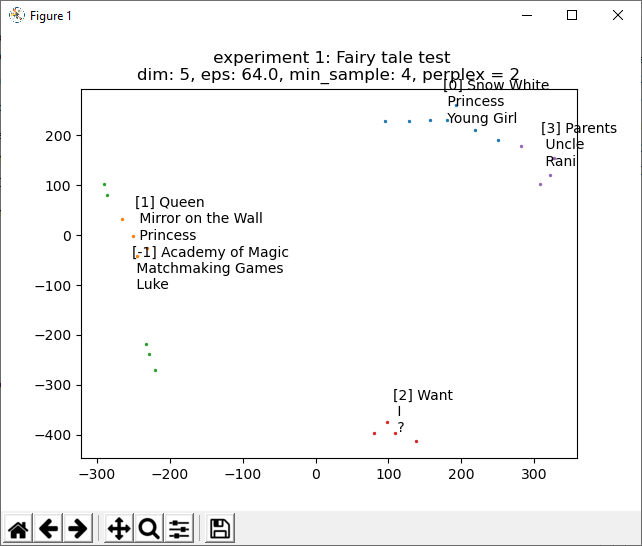
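
The timeout handling mentioned in the SBIR notes above could look roughly like this. It is a sketch only: `call_with_retries` is a hypothetical helper, and it assumes the request surfaces a timeout as Python's built-in `TimeoutError`, which may not match what the real client raises:

```python
import time


def call_with_retries(fn, max_tries=3, base_delay=1.0, sleep=time.sleep):
    """Call fn(), retrying with exponential backoff when it times out."""
    for attempt in range(max_tries):
        try:
            return fn()
        except TimeoutError:
            if attempt == max_tries - 1:
                raise                            # out of tries, let the caller see it
            sleep(base_delay * 2 ** attempt)     # wait 1x, 2x, 4x, ... base_delay
```

The same wrapper could be placed around both the embedding request and the summary request, so the two code paths share one retry policy.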

- Reworking NarrativeExplorer to use a GPT3GeneratorFrame and a GPT3EmbeddingFrame. First, it’s cleaner. Second, I should be able to re-use those components.
