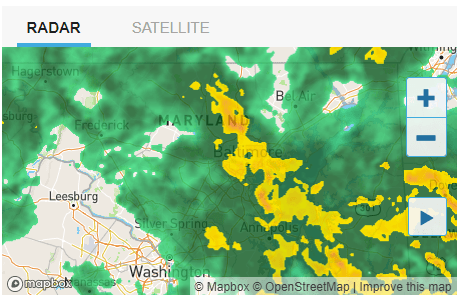

It is very wet today.

Spent far too much time trying to upload a picture to the graduation site. It appears to be broken.

D20

- Changed the CONTROLLED days to < 2, since things are generally looking better

ACSOS

- Sent the revised draft to Antonio

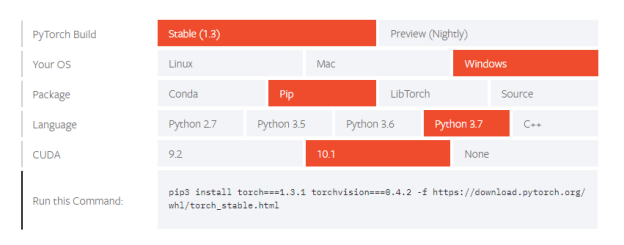

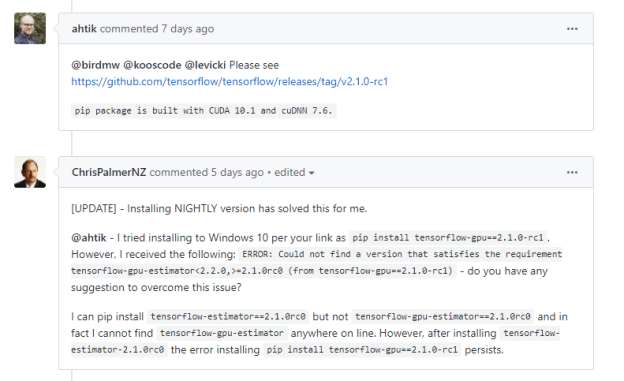

GPT-2 Agents

- Found what appears to be just what I’m looking for. Searching GitHub for “GPT-2 tensorflow” led me to this project, GPT-2 Client. I’ll give that a try and see how it works. The developer, Rishabh Anand, seems to have solid skills, so I have some hope that this could work. I do not have the energy to start this on a Friday and then switch to GANs for the rest of the day. Sunday looks like another wet one, so maybe then.

GOES

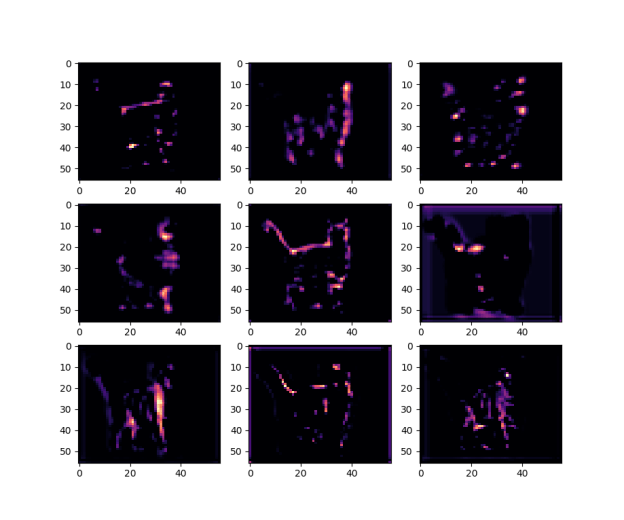

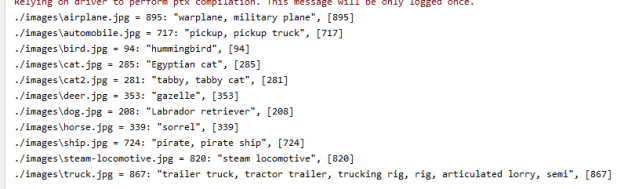

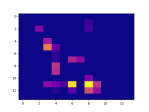

More looking at layers. This is ImageNet’s block3_conv3.

- Advanced CNNs

- Start GANs? Yes!

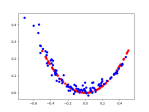

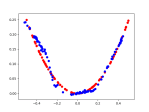

- Got this version working. Now I need to step through it. But here are some plots of it learning:

- I had dreams about this, so I’m going to record the thinking here:

- An MLP should be able to get from a simple simulation (a square wave) to a more accurate(?) simulation (a sine wave). The data set is built from queries into the DB at various start points and frequencies, with the matching (“real”/noisy) data as the test. My intuition is that the noise will be lost, so that’s the part we’re going to have to get back with the GAN.

- So I think there is a two-step process:

- Train the initial NN that will produce the generalized solution

- Use the output of the NN and the “real” data to train the GAN for fine-tuning
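To make the data side of that idea concrete, here is a minimal sketch of how the training pairs could be generated. It covers only the data set, not the MLP or the GAN. Every name in it (`square_wave`, `noisy_sine`, `make_pair`, `make_dataset`), the sample count, the time step, the frequency range, and the Gaussian noise model are assumptions made for illustration; the actual DB queries are not shown here.

```python
import math
import random


def square_wave(t, freq):
    """Simple simulation: +1 or -1, following the sign of the underlying sine."""
    return 1.0 if math.sin(2.0 * math.pi * freq * t) >= 0.0 else -1.0


def noisy_sine(t, freq, noise, rng):
    """Stand-in for the "real" data: a sine wave plus Gaussian noise (assumed)."""
    return math.sin(2.0 * math.pi * freq * t) + rng.gauss(0.0, noise)


def make_pair(start, freq, n_samples=32, dt=0.01, noise=0.1, rng=random):
    """One row: a square-wave input and the matching noisy-sine target."""
    times = [start + i * dt for i in range(n_samples)]
    x = [square_wave(t, freq) for t in times]
    y = [noisy_sine(t, freq, noise, rng) for t in times]
    return x, y


def make_dataset(n_rows, seed=0):
    """Rows built from random start points and frequencies (hypothetical ranges)."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n_rows):
        start = rng.uniform(0.0, 1.0)
        freq = rng.uniform(1.0, 5.0)
        rows.append(make_pair(start, freq, rng=rng))
    return rows


if __name__ == "__main__":
    x, y = make_dataset(4)[0]
    print(len(x), len(y))
```

In step one the MLP would be fit on the `x` to `y` mapping. If the intuition above holds, it should recover the smooth sine and average out the noise. Step two would then compare the MLP output with the noisy `y` rows when training the GAN.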
