7:00 – 7:00 VTX

1:00 – Patrick’s proposal

- Framing of problem and researcher

- Overview of the problem space

- Ready to Hand

- Extension of self

- Assistive technology abandonment

- Ease of Acquisition

- Device Performance

- Cost and Maintenance

- Stigma

- Alignment with lifestyles

- Prior Work

- Technology Use

- Methods Overview

- Formative User Needs

- Design Focus Groups

- Design Evaluation and Configuration Interviews

- Summary of Findings

- Priorities

- Maintain form factor

- Different controls for different regions

- Familiarity

- Robustness to environmental changes

- Potential of the wheelchair

- Nice diagram. Shows the mapping from a chair to a smartphone

- Inputs to wheelchair-mounted devices

- Force sensitive device, new gestures and insights

- Summary (This looks like research through design. Why no mention?)

- Prototypes

- Gestures

- Demonstration

- Proposed Work

- Passive Haptic Rehabilitation

- Can it be done

- How effective

- User perception

- Study design!!!

- Physical Activity and Athletic Performance

- Completed: Accessibility of fitness trackers. (None of this actually tracks to papers in the presentation)

- Body location and sensing

- Misperception

- Semi-structured interviews

- Low experience / High interest (Lack of system trust!)

- Chairable Computing for Basketball

- Research Methods

- Observations

- Semi-structured interviews

- Prototyping

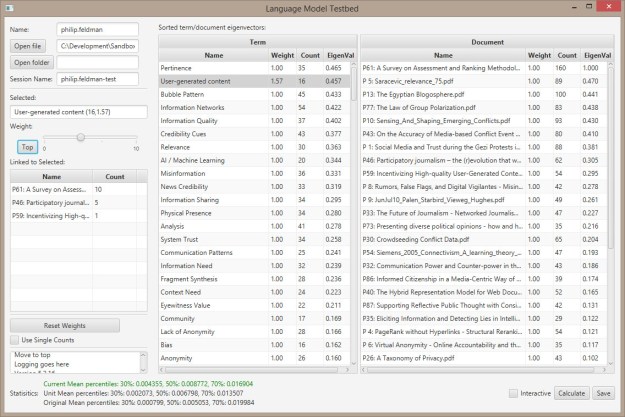

- Data presentation – how does one decide what they want from what is available?

- What is the problem – Helena

- Assistive technologies are not being designed right. We need to improve the design process.

- That’s too general – give me a citation that says that assistive technology WRT wheelchair use has high abandonment

- Patrick responds with a bad design

- Helena – isn’t the principle user-centered design? How has the HCI community done this before WRT areas other than wheelchairs for interacting with computing systems?

- Helena – Embodied interaction is not a new thing; this is just a new area. Why didn’t you group your work? Is the prior analysis not embodied? Is your prior work not aligned with this perspective?

- How were the design principles used to develop and refine the pressure sensors?

More Reading

- Creating Friction: Infrastructuring Civic Engagement in Everyday Life

- This is the confirming information bubble of the ‘ten blue links’: Because infrastructures reflect the standardization of practices, the social work they do is also political: “a number of significant political, ethical and social choices have without doubt been folded into its development” ([67]: 233). The further one is removed from the institutions of standardization, the more drastically one experiences the values embedded into infrastructure—a concept Bowker and Star term ‘torque’ [9]. More powerful actors are not as likely to experience torque as their values more often align with those embodied in the infrastructure. Infrastructures of civic engagement that are designed and maintained by those in power, then, tend to reflect the values and biases held by those in power.

- Meeting with Wayne. My hypothesis and research questions are backwards but otherwise good.
