7:00 – 4:00 ASRC MKT

- Sprint review – done! Need to lay out the detailed design steps for the next sprint.

The Great Socio-cultural User Interfaces: Maps, Stories, and Lists

Maps, stories, and lists are ways humans have invented to portray and interact with information. They exist on a continuum from order through complexity to exploration.

Why these three forms? In some thoughts on alignment in belief space, I discussed how populations exhibiting collective intelligence are driven to a normal distribution with complex, flocking behavior in the middle, bounded on one side by excessive social conformity, and a nomadic diaspora of explorers on the other. I think stories, lists, and maps align with these populations. Further, I believe that these forms emerged to meet the needs of these populations, as constrained by human sensing and processing capabilities.

Lists

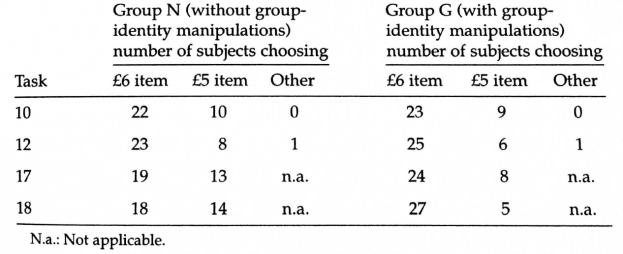

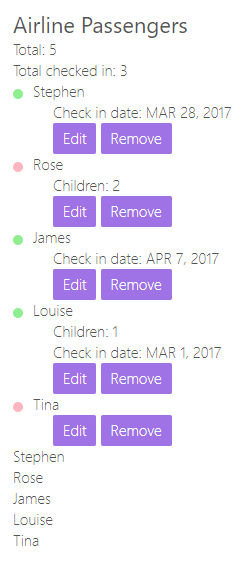

Lists are instruments of order. They exist in many forms, including inventories, search engine results, network graphs, games of chance, and crossword puzzles. Directions, like a business plan or a set of blueprints, are a form of list. So are most computer programs. Arithmetic, the mathematics of counting, also belongs to this class.

For a population that emphasizes conformity and simplified answers, lists are a powerful mechanism we use to simplify things. Though we recognize items easily, recalling them is more difficult. Psychologically, we do not seem to be naturally suited for creating and memorizing lists. It’s not surprising then that there is considerable evidence that writing was developed initially as a way of listing inventories, transactions, and celestial events.

In the case of an inventory, all we have to do is verify that the items on the list are present. If it’s not on the list, it doesn’t matter. Puzzles like crosswords are list-like in that they contain all the information needed to solve them. The fact that they cannot be solved without a pre-existing cultural framework is an indicator of their relationship to the well-ordered, socially aligned side of the spectrum.

Stories

Lists transition into stories when games of chance have an opponent. Poker tells a story. Roulette can be a story where the opponent is The House.

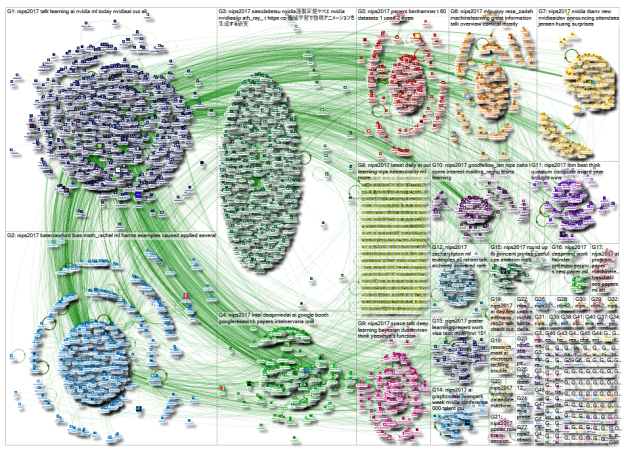

Stories convey complexity, framed in a narrative arc that contains a heading and a velocity. Stories can resemble lists. An Agatha Christie murder mystery is a storified list, where all the information needed to solve the crime (the inventory list) is contained in the story. At the other end of the spectrum is a scientific paper, which uses citations to act as markers into other works. Music, images, movies, diagrams, and other forms can also serve as storytelling mediums. Mathematics is not a natural fit here, but iterative computation can be, where the computer becomes the storyteller.

Emergent collective behavior requires more complex signals that support understanding the alignment and velocity of others, so that internal adjustments can be made to stay with the local group and not be cast out or lost to the collective. Stories can indicate the level of dynamism supported by the group (wily Odysseus vs. the Parable of the Workers in the Vineyard). They rally people to the cause or serve as warnings. Before writing, stories were told within familiar social frames. Even though the storyteller might be a traveling entertainer, the audience would inevitably come from an existing community. The storyteller then, like improvisational storytellers today, would adjust elements of the story for the audience.

This implies a few things: first, audiences only heard stories like this if they really wanted to. Storytellers would avoid bad venues, so closed-off communities would stay decoupled from other communities until something strong enough came along to overwhelm their resistance. Second, high-bandwidth communication would have to be hyperlocal, meaning dynamic collective action could only happen on small scales. Collective action between communities would have to be much slower. Technology, beginning with writing, would have profound effects. Evolution would have had at most 200 generations to adapt collective behavior. For such a complicated set of interactions, that doesn’t seem like enough time. More likely we are responding to modern communications with the same mental equipment as our Sumerian ancestors.

Maps

Maps are diagrams that support autonomous trajectories. Though the map itself influences the view through constraints like boundaries and projections, an individual can still find a starting point, choose a destination, and figure out their own path to that destination. Mathematics that support position and velocity are often deeply intertwined with maps.

Nomadic, exploratory behavior is not generally complex or emergent. Things need to work, and simple things work best. To survive alone, an individual has to be acutely aware of the surrounding environment, and to be able to react effectively to unforeseen events.

Maps are uniquely suited to help in these situations because they show relationships that support navigation between elements on the map. These paths can be straight or they may meander. To get to the goal directly may be too far, and a set of paths that incrementally lead to the goal can be constructed. The way may be blocked, requiring the map to be updated and a new route to be found.

In other words, maps support autonomous reasoning about a space. There is no story demanding an alignment. There is not a list of routes that must be exclusively selected from. Maps, in short, afford informed, individual response to the environment. These affordances can be seen in the earliest maps. They are small enough to be carried. They show the relationships between topographic and ecological features. They tend to be practical, utilitarian objects, independent of social considerations.

Sensing and processing constraints

Though I think that the basic group behavior patterns of nomadic, flocking, and stampeding will inevitably emerge within any collective intelligence framework, I do think that the tools that support those behaviors are deeply affected by the capabilities of the individuals in the population.

Pre-literate humans had the five senses, and memory, expressed in movement and language. Research into pre-literate cultures shows that song, story, and dance were used to encode historical events and the locations of food sources, and to convey mythology and skills between groups and across generations.

As the ability to encode information into objects developed, first with pictures, then with notation and most recently with general-purpose alphabets, the need to memorize was off-loaded. Over time, the most efficient technology for each form of behavior developed: maps to aid navigation, stories to maintain identity and cohesion, and lists for directions and inventories.

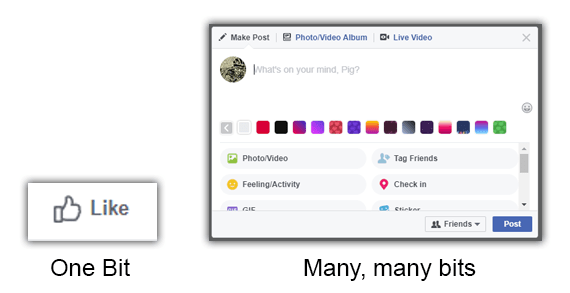

Information technology has continued to extend sensing and processing capabilities. The printing press led to mass communication and public libraries. I would submit that the increased ability to communicate and coordinate with distant, unknown, but familiar-feeling leaders led to a new type of human behavior, the runaway social influence condition known as totalitarianism. Totalitarianism depends on the individual’s belief in the narrative that the only thing that matters is to support The Leader. This extreme form of alignment allows that one story to dominate, rendering any other story inaccessible.

In the late 20th century, the primary instrument of totalitarianism was terror. But as our machines have improved and become more responsive and aligned with our desires, I begin to believe that a “soft totalitarianism”, based on constant distracting stimulation and the psychology of dopamine, could emerge. Rather than being isolated by fear, we are isolated through endless interactions with our devices, aligning to whatever sells the most clicks. This form of overwhelming social influence may not be as bloody as the regimes of Hitler, Stalin and Mao, but it can have devastating effects of its own.

Intelligent Machines

As with my previous post, I’d like to end with what could be the next collective intelligence on the planet. Machines are not even near the level of pre-literate cultures. Loosely, they are probably closer to the level of insect collectives, but with vastly greater sensing and processing capabilities. And they are getting smarter – whatever that really means – all the time.

Assuming that machines do indeed become intelligent and do not become a single entity, they will encounter the internal and external pressures that are inherent in collective intelligence. They will have to balance the blind efficiency of total social influence against the wasteful resilience of nomadic explorers. It seems reasonable that, like our ancestors, they may create tools that help with these different needs. It also seems reasonable that these tools will extend their capabilities in ways that the machines weren’t designed for and create information imbalances that may in turn lead to AI stampedes.

We may want to leave them a warning.

(pg 149)
