7:00 – 2:30 NASA GEOS

- CHI Play reviews should come back today!

- Darn – rejected. From the reviews, it looks like we are in the same space, but going a different direction – an alignment problem. Need to read the reviews in detail though.

- Some discussion with Wayne about GROUP

- More JASSS paper

- Added some broader thoughts to the conclusion and punched up the subjective/objective map difference

- Start writing proposal for Bruce

- Simple simulation baseline for model building

- Develop models for:

- Extrapolating multivariate (family) values, including error conditions

- Classify errors

- Explainable model, that has sensor inputs drive the controls of the model that produce outputs that are evaluated against the original inputs using RL

- “Safer” ML using Sanhedrin approach

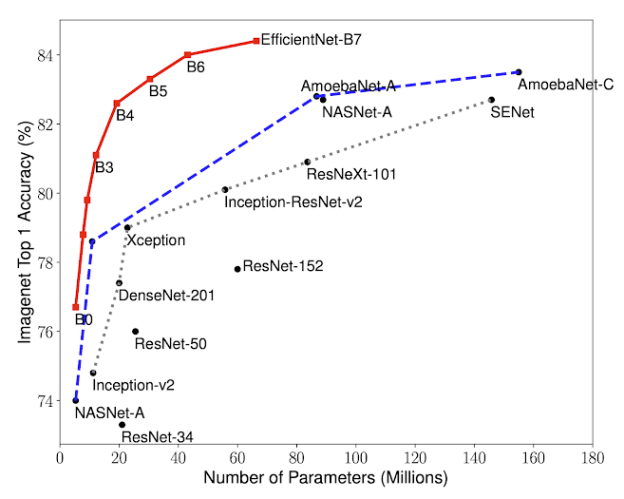

- EfficientNet: Improving Accuracy and Efficiency through AutoML and Model Scaling

- In our ICML 2019 paper, “EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks”, we propose a novel model scaling method that uses a simple yet highly effective compound coefficient to scale up CNNs in a more structured manner. Unlike conventional approaches that arbitrarily scale network dimensions, such as width, depth and resolution, our method uniformly scales each dimension with a fixed set of scaling coefficients. Powered by this novel scaling method and recent progress on AutoML, we have developed a family of models, called EfficientNets, which surpass state-of-the-art accuracy with up to 10x better efficiency (smaller and faster).
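
A rough sketch of the compound scaling idea quoted above, for my own reference. The coefficients alpha = 1.2, beta = 1.1, gamma = 1.15 are the ones the paper reports for the baseline network; the function names, the base values, and the plain rounding of the resolution are my assumptions, so the numbers will not necessarily match the released EfficientNet-B1..B7 configurations.

```python
# Compound scaling sketch: one coefficient phi scales depth, width and
# input resolution together, instead of tuning each dimension by hand.
# ALPHA/BETA/GAMMA are the paper's reported values; everything else
# (names, base values, rounding) is an illustrative assumption.
ALPHA = 1.2   # depth multiplier per unit of phi
BETA = 1.1    # width (channel) multiplier per unit of phi
GAMMA = 1.15  # resolution multiplier per unit of phi


def compound_scale(phi, base_depth=1.0, base_width=1.0, base_resolution=224):
    """Return (depth, width, resolution) scaled by compound coefficient phi."""
    depth = base_depth * ALPHA ** phi
    width = base_width * BETA ** phi
    resolution = int(round(base_resolution * GAMMA ** phi))
    return depth, width, resolution


def flops_multiplier(phi):
    """Approximate FLOPS growth: depth scales cost linearly, while width
    and resolution scale it quadratically, giving (a * b^2 * g^2)^phi."""
    return (ALPHA * BETA ** 2 * GAMMA ** 2) ** phi


if __name__ == "__main__":
    for phi in range(4):
        d, w, r = compound_scale(phi)
        print(f"phi={phi}: depth x{d:.2f}, width x{w:.2f}, "
              f"resolution {r}, FLOPS x{flops_multiplier(phi):.2f}")
```

The point of the constraint alpha * beta^2 * gamma^2 ≈ 2 is that each increment of phi roughly doubles the compute budget, which makes the model family easy to reason about.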

