7:00 – 4:30 ASRC GOES

- Dissertation – Working on the Orientation section, where I compare Moby Dick to Dieselgate

- Uninstalling all previous versions of CUDA, which should hopefully allow 10 to be installed

- Still flailing on getting TF 2.0 working. Grrrrr. Success! Added guide below

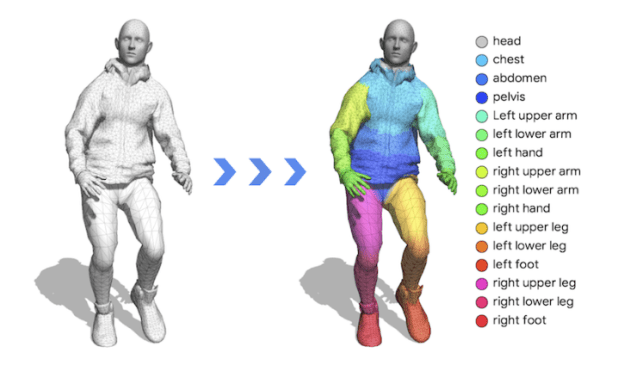

- Spent some time discussing mapping the GPT-2 with Aaron

Installing TensorFlow 2.0rc1 on Windows 10, a temporarily accurate guide

- Uninstall any previous version of Tensorflow (e.g. “pip uninstall tensorflow”)

- Uninstall all your NVIDIA crap

- Install JUST THE CUDA LIBRARIES for version 9.0 and 10.0. You don’t need anything else

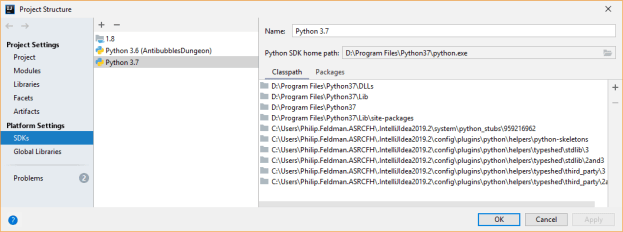

- Then install the latest Nvidia graphics drivers. When you’re done, your install should look something like this (this worked on 9.3.19):

Edit your system variables so that the CUDA 9 and CUDA 10 directories are on your path:
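A quick way to confirm the edit took effect is to list the PATH entries that mention CUDA from a freshly opened console. This is only a minimal sketch: the helper name `cuda_path_entries` is made up for illustration, and it assumes the toolkit directories contain the text "CUDA" (as the default `NVIDIA GPU Computing Toolkit\CUDA` location does).

```python
import os


def cuda_path_entries(path_value, needle="cuda"):
    """Return the PATH entries whose text contains `needle` (case-insensitive).

    Hypothetical helper: it only inspects the PATH string and does not
    check that the directories actually exist.
    """
    entries = [entry.strip() for entry in path_value.split(os.pathsep)]
    return [entry for entry in entries if entry and needle.lower() in entry.lower()]


if __name__ == "__main__":
    # Print one matching entry per line; no output means nothing matched.
    for entry in cuda_path_entries(os.environ.get("PATH", "")):
        print(entry)
```

If nothing prints, the console was probably opened before the variables were changed, or the directories were added under a different name.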

One more part is needed from NVIDIA: cudnn64_7.dll

In order to download cuDNN, ensure you are registered for the NVIDIA Developer Program.

- Go to: NVIDIA cuDNN home page

- Click “Download”.

- Remember to accept the Terms and Conditions.

- Select the cuDNN version you want to install from the list. This opens up a second list of target OS installs. Select cuDNN Library for Windows 10.

- Extract the cuDNN archive to a directory of your choice. The important part (cudnn64_7.dll) is in the cuda\bin directory. Either add that directory to your path, or copy the DLL into the Program Files\NVIDIA GPU Computing Toolkit\CUDA\v10\bin directory.
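Whichever option you pick, it is worth checking that the DLL can actually be found through the path. The following is a rough sketch rather than anything official: `dirs_containing` is a hypothetical helper that walks the PATH entries and reports the directories holding a given file.

```python
import os


def dirs_containing(filename, path_value):
    """Return every PATH directory that holds a file called `filename`."""
    hits = []
    for entry in path_value.split(os.pathsep):
        # PATH entries are sometimes wrapped in quotes; strip them first.
        entry = entry.strip().strip('"')
        if entry and os.path.isfile(os.path.join(entry, filename)):
            hits.append(entry)
    return hits


if __name__ == "__main__":
    # cudnn64_7.dll is the file named in this guide; adjust for other versions.
    found = dirs_containing("cudnn64_7.dll", os.environ.get("PATH", ""))
    print(found if found else "cudnn64_7.dll is not on the PATH")
```

The same helper can be pointed at the other libraries named in the log output, such as cudart64_100.dll or cublas64_100.dll.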

Then open up a console window (cmd) as admin, and install tensorflow:

- pip install tensorflow-gpu==2.0.0-rc1

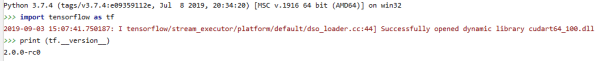

- Verify that it works by opening the Python console and typing the following:
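One possible check for this step, offered as a sketch under assumptions rather than the exact commands from the original session: import TensorFlow, print its version, and count the GPUs it can see. The function name `gpu_report` is invented here; `tf.config.experimental.list_physical_devices` is the TensorFlow 2.0 call for listing devices.

```python
def gpu_report():
    """Return a one-line summary of the TensorFlow install."""
    try:
        import tensorflow as tf
    except ImportError as err:
        # On Windows a missing CUDA or cuDNN DLL also surfaces as an ImportError.
        return "TensorFlow import failed: {}".format(err)
    # In TF 2.0 the device listing lives under tf.config.experimental.
    gpus = tf.config.experimental.list_physical_devices("GPU")
    return "tf version = {}, GPUs visible = {}".format(tf.__version__, len(gpus))


print(gpu_report())
```

A GPU count of zero with a clean import usually points back at the path or cuDNN steps above.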

If that works, the following script should run as well:

    import tensorflow as tf

    print("tf version = {}".format(tf.__version__))

    # Load MNIST and scale the pixel values from 0-255 down to 0-1
    mnist = tf.keras.datasets.mnist
    (x_train, y_train), (x_test, y_test) = mnist.load_data()
    x_train, x_test = x_train / 255.0, x_test / 255.0

    # Small fully connected classifier for the 28x28 digit images
    model = tf.keras.models.Sequential([
        tf.keras.layers.Flatten(input_shape=(28, 28)),
        tf.keras.layers.Dense(128, activation='relu'),
        tf.keras.layers.Dropout(0.2),
        tf.keras.layers.Dense(10, activation='softmax')
    ])

    model.compile(optimizer='adam',
                  loss='sparse_categorical_crossentropy',
                  metrics=['accuracy'])

    # Train for five epochs, then score against the held-out test set
    model.fit(x_train, y_train, epochs=5)
    model.evaluate(x_test, y_test)

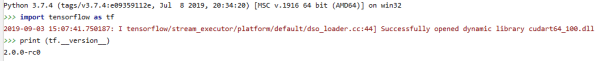

The results should look something like:

"D:\Program Files\Python37\python.exe" D:/Development/Sandboxes/PyBullet/src/TensorFlow/HelloWorld.py

2019-09-03 15:09:56.685476: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library cudart64_100.dll

tf version = 2.0.0-rc0

2019-09-03 15:09:59.272748: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library nvcuda.dll

2019-09-03 15:09:59.372341: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1618] Found device 0 with properties:

name: TITAN X (Pascal) major: 6 minor: 1 memoryClockRate(GHz): 1.531

pciBusID: 0000:01:00.0

2019-09-03 15:09:59.372616: I tensorflow/stream_executor/platform/default/dlopen_checker_stub.cc:25] GPU libraries are statically linked, skip dlopen check.

2019-09-03 15:09:59.373339: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1746] Adding visible gpu devices: 0

2019-09-03 15:09:59.373671: I tensorflow/core/platform/cpu_feature_guard.cc:142] Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX2

2019-09-03 15:09:59.376010: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1618] Found device 0 with properties:

name: TITAN X (Pascal) major: 6 minor: 1 memoryClockRate(GHz): 1.531

pciBusID: 0000:01:00.0

2019-09-03 15:09:59.376291: I tensorflow/stream_executor/platform/default/dlopen_checker_stub.cc:25] GPU libraries are statically linked, skip dlopen check.

2019-09-03 15:09:59.376996: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1746] Adding visible gpu devices: 0

2019-09-03 15:09:59.951116: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1159] Device interconnect StreamExecutor with strength 1 edge matrix:

2019-09-03 15:09:59.951317: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1165] 0

2019-09-03 15:09:59.951433: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1178] 0: N

2019-09-03 15:09:59.952189: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1304] Created TensorFlow device (/job:localhost/replica:0/task:0/device:GPU:0 with 9607 MB memory) -> physical GPU (device: 0, name: TITAN X (Pascal), pci bus id: 0000:01:00.0, compute capability: 6.1)

Train on 60000 samples

Epoch 1/5

2019-09-03 15:10:00.818650: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library cublas64_100.dll

32/60000 [..............................] - ETA: 17:07 - loss: 2.4198 - accuracy: 0.0938

736/60000 [..............................] - ETA: 48s - loss: 1.7535 - accuracy: 0.4891

1696/60000 [..............................] - ETA: 22s - loss: 1.2584 - accuracy: 0.6515

2560/60000 [>.............................] - ETA: 16s - loss: 1.0503 - accuracy: 0.7145

3552/60000 [>.............................] - ETA: 12s - loss: 0.9017 - accuracy: 0.7531

4352/60000 [=>............................] - ETA: 10s - loss: 0.8156 - accuracy: 0.7744

5344/60000 [=>............................] - ETA: 9s - loss: 0.7407 - accuracy: 0.7962

6176/60000 [==>...........................] - ETA: 8s - loss: 0.7069 - accuracy: 0.8039

7040/60000 [==>...........................] - ETA: 7s - loss: 0.6669 - accuracy: 0.8134

8032/60000 [===>..........................] - ETA: 6s - loss: 0.6285 - accuracy: 0.8236

8832/60000 [===>..........................] - ETA: 6s - loss: 0.6037 - accuracy: 0.8291

9792/60000 [===>..........................] - ETA: 6s - loss: 0.5823 - accuracy: 0.8356

10656/60000 [====>.........................] - ETA: 5s - loss: 0.5621 - accuracy: 0.8410

11680/60000 [====>.........................] - ETA: 5s - loss: 0.5434 - accuracy: 0.8453

12512/60000 [=====>........................] - ETA: 5s - loss: 0.5311 - accuracy: 0.8485

13376/60000 [=====>........................] - ETA: 4s - loss: 0.5144 - accuracy: 0.8534

14496/60000 [======>.......................] - ETA: 4s - loss: 0.4997 - accuracy: 0.8580

15296/60000 [======>.......................] - ETA: 4s - loss: 0.4894 - accuracy: 0.8609

16224/60000 [=======>......................] - ETA: 4s - loss: 0.4792 - accuracy: 0.8634

17120/60000 [=======>......................] - ETA: 4s - loss: 0.4696 - accuracy: 0.8664

17888/60000 [=======>......................] - ETA: 3s - loss: 0.4595 - accuracy: 0.8690

18752/60000 [========>.....................] - ETA: 3s - loss: 0.4522 - accuracy: 0.8711

19840/60000 [========>.....................] - ETA: 3s - loss: 0.4434 - accuracy: 0.8738

20800/60000 [=========>....................] - ETA: 3s - loss: 0.4356 - accuracy: 0.8756

21792/60000 [=========>....................] - ETA: 3s - loss: 0.4293 - accuracy: 0.8776

22752/60000 [==========>...................] - ETA: 3s - loss: 0.4226 - accuracy: 0.8794

23712/60000 [==========>...................] - ETA: 3s - loss: 0.4179 - accuracy: 0.8808

24800/60000 [===========>..................] - ETA: 2s - loss: 0.4111 - accuracy: 0.8827

26080/60000 [============>.................] - ETA: 2s - loss: 0.4029 - accuracy: 0.8849

27264/60000 [============>.................] - ETA: 2s - loss: 0.3981 - accuracy: 0.8864

28160/60000 [=============>................] - ETA: 2s - loss: 0.3921 - accuracy: 0.8882

29408/60000 [=============>................] - ETA: 2s - loss: 0.3852 - accuracy: 0.8902

30432/60000 [==============>...............] - ETA: 2s - loss: 0.3809 - accuracy: 0.8916

31456/60000 [==============>...............] - ETA: 2s - loss: 0.3751 - accuracy: 0.8932

32704/60000 [===============>..............] - ETA: 2s - loss: 0.3707 - accuracy: 0.8946

33760/60000 [===============>..............] - ETA: 1s - loss: 0.3652 - accuracy: 0.8959

34976/60000 [================>.............] - ETA: 1s - loss: 0.3594 - accuracy: 0.8975

35968/60000 [================>.............] - ETA: 1s - loss: 0.3555 - accuracy: 0.8984

37152/60000 [=================>............] - ETA: 1s - loss: 0.3509 - accuracy: 0.8998

38240/60000 [==================>...........] - ETA: 1s - loss: 0.3477 - accuracy: 0.9006

39232/60000 [==================>...........] - ETA: 1s - loss: 0.3442 - accuracy: 0.9015

40448/60000 [===================>..........] - ETA: 1s - loss: 0.3393 - accuracy: 0.9030

41536/60000 [===================>..........] - ETA: 1s - loss: 0.3348 - accuracy: 0.9042

42752/60000 [====================>.........] - ETA: 1s - loss: 0.3317 - accuracy: 0.9049

43840/60000 [====================>.........] - ETA: 1s - loss: 0.3288 - accuracy: 0.9059

44992/60000 [=====================>........] - ETA: 1s - loss: 0.3255 - accuracy: 0.9069

46016/60000 [======================>.......] - ETA: 0s - loss: 0.3230 - accuracy: 0.9077

47104/60000 [======================>.......] - ETA: 0s - loss: 0.3203 - accuracy: 0.9085

48288/60000 [=======================>......] - ETA: 0s - loss: 0.3174 - accuracy: 0.9091

49248/60000 [=======================>......] - ETA: 0s - loss: 0.3155 - accuracy: 0.9098

50208/60000 [========================>.....] - ETA: 0s - loss: 0.3131 - accuracy: 0.9105

51104/60000 [========================>.....] - ETA: 0s - loss: 0.3111 - accuracy: 0.9111

52288/60000 [=========================>....] - ETA: 0s - loss: 0.3085 - accuracy: 0.9117

53216/60000 [=========================>....] - ETA: 0s - loss: 0.3066 - accuracy: 0.9121

54176/60000 [==========================>...] - ETA: 0s - loss: 0.3043 - accuracy: 0.9128

55328/60000 [==========================>...] - ETA: 0s - loss: 0.3018 - accuracy: 0.9135

56320/60000 [===========================>..] - ETA: 0s - loss: 0.2995 - accuracy: 0.9141

57440/60000 [===========================>..] - ETA: 0s - loss: 0.2980 - accuracy: 0.9143

58400/60000 [============================>.] - ETA: 0s - loss: 0.2961 - accuracy: 0.9148

59552/60000 [============================>.] - ETA: 0s - loss: 0.2941 - accuracy: 0.9154

60000/60000 [==============================] - 4s 65us/sample - loss: 0.2930 - accuracy: 0.9158

... epochs pass ...

10000/1 [==========] - 1s 61us/sample - loss: 0.0394 - accuracy: 0.9778
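The lines that matter in that output are the "Successfully opened dynamic library" messages and the "Created TensorFlow device" message that names a GPU. As a rough sketch (the function name `summarize_log` is hypothetical, and the matching is keyed to the message wording shown above), a saved copy of the console output could be scanned like this:

```python
import re

# Matches the dso_loader lines and captures the library file name.
LIBRARY_LINE = re.compile(r"Successfully opened dynamic library (\S+)")


def summarize_log(log_text):
    """Return (sorted unique library names, True if a GPU device was created)."""
    libraries = sorted(set(LIBRARY_LINE.findall(log_text)))
    gpu_created = "Created TensorFlow device" in log_text and "device:GPU:" in log_text
    return libraries, gpu_created


if __name__ == "__main__":
    sample = "\n".join([
        "I tensorflow/...dso_loader.cc:44] Successfully opened dynamic library cudart64_100.dll",
        "I tensorflow/...gpu_device.cc:1304] Created TensorFlow device "
        "(/job:localhost/replica:0/task:0/device:GPU:0 with 9607 MB memory)",
    ])
    print(summarize_log(sample))
```

If the GPU flag comes back False, TensorFlow is most likely running on the CPU.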
