ASRC GOES 7:00 – 4:30

- Dissertation

- Conclusions – starting the hypothesis section

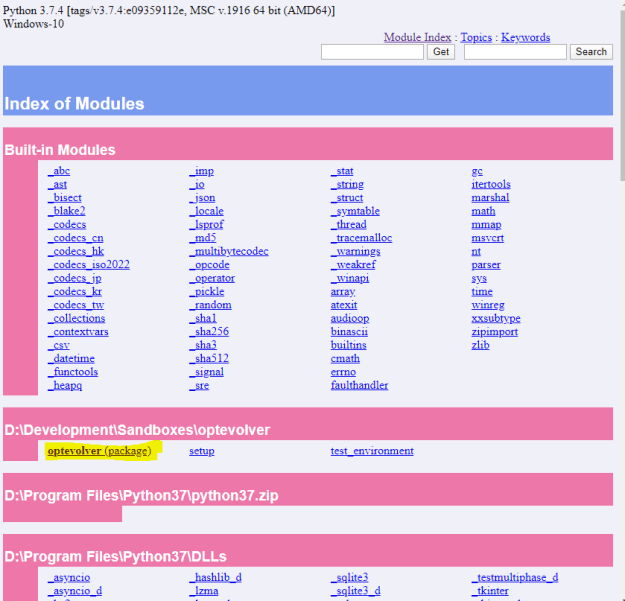

- Evolver

- Finish documentation

- Add session_clear() to TF2OptimizationTest

- Deploy to PyPI

- Followed a mix of these directions:

- How to upload your python package to PyPi

- How to Publish an Open-Source Python Package to PyPI

- Packaging and distributing projects

- The legacy test upload doesn’t work, but `twine upload dist/*` did create the project. And optevolver is on PyPI!

- Need to set the develop branch as the main branch on Monday.
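For reference, the usual build-check-upload sequence can be wrapped in a small shell function. This is a sketch, not the exact steps followed above: the function name `publish` is hypothetical, and it assumes the `build` and `twine` packages are installed (`pip install build twine`) and that index credentials, such as an API token, are already configured. TestPyPI is a separate index with its own accounts, which is one possible reason a test upload fails while the real upload succeeds.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the package and upload it to a package index.
# Assumes `pip install build twine` and that credentials are already set up.
publish() {
  # Index name as understood by twine: "testpypi" or "pypi" (the default here).
  local repo="${1:-pypi}"
  # Start from a clean dist/ so stale archives are not uploaded again.
  rm -rf dist/
  # Build the source distribution and wheel into dist/.
  python -m build || return 1
  # Check that the package metadata (e.g. the long description) will render.
  twine check dist/* || return 1
  # Upload everything in dist/ to the chosen index.
  twine upload --repository "$repo" dist/*
}
```

Usage: `publish testpypi` to try the test index first, then `publish` for the real upload. Cleaning `dist/` first matters because `twine upload dist/*` uploads every archive in the directory, and PyPI rejects files that were already uploaded for a given version.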

- AIMS NextGen

- Isaac made really nice progress on the Sim + ML plan

- Sent him the schedule
