7:00 – 4:30 ASRC

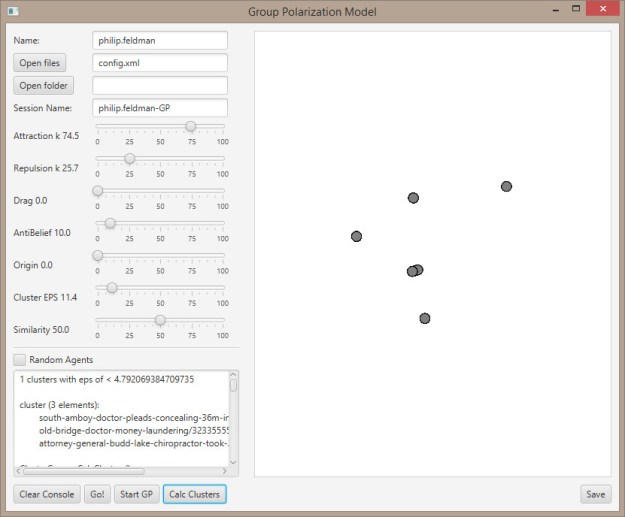

- Had some thoughts last night about how flocking at different scales in Hilbert space might work: flocks built upon flocks. There is some equivalent of mass and velocity, where mass might be influence (positive and negative attraction) and velocity is related to how fast beliefs change.

- Also thought about maps some more, weather maps in particular. A weather map maintains a coordinate frame even though nothing in that frame is stable. Something like this, with a sense of history (playback of the last X years), could provide an interesting framework for visualization.

- Continuing the Novelty Learning via Collaborative Proximity Filtering review. Done! Now I need to submit both.

- Adding StrVec to the ARFF outputs – done

- Starting this tutorial on Nonnegative Matrix Factorization

- These slides are also very nice

- Working on building JSON files for loading CI

- Meeting about Healthdatapalooza
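The flocking-in-Hilbert-space note can be made a little more concrete with a toy model. What follows is a minimal sketch under several assumptions that are not from the note itself. A finite-dimensional vector space stands in for Hilbert space. Each agent's signed scalar `influence` plays the role of "mass" (positive attracts, negative repels). Velocity is the per-tick change in an agent's belief position. The update is a Boids-style rule in which every agent accelerates along the unit vector toward each other agent, scaled by that agent's influence. "Flocks built upon flocks" is approximated by `coarsen`, which collapses labelled sub-flocks into single higher-level agents whose mass is their summed influence. `step`, `coarsen`, the damping factor, and the speed cap are all hypothetical choices made for illustration.

```python
import math
import random


def step(pos, vel, influence, dt=0.1, damping=0.9, max_speed=1.0):
    """Advance a hypothetical influence-weighted flock by one tick.

    pos, vel: lists of n vectors (belief positions and velocities).
    influence: list of n signed "masses"; positive pulls others in, negative pushes them away.
    All agents are updated synchronously from the old positions.
    """
    n, d = len(pos), len(pos[0])
    new_pos, new_vel = [], []
    for i in range(n):
        acc = [0.0] * d
        for j in range(n):
            if i == j:
                continue
            diff = [pos[j][k] - pos[i][k] for k in range(d)]
            dist = math.sqrt(sum(x * x for x in diff))
            if dist == 0:
                continue  # coincident agents exert no directional pull
            for k in range(d):
                # Unit vector toward j, scaled by j's influence, averaged over the flock.
                acc[k] += influence[j] * diff[k] / dist / n
        v = [damping * vel[i][k] + dt * acc[k] for k in range(d)]
        speed = math.sqrt(sum(x * x for x in v))
        if speed > max_speed:  # cap how fast a belief can change per tick
            v = [x * max_speed / speed for x in v]
        new_vel.append(v)
        new_pos.append([pos[i][k] + dt * v[k] for k in range(d)])
    return new_pos, new_vel


def mean_vec(rows):
    """Component-wise mean of a list of equal-length vectors."""
    return [sum(col) / len(rows) for col in zip(*rows)]


def coarsen(pos, vel, influence, labels):
    """Collapse each labelled sub-flock into one higher-level agent.

    The new agent sits at the sub-flock's mean position, moves with its mean
    velocity, and carries the sub-flock's summed influence as its "mass".
    """
    top_pos, top_vel, top_inf = [], [], []
    for g in sorted(set(labels)):
        idx = [i for i, lab in enumerate(labels) if lab == g]
        top_pos.append(mean_vec([pos[i] for i in idx]))
        top_vel.append(mean_vec([vel[i] for i in idx]))
        top_inf.append(sum(influence[i] for i in idx))
    return top_pos, top_vel, top_inf


# Usage: 20 agents in a 5-dimensional belief space, a mix of attractors and repellers.
random.seed(0)
pos = [[random.gauss(0, 1) for _ in range(5)] for _ in range(20)]
vel = [[0.0] * 5 for _ in range(20)]
influence = [random.choice([1.0, -0.5]) for _ in range(20)]
for _ in range(50):
    pos, vel = step(pos, vel, influence)

# Hypothetical grouping; in practice the labels might come from clustering the agents.
labels = [i % 4 for i in range(20)]
top_pos, top_vel, top_inf = coarsen(pos, vel, influence, labels)
# The coarse agents can be stepped with the same rule: a flock of flocks.
top_pos, top_vel = step(top_pos, top_vel, top_inf)
```

Reusing the same `step` rule at the coarse level is just the simplest way to express "flocks built upon flocks". A different scale could plausibly warrant different damping or speed limits.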
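As a reference point for the Nonnegative Matrix Factorization tutorial, here is a small pure-Python sketch of one common NMF algorithm: the Lee and Seung multiplicative updates, which minimize the squared Frobenius error between a non-negative matrix V and the product W·H while keeping both factors non-negative. This is not necessarily the formulation the tutorial uses. The helper names, random initialization, iteration count, and toy matrix are illustrative assumptions.

```python
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    bt = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in bt] for row in a]


def transpose(a):
    return [list(col) for col in zip(*a)]


def nmf(v, rank, iters=200, eps=1e-9, seed=0):
    """Factor non-negative V (m x n) as W (m x rank) times H (rank x n).

    Lee & Seung multiplicative updates for ||V - WH||^2:
      H <- H * (W^T V) / (W^T W H)
      W <- W * (V H^T) / (W H H^T)
    Every factor in the update is non-negative, so W and H stay non-negative.
    eps guards against division by zero.
    """
    rng = random.Random(seed)
    m, n = len(v), len(v[0])
    w = [[rng.random() for _ in range(rank)] for _ in range(m)]
    h = [[rng.random() for _ in range(n)] for _ in range(rank)]
    for _ in range(iters):
        wt = transpose(w)
        num, den = matmul(wt, v), matmul(matmul(wt, w), h)
        h = [[h[i][j] * num[i][j] / (den[i][j] + eps) for j in range(n)] for i in range(rank)]
        ht = transpose(h)
        num, den = matmul(v, ht), matmul(w, matmul(h, ht))
        w = [[w[i][j] * num[i][j] / (den[i][j] + eps) for j in range(rank)] for i in range(m)]
    return w, h


def frobenius_error(v, w, h):
    """Squared Frobenius norm of V - WH."""
    wh = matmul(w, h)
    return sum((v[i][j] - wh[i][j]) ** 2 for i in range(len(v)) for j in range(len(v[0])))


# Usage: build an exactly rank-2 non-negative matrix and try to recover a factorization.
w0 = [[1, 0], [0, 1], [1, 1], [2, 1]]
h0 = [[1, 2, 0, 1], [0, 1, 3, 1]]
v = matmul(w0, h0)
w, h = nmf(v, rank=2, iters=500)
err = frobenius_error(v, w, h)
```

The recovered W and H need not match `w0` and `h0`: NMF is only unique up to scaling and permutation of the components, so comparing the reconstruction error is the more meaningful check.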
