7:00 – 5:00 ASRC IRAD

- Got the parser to the point that it’s creating query strings, but I need to escape the text properly

- Created an ab_slack MySQL db

- Added a “parent_id” and an auto-increment ID to any of the arrays associated with the Slack data

- Reviewing sections 1-3 – done

- Figure out some past performance – done

- Work on the CV. Add the GF work and A2P ML work. – done
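The escaping and parent_id items above can be sketched roughly as follows. This is a minimal sketch that makes a few assumptions. First, the Slack data arrives as Python dicts. Second, the standard-library `sqlite3` module stands in for the ab_slack MySQL db. Third, the table names, columns, and the `store_message` helper are hypothetical. Instead of escaping text by hand, the sketch passes values as query parameters so the driver does the quoting. Each entry in a nested array gets its own auto-increment row that points back to its message through `parent_id`.

```python
import sqlite3

# Hypothetical Slack message; the nested "reactions" array becomes child rows.
message = {
    "ts": "1526000000.000100",
    "user": "U123",
    "text": "It's a \"quoted\" test; DROP TABLE messages; --",
    "reactions": [
        {"name": "thumbsup", "count": 2},
        {"name": "eyes", "count": 1},
    ],
}

# In-memory SQLite used as a stand-in for the MySQL database (assumption).
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE messages (
    id INTEGER PRIMARY KEY AUTOINCREMENT,
    ts TEXT,
    user_id TEXT,
    text TEXT
);
CREATE TABLE reactions (
    id INTEGER PRIMARY KEY AUTOINCREMENT,
    parent_id INTEGER REFERENCES messages(id),
    name TEXT,
    reaction_count INTEGER
);
""")


def store_message(conn, msg):
    """Insert one message plus its reactions; return the message's row id."""
    # Placeholders let the driver quote the text, so no manual escaping is needed.
    cur = conn.execute(
        "INSERT INTO messages (ts, user_id, text) VALUES (?, ?, ?)",
        (msg["ts"], msg["user"], msg["text"]),
    )
    parent_id = cur.lastrowid  # auto-increment id of the row just inserted
    # Each array element becomes its own row that references the parent message.
    conn.executemany(
        "INSERT INTO reactions (parent_id, name, reaction_count) VALUES (?, ?, ?)",
        [(parent_id, r["name"], r["count"]) for r in msg.get("reactions", [])],
    )
    conn.commit()
    return parent_id


msg_id = store_message(conn, message)
print(conn.execute("SELECT text FROM messages WHERE id = ?", (msg_id,)).fetchone()[0])
print(conn.execute("SELECT parent_id, name FROM reactions ORDER BY id").fetchall())
# → [(1, 'thumbsup'), (1, 'eyes')]
```

The same pattern applies with a MySQL driver such as PyMySQL, with two differences: the placeholders are `%s` instead of `?`, and the column option is spelled `AUTO_INCREMENT`.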

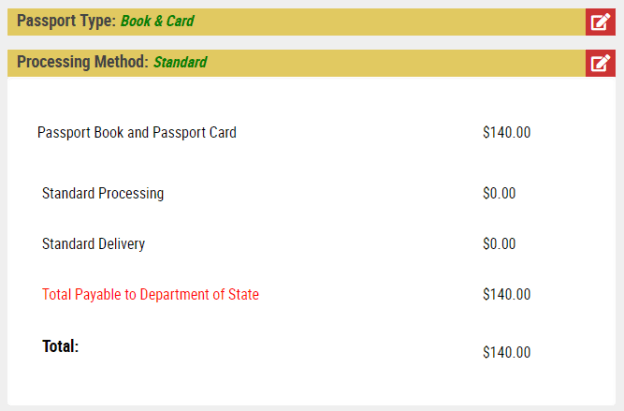

- Start reimbursement for NJ trip

- Accidentally managed to start a $45/month subscription to the IEEE digital library. It really reeks of deceptive practices. Nothing on the subscription page tells you that this is a $45/month purchase with a 6-month minimum. I’m about to contact the Maryland deceptive practices people to see whether legal action can be brought.
