Listening to the On Being interview with angel Kyodo williams

We are in this amazing moment of evolving, where the values of some of us are evolving at rates that are faster than can be taken in and integrated for peoples that are oriented by place and the work that they’ve inherited as a result of where they are.

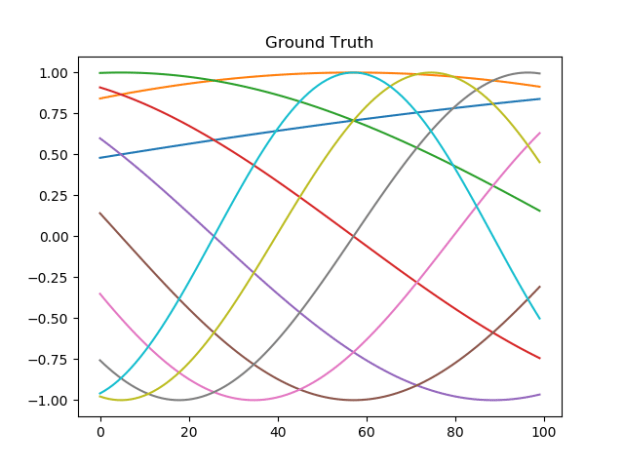

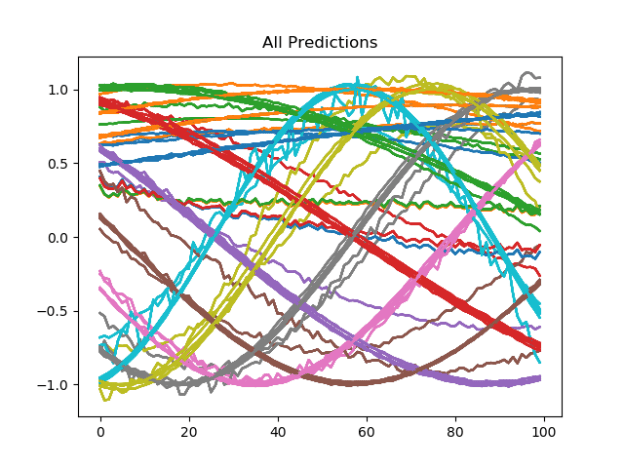

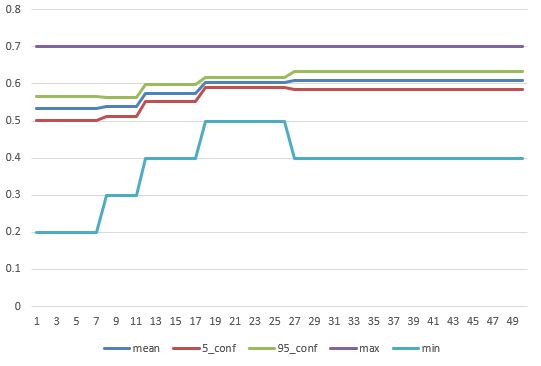

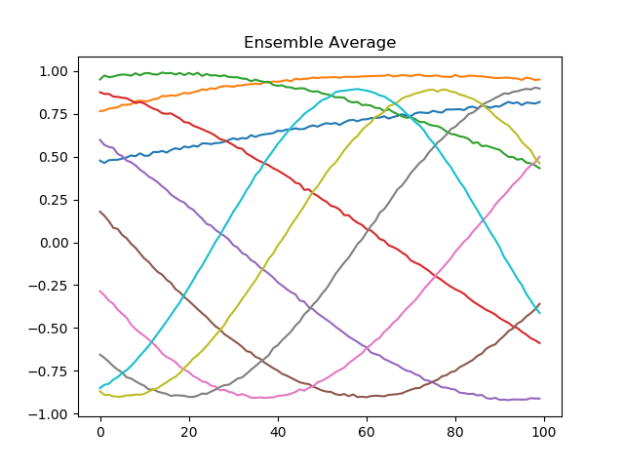

This really makes me think of the Wundt curve (fMRI analysis here?), and of how misalignment can arise between a bourgeois class (think elites) and a proletarian class. Without day-to-day existence constraints, it’s possible for elites to move individually and in small groups through less traveled belief spaces. Proletarian concerns have more “red queen” elements, so you need larger workers’ movements to make progress.
