Research about one hour

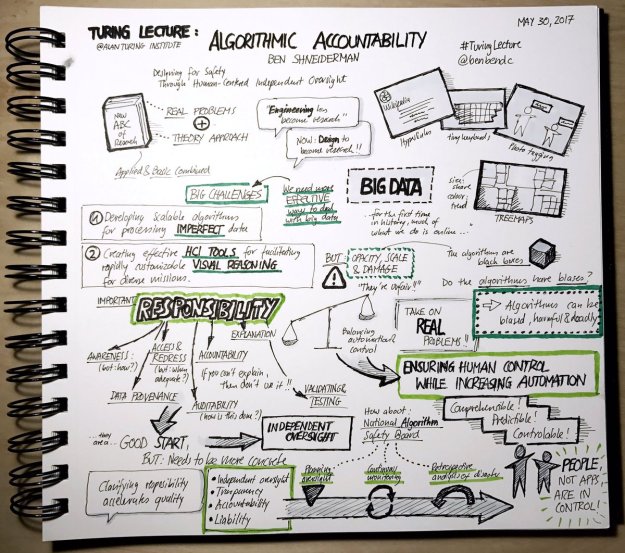

- Broke apart the assets for the CI 2017 poster V2. What about the relationships from the P&C considered harmful outline? That’s not in the abstract…

Research about one hour

Research (2 hours)

7:00 – 8:00 Research

9:00 – 4:00 BRI

7:00 – 8:00 Research

8:30 – 4:00 BRI

7:00 – 8:30 Research

9:00 – 4:00 BRI

7:30 – 12:00 Research

12:30 – 4:00 BRC

Sand Spring Bank

Ohio RV

7:00 – 8:00 Research

8:30 – 5:00 BRC

7:00 – 8:30 Research

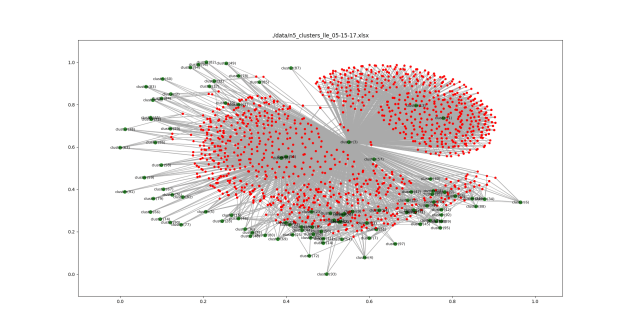

Add a picture of a network next to each item that reflects that item's polarization level. Maybe gray out the bits that aren't in the abstract.

9:00 – 5:30 BRC

7:00 – 8:00 Research

8:30 – 5:00 BRC

Call Acorn Inn!

Bike brake lights!

Google’s New AI Is Better at Creating AI Than the Company’s Engineers

7:00 – 8:00 Research

8:30 – 2:30 BRC

7:30 – 4:00 BRC

Research

7:00 – 8:00 Research

8:30 – 3:45 BRC

7:00 – 8:00 Research

8:30 – 4:30 BRC

7:00 – 8:00 Research

9:00 – 5:00 BRC

Well, the weekend ended on a sad, down note. Having problems getting motivated.

7:00 – 8:00 Research

8:30 – 8:00 PM BRC

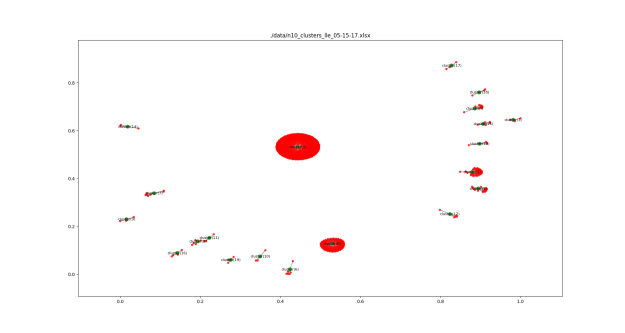

But by adjusting the hyperparameter ‘neighbors’ from 5 (above) to 10 (below), we get a completely different result:

Here, you can see that no cluster shares its nodes with any other cluster. That’s what we want: stable, but with good granularity.

You can also see that several items that were in cluster (0) distribute out when gender doesn’t override their associated clusters.

size

stable/total

list of stable
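The "size", "stable/total" and "list of stable" notes read like the columns of a per-cluster stability table. Below is a minimal Python sketch of such a report. It assumes each clustering run (for example `neighbors=5` versus `neighbors=10`) is available as a plain dict mapping node to cluster label. The function name `stability_report`, the input format, the toy node names, and the rule that a node counts as "stable" if it lands in whichever new cluster received most of its original cluster's members are all hypothetical choices for illustration, not the method actually used in this research.

```python
from collections import Counter


def stability_report(run_a, run_b):
    """Per-cluster stability of run_a's clusters when compared with run_b.

    run_a, run_b: dicts mapping node -> cluster label (hypothetical format).
    Returns {label: (size, n_stable, sorted list of stable nodes)}.
    A node is treated as 'stable' (an assumed rule) if, in run_b, it sits
    in the cluster that received the most members of its run_a cluster.
    """
    # Group nodes by their cluster label in run_a.
    clusters = {}
    for node, label in run_a.items():
        clusters.setdefault(label, []).append(node)

    report = {}
    for label, members in sorted(clusters.items()):
        # Only nodes that appear in both runs can be compared.
        shared = [n for n in members if n in run_b]
        if not shared:
            report[label] = (len(members), 0, [])
            continue
        # The run_b cluster that absorbed most of this run_a cluster.
        target, _ = Counter(run_b[n] for n in shared).most_common(1)[0]
        stable = sorted(n for n in shared if run_b[n] == target)
        report[label] = (len(members), len(stable), stable)
    return report


# Toy data (hypothetical): cluster 0 partly splits, cluster 1 stays intact.
run_k5 = {"a": 0, "b": 0, "c": 0, "d": 1, "e": 1}
run_k10 = {"a": 2, "b": 2, "c": 3, "d": 4, "e": 4}

report = stability_report(run_k5, run_k10)
for label, (size, n_stable, stable) in report.items():
    print(f"{label}: size={size} stable/total={n_stable}/{size} {stable}")
```

Here cluster 0 keeps two of its three members together (`a` and `b`), while cluster 1 moves as a unit. Comparing against the majority destination, rather than requiring identical labels, matters because cluster IDs are arbitrary and usually get renumbered between runs.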
