I think this is pretty good:

Are conspiracy theories what mysticism looks like when it adopts the trappings of rationalism?

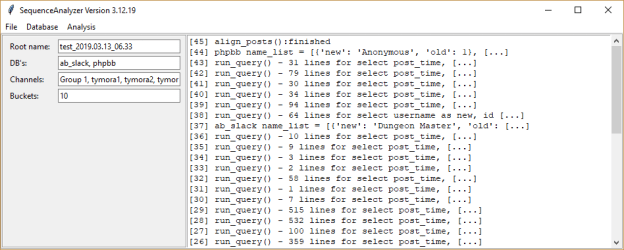

Added some exception handling for the SQL calls:

```python
# (inside the function that makes the SQL call, following its try: block)
except pymysql.err.OperationalError as oe:
    messagebox.showerror("pymysql.err.OperationalError", oe.args)
    return None
```
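The snippet above shows only the `except` clause. Below is a minimal, self-contained sketch of the whole pattern. It swaps pymysql for the standard-library `sqlite3` module, whose `OperationalError` plays the same role. It also swaps `messagebox.showerror` for a `report` callback so it runs without a database server or a GUI. The function name `safe_query` and the `report` parameter are hypothetical, not from the original code.

```python
import sqlite3
from typing import Callable, Optional, Sequence


def safe_query(conn: sqlite3.Connection, sql: str, params: Sequence = (),
               report: Callable[[str, str], None] = print) -> Optional[list]:
    """Run a query; on OperationalError, report it and return None.

    Assumption: sqlite3 stands in for pymysql, and `report(title, message)`
    stands in for tkinter's messagebox.showerror(title, message).
    """
    try:
        cur = conn.execute(sql, params)
        return cur.fetchall()
    except sqlite3.OperationalError as oe:
        # Same shape as the original handler: show the error, hand back None.
        report(type(oe).__name__, str(oe))
        return None
```

Calling `safe_query(conn, "SELECT * FROM missing")` on an empty in-memory database prints `OperationalError no such table: missing` and returns `None`. Callers therefore need to check for `None` before using the result.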

Downloaded the new Slack files. Had to handle the presence of GIFs and a few other DB issues. Everything continues to look good.

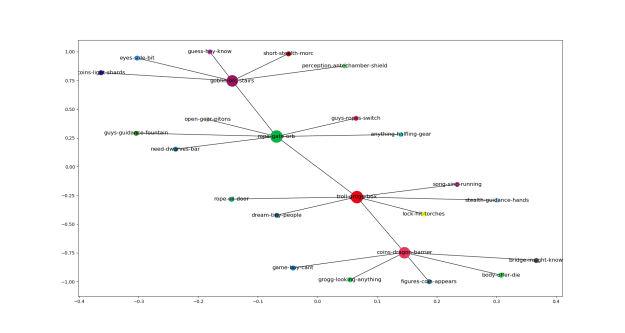

Working on building the table, then the map. Here’s the first shot.

```text
split_1 places: ['goblin', 'orc', 'stairs', 'vines', 'see', 'hit', 'arrow', 'fire', 'gate', 'behind']
splt-chn_1of4-tymora1 spaces: ['guys', 'temple', 'guess', 'damage', 'hey', 'spell', 'axe', 'know', 'trees', 'move', 'come']
splt-chn_1of4-tymora2 spaces: ['short', 'log', 'attack', 'feet', 'stealth', 'damage', 'morc', 'temple', 'axe', 'top', 'mindy']
splt-chn_1of4-tymora3 spaces: ['coins', 'light', 'damage', 'shards', 'glass', 'steps', 'floor', 'need', 'poison', 'bow', 'sacred']
splt-chn_1of4-tymora4 spaces: ['spell', 'glass', 'feet', 'perception', 'antechamber', 'shield', 'cast', 'hand', 'log', 'move', 'beyond']
splt-chn_1of4-Group 1 spaces: ['eyes', 'bow', 'side', 'bit', 'looking', 'carefully', 'halfling', 'arrows', 'near', 'beyond', 'hand']
common elements: set()
```

------------------------

```text
split_2 places: ['rope', 'gate', 'orb', 'statues', 'across', 'see', 'side', 'around', 'pit', 'feet']
splt-chn_2of4-tymora1 spaces: ['something', 'perception', 'hand', 'statue', 'floor', 'guys', 'ropes', 'switch', 'wall', 'tie', 'pull']
splt-chn_2of4-tymora2 spaces: ['tie', 'wall', 'open', 'lever', 'gear', 'trap', 'hand', 'pitons', 'lightning', 'javelin', 'cross']
splt-chn_2of4-tymora3 spaces: ['lever', 'trap', 'metal', 'need', 'floor', 'perception', 'statue', 'dwarves', 'hand', 'bar', 'large']
splt-chn_2of4-tymora4 spaces: ['trap', 'guys', 'guidance', 'metal', 'statue', 'fountain', 'perception', 'appears', 'investigate', 'spell', 'coins']
splt-chn_2of4-Group 1 spaces: ['anything', 'metal', 'halfling', 'gear', 'close', 'something', 'spell', 'statue', 'range', 'trap', 'hand']
common elements: set()
```

------------------------

```text
split_3 places: ['troll', 'grogg', 'box', 'chest', 'hand', 'open', 'gate', 'club', 'see', 'want']
splt-chn_3of4-tymora1 spaces: ['rope', 'oil', 'fire', 'door', 'sleep', 'maybe', 'trolls', 'key', 'nice', 'around', 'feet']
splt-chn_3of4-tymora2 spaces: ['dream', 'tiny', 'people', 'sleep', 'groggs', 'thing', 'eyes', 'turn', 'lets', 'need', 'group']
splt-chn_3of4-tymora3 spaces: ['attack', 'damage', 'key', 'fire', 'need', 'lock', 'hit', 'torches', 'attacks', 'dwarves', 'know']
splt-chn_3of4-tymora4 spaces: ['spell', 'stealth', 'guidance', 'sleep', 'hands', 'burning', 'cast', 'ready', 'attack', 'damage', 'key']
splt-chn_3of4-Group 1 spaces: ['song', 'sing', 'trolls', 'running', 'feather', 'sleeping', 'looking', 'need', 'creature', 'come', 'face']
common elements: set()
```

------------------------

```text
split_4 places: ['coins', 'dragon', 'barrier', 'platform', 'something', 'light', 'woman', 'see', 'eyes', 'treasure']
splt-chn_4of4-tymora1 spaces: ['blue', 'figures', 'enormous', 'coin', 'piles', 'appears', 'friend', 'around', 'full', 'wyrm', 'shall']
splt-chn_4of4-tymora2 spaces: ['body', 'enormous', 'across', 'must', 'offer', 'mortal', 'gold', 'piles', 'die', 'near', 'within']
splt-chn_4of4-tymora3 spaces: ['bridge', 'choose', 'insight', 'blue', 'know', 'gold', 'bridges', 'appears', 'die', 'enormous', 'game']
splt-chn_4of4-tymora4 spaces: ['stay', 'across', 'must', 'dragons', 'game', 'boy', 'enormous', 'gold', 'piles', 'cant', 'hear']
splt-chn_4of4-Group 1 spaces: ['grogg', 'looking', 'anything', 'thing', 'troll', 'dragons', 'moment', 'gate', 'gold', 'breath', 'magical']
common elements: set()
```
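The `set()` lines are consistent with a strict set intersection across all six keyword lists. That intersection is empty unless a single word appears in every list. Below is a minimal sketch, assuming that is what "common elements" computes, using the split_1 lists from the output above. The helper `shared_by_at_least` is a hypothetical, looser measure that counts how many lists each word appears in.

```python
from collections import Counter

# Keyword lists copied from the split_1 block of the output above.
keyword_lists = {
    "split_1": ['goblin', 'orc', 'stairs', 'vines', 'see', 'hit', 'arrow', 'fire', 'gate', 'behind'],
    "tymora1": ['guys', 'temple', 'guess', 'damage', 'hey', 'spell', 'axe', 'know', 'trees', 'move', 'come'],
    "tymora2": ['short', 'log', 'attack', 'feet', 'stealth', 'damage', 'morc', 'temple', 'axe', 'top', 'mindy'],
    "tymora3": ['coins', 'light', 'damage', 'shards', 'glass', 'steps', 'floor', 'need', 'poison', 'bow', 'sacred'],
    "tymora4": ['spell', 'glass', 'feet', 'perception', 'antechamber', 'shield', 'cast', 'hand', 'log', 'move', 'beyond'],
    "Group 1": ['eyes', 'bow', 'side', 'bit', 'looking', 'carefully', 'halfling', 'arrows', 'near', 'beyond', 'hand'],
}


def common_elements(lists):
    """Words present in every list: a strict all-way set intersection."""
    sets = [set(words) for words in lists]
    return set.intersection(*sets) if sets else set()


def shared_by_at_least(lists, k):
    """Hypothetical looser measure: words found in at least k of the lists."""
    counts = Counter(word for words in lists for word in set(words))
    return {word: n for word, n in counts.items() if n >= k}


print(common_elements(keyword_lists.values()))        # → set()
print(shared_by_at_least(keyword_lists.values(), 3))  # → {'damage': 3}
```

With `k = 2`, none of the split_1 words show up in any chain list. Several chain words do recur across chains, such as 'temple', 'spell', and 'hand'.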

A really interesting thing happened in the run last night. There was an argument about the shared perception of the belief space in the troll room. The misalignment was with the DM (the longer smear), who is kind of like a ship's captain and the final authority in the dungeon. When a player resists adhering to the DM's perception of the belief space, the DM threatens expulsion. Some explorers are born, but involuntary explorers can also be made:
