Amid a tense meeting with protesters, Portland Mayor Ted Wheeler tear-gassed by federal agents

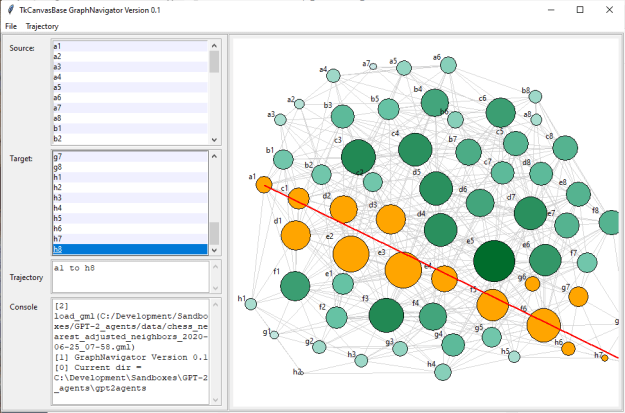

GPT-2 Agents

- Good back-and-forth with Antonio about venues

- It struck me that statistical tests for fair dice might give me a way of comparing the two populations, since pieces are roughly equivalent to die sides. A post on the RPG Stack Exchange led me to Pearson’s chi-square test (which rang a bell as the sort of test I might need).

- Success! Here’s the code:

```python
from scipy.stats import chisquare, chi2_contingency, pearsonr
import pandas as pd
import numpy as np

# Counts per piece type, in the column order used for the DataFrame below
gpt = [51394, 25962, 19242, 23334, 15928, 19953]
twic = [49386, 31507, 28263, 31493, 22818, 23608]

# Goodness-of-fit test using the raw TWIC counts as the expected frequencies.
# Note: the two totals differ, and newer SciPy releases raise a ValueError in that case.
z, p = chisquare(f_obs=gpt, f_exp=twic)
print("z = {}, p = {}".format(z, p))

ar = np.array([gpt, twic])
print("\n", ar)

df = pd.DataFrame(ar, columns=['pawns', 'rooks', 'bishops', 'knights', 'queen', 'king'], index=['gpt-2', 'twic'])
print("\n", df)

# Test of independence on the 2 x 6 contingency table
z, p, dof, expected = chi2_contingency(df, correction=False)
print("\nNo correction: z = {}, p = {}, DOF = {}, expected = {}".format(z, p, dof, expected))

# Yates' correction only applies when DOF is 1, so this run matches the uncorrected one
z, p, dof, expected = chi2_contingency(df, correction=True)
print("\nCorrected: z = {}, p = {}, DOF = {}, expected = {}".format(z, p, dof, expected))

# Pearson correlation between the two count vectors
cor = pearsonr(gpt, twic)
print("\nCorrelation = {}".format(cor))
```

- Here are the results:

```
"C:\Program Files\Python\python.exe" C:/Development/Sandboxes/GPT-2_agents/gpt2agents/analytics/pearsons.py
z = 8696.966788178523, p = 0.0

 [[51394 25962 19242 23334 15928 19953]
 [49386 31507 28263 31493 22818 23608]]

        pawns  rooks  bishops  knights  queen   king
gpt-2  51394  25962    19242    23334  15928  19953
twic   49386  31507    28263    31493  22818  23608

No correction: z = 2202.2014776980245, p = 0.0, DOF = 5, expected = [[45795.81128532 26114.70012657 21586.92215826 24914.13916789
  17606.71268169 19794.71458027]
 [54984.18871468 31354.29987343 25918.07784174 29912.86083211
  21139.28731831 23766.28541973]]

Corrected: z = 2202.2014776980245, p = 0.0, DOF = 5, expected = [[45795.81128532 26114.70012657 21586.92215826 24914.13916789
  17606.71268169 19794.71458027]
 [54984.18871468 31354.29987343 25918.07784174 29912.86083211
  21139.28731831 23766.28541973]]

Correlation = (0.9779452546334226, 0.0007242538456558558)

Process finished with exit code 0
```

- It might be time to start writing this up!

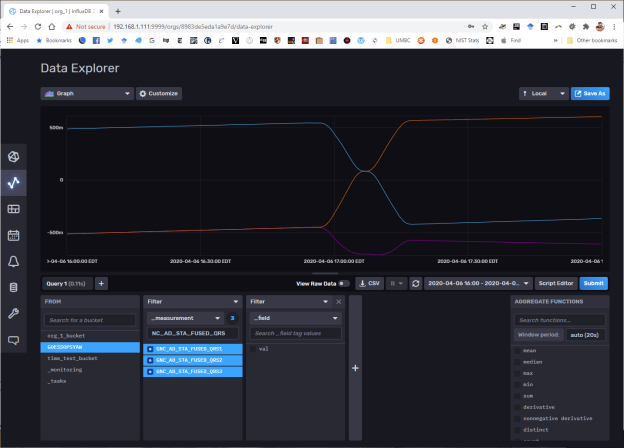
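To make the contingency-table numbers above less of a black box, here is a minimal sketch that rebuilds the expected counts and the chi-square statistic by hand with NumPy. The variable names (`observed`, `row_totals`, and so on) are my own for illustration; the counts are the ones from the log. It assumes the standard Pearson formula with no continuity correction, which is what the `correction=False` run reports.

```python
import numpy as np

# Counts copied from the log above (rows: gpt-2, twic; columns: piece types)
observed = np.array([
    [51394, 25962, 19242, 23334, 15928, 19953],
    [49386, 31507, 28263, 31493, 22818, 23608],
], dtype=float)

row_totals = observed.sum(axis=1, keepdims=True)  # shape (2, 1)
col_totals = observed.sum(axis=0, keepdims=True)  # shape (1, 6)
grand_total = observed.sum()

# Expected count under independence: row total * column total / grand total
expected = row_totals * col_totals / grand_total

# Pearson's chi-square statistic, summed over every cell of the table
statistic = ((observed - expected) ** 2 / expected).sum()

# Degrees of freedom: (rows - 1) * (columns - 1)
dof = (observed.shape[0] - 1) * (observed.shape[1] - 1)

print("statistic = {:.4f}, DOF = {}".format(statistic, dof))
print(expected)
```

The statistic and expected counts should agree with the `chi2_contingency` output in the log, which is a quick sanity check that the test is comparing proportions across the two sources and not raw totals.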

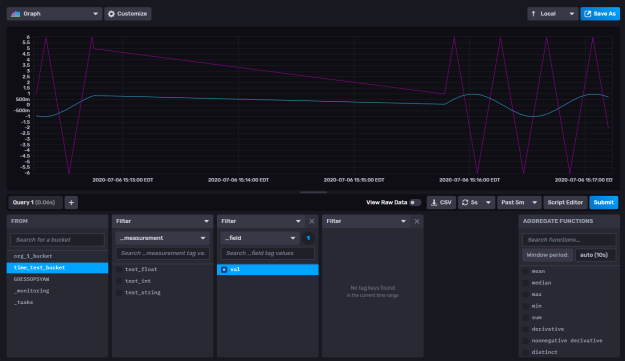
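One caveat on the goodness-of-fit call: the GPT-2 and TWIC totals differ, so passing the raw TWIC counts as `f_exp` mixes the difference in sample size with the difference in distribution, and recent SciPy releases reject the call outright. A possible alternative, sketched below, rescales the TWIC counts to the GPT-2 total before testing. The rescaling is my assumption, not what the logged run did, so the statistic will not match the 8696.97 shown above.

```python
import numpy as np
from scipy.stats import chisquare

# Counts copied from the log above
gpt = np.array([51394, 25962, 19242, 23334, 15928, 19953], dtype=float)
twic = np.array([49386, 31507, 28263, 31493, 22818, 23608], dtype=float)

# Scale the TWIC counts so they sum to the GPT-2 total.
# The TWIC proportions are unchanged; only the overall size is matched.
expected = twic * gpt.sum() / twic.sum()

# Goodness-of-fit: do the GPT-2 counts follow the TWIC proportions?
statistic, p_value = chisquare(f_obs=gpt, f_exp=expected)
print("statistic = {}, p = {}".format(statistic, p_value))
```

With counts this large, even small differences in proportion produce a vanishingly small p-value, so the statistic is more useful as a relative measure than as a pass/fail test.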

GOES

- Found vehicle orientation mnemonics: GNC_AD_STA_FUSED_QRS#

- 11:00 Meeting with Erik and Vadim about schedules. Erik will send an update. The meeting went well. Vadim’s going to exercise the model through a set of GOTO ANGLE 90 / GOTO ANGLE 0 commands for each of the rwheels, and we’ll see how they map to the primary axis of the GOES.
