Learning to predict the cosmological structure formation

- Matter evolved under the influence of gravity from minuscule density fluctuations. Nonperturbative structure formed hierarchically over all scales and developed non-Gaussian features in the Universe, known as the cosmic web. To fully understand the structure formation of the Universe is one of the holy grails of modern astrophysics. Astrophysicists survey large volumes of the Universe and use a large ensemble of computer simulations to compare with the observed data to extract the full information of our own Universe. However, to evolve billions of particles over billions of years, even with the simplest physics, is a daunting task. We build a deep neural network, the Deep Density Displacement Model (D³M), which learns from a set of prerun numerical simulations, to predict the nonlinear large-scale structure of the Universe with the Zel’dovich Approximation (ZA), an analytical approximation based on perturbation theory, as the input. Our extensive analysis demonstrates that D³M outperforms second-order Lagrangian perturbation theory (2LPT), the commonly used fast-approximate simulation method, in predicting cosmic structure in the nonlinear regime. We also show that D³M is able to accurately extrapolate far beyond its training data and predict structure formation for significantly different cosmological parameters. Our study proves that deep learning is a practical and accurate alternative to approximate 3D simulations of the gravitational structure formation of the Universe.
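The network's input, the Zel’dovich Approximation, moves each particle from its initial grid position q along a displacement Psi(q) fixed by the linear density field: x = q + D(t) Psi(q), with div(Psi) = -delta. A minimal NumPy sketch of computing that input, assuming a periodic cubic box, a linear density grid normalized to growth factor D = 1, and hypothetical function names (`za_displacement`, `za_positions`). This is not the D³M preprocessing code; its grid size and normalization are not given here.

```python
import numpy as np


def za_displacement(delta: np.ndarray, box_size: float) -> np.ndarray:
    """Zel'dovich displacement Psi from a linear density grid (periodic box).

    Solves div(Psi) = -delta in Fourier space: Psi_k = i k delta_k / k^2.
    Assumes `delta` is a cubic (n, n, n) grid normalized to growth factor 1.
    Returns an array of shape (3, n, n, n).
    """
    n = delta.shape[0]
    delta_k = np.fft.fftn(delta)
    k1d = 2 * np.pi * np.fft.fftfreq(n, d=box_size / n)  # angular wavenumbers per axis
    kx, ky, kz = np.meshgrid(k1d, k1d, k1d, indexing="ij")
    k2 = kx**2 + ky**2 + kz**2
    k2[0, 0, 0] = 1.0  # avoid 0/0; the k = 0 mode carries no displacement
    psi = np.empty((3,) + delta.shape)
    for axis, k in enumerate((kx, ky, kz)):
        psi_k = 1j * k * delta_k / k2
        psi_k[0, 0, 0] = 0.0
        psi[axis] = np.fft.ifftn(psi_k).real
    return psi


def za_positions(psi: np.ndarray, box_size: float, growth: float) -> np.ndarray:
    """Particle positions x = q + D * Psi(q), wrapped into the periodic box."""
    n = psi.shape[1]
    q = np.indices((n, n, n)) * (box_size / n)  # Lagrangian grid positions, shape (3, n, n, n)
    return (q + growth * psi) % box_size
```

A single plane wave makes a quick sanity check: for delta = cos(kx) the displacement should be -sin(kx)/k along x and zero along y and z.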

GPT-Agents

- Generating content for the small-corpora models. 6k is done, working on 3k. Done!

- Generated sentiment

- To speed up loading a .sql file into a MySQL database, do this (via StackOverflow):

```
mysql> use db_name;
mysql> SET autocommit=0 ; source the_sql_file.sql ; COMMIT ;
```
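The MySQL trick works because, with autocommit on, each INSERT in the file is committed as its own transaction; turning autocommit off and issuing a single COMMIT at the end pays that cost once. The same idea in a form that runs anywhere, using Python's built-in `sqlite3` as a stand-in for MySQL (the `reviews` table, its columns, and `bulk_load` are hypothetical):

```python
import sqlite3
from typing import Iterable, Optional, Tuple


def bulk_load(conn: sqlite3.Connection, rows: Iterable[Tuple[int, Optional[str]]]) -> None:
    """Insert every row inside one transaction and commit once at the end.

    Stand-in for MySQL's SET autocommit=0 ... COMMIT: the commit cost is paid
    once for the whole batch instead of once per INSERT. Assumes a hypothetical
    table reviews(stars INTEGER NOT NULL, body TEXT NOT NULL).
    """
    # Used as a context manager, the connection commits when the block exits
    # cleanly and rolls back the whole batch if any statement raises.
    with conn:
        conn.executemany("INSERT INTO reviews (stars, body) VALUES (?, ?)", rows)
```

Because the batch is one transaction, a failure partway through rolls everything back, which is usually what you want for a bulk load.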

- 3:00 Meeting

- https://www.pnas.org/authors/submitting-your-manuscript – set up a paper repo in Overleaf and start roughing out the paper

- Need to get the spreadsheets built for the 3k and 6k models

- Build a spreadsheet (and template?) for the LWIWC data

- Sent Shimei reviews from the 50k, 25k, 12k, 6k, and 3k models

- One of the most observable results is that the model tends to amplify items that appear in larger quantities in the training corpora and to reduce items that are less common. However, the tokens within a review seem to be unchanged. The average number of stars associated with a POSITIVE or NEGATIVE review seems very resilient.
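One way to put numbers on the amplification effect is to compare each item's share of the generated reviews with its share of the training corpus: a ratio above 1 means the model over-produces the item, below 1 means it suppresses it. This sketch assumes each review can be reduced to a hashable "item" (a product category, a keyword, whatever is being counted); that mapping and the function names are hypothetical and not described in these notes.

```python
from collections import Counter
from typing import Dict, Hashable, Iterable, Tuple


def shares(items: Iterable[Hashable]) -> Dict[Hashable, float]:
    """Fraction of the corpus taken up by each item."""
    counts = Counter(items)
    total = sum(counts.values())
    return {item: n / total for item, n in counts.items()}


def amplification(train: Iterable[Hashable], generated: Iterable[Hashable]) -> Dict[Hashable, float]:
    """Generated share divided by training share, for every item seen in training.

    > 1.0: the model over-produces the item; < 1.0: it under-produces it.
    """
    t, g = shares(train), shares(generated)
    return {item: g.get(item, 0.0) / share for item, share in t.items()}


def mean_stars(reviews: Iterable[Tuple[str, int]]) -> Dict[str, float]:
    """Average star rating per sentiment label (e.g. POSITIVE / NEGATIVE)."""
    totals: Counter = Counter()
    counts: Counter = Counter()
    for label, stars in reviews:
        totals[label] += stars
        counts[label] += 1
    return {label: totals[label] / counts[label] for label in counts}
```

Running `amplification` and `mean_stars` on each of the 50k through 3k model outputs would show whether the skew grows as the training corpus shrinks.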

SBIRs

- Meeting with Zach – nope, need to delay until tomorrow

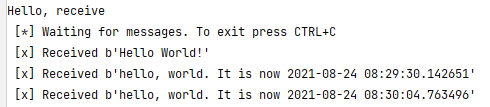

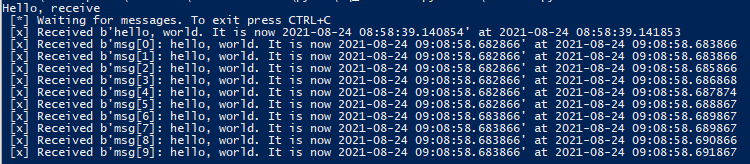

- Trying to get RabbitMQ working

- Had to install Erlang from here: www.erlang.org

- Installed RabbitMQ from here: www.rabbitmq.com/install-windows.html

- Doing the Python tutorial from here: rabbitmq.com/tutorials/tutorial-one-python.html

- Seems to be working?

- Writing the consumer

- That’s working too!

- Seems plenty speedy when batched up, too

- 9:15 standup

- 1:00 Meeting about the sim for ARL. Going to talk about Missile Command, where the physics are simple but the tactics are difficult.
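The RabbitMQ experiment above follows the official Python tutorial, which uses the `pika` client. The notes don't say how the batching was done; this sketch assumes "batched up" means packing many small items into one JSON message rather than publishing each item separately. The queue name `hello` comes from the tutorial, while `chunked`, `publish_batched`, `on_message`, `run_producer`, and `run_consumer` are hypothetical names.

```python
import json
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")
QUEUE = "hello"  # queue name from the RabbitMQ Python tutorial; any name works


def chunked(items: Iterable[T], size: int) -> Iterator[List[T]]:
    """Group items into lists of at most `size` elements."""
    batch: List[T] = []
    for item in items:
        batch.append(item)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:
        yield batch


def publish_batched(channel, items: Iterable[str], size: int = 100) -> int:
    """Publish items as JSON-array messages and return how many messages were sent.

    `channel` is anything with pika's basic_publish(exchange=, routing_key=, body=).
    """
    sent = 0
    for batch in chunked(items, size):
        channel.basic_publish(exchange="", routing_key=QUEUE, body=json.dumps(batch))
        sent += 1
    return sent


def on_message(ch, method, properties, body: bytes) -> None:
    """Consumer callback in pika's (channel, method, properties, body) form."""
    for item in json.loads(body):
        print("received", item)


def run_producer(items: Iterable[str], host: str = "localhost") -> int:
    import pika  # lazy import: the helpers above work without pika installed

    connection = pika.BlockingConnection(pika.ConnectionParameters(host))
    channel = connection.channel()
    channel.queue_declare(queue=QUEUE)
    sent = publish_batched(channel, items)
    connection.close()
    return sent


def run_consumer(host: str = "localhost") -> None:
    import pika

    connection = pika.BlockingConnection(pika.ConnectionParameters(host))
    channel = connection.channel()
    channel.queue_declare(queue=QUEUE)  # idempotent, so both sides can declare it
    channel.basic_consume(queue=QUEUE, on_message_callback=on_message, auto_ack=True)
    channel.start_consuming()  # blocks until interrupted (Ctrl+C)
```

Start `run_consumer()` in one process and call `run_producer(...)` from another against a local broker. Batching spreads the per-message overhead (network round trips, broker bookkeeping) across many items, at the cost of larger messages and acknowledgements that cover a whole batch rather than a single item.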

Book

- Clean up chapter thumbnails. Done!
