Made a lot of progress on Killer Apps. Finished Detection, Disruption, and Attacks and Counterattacks.

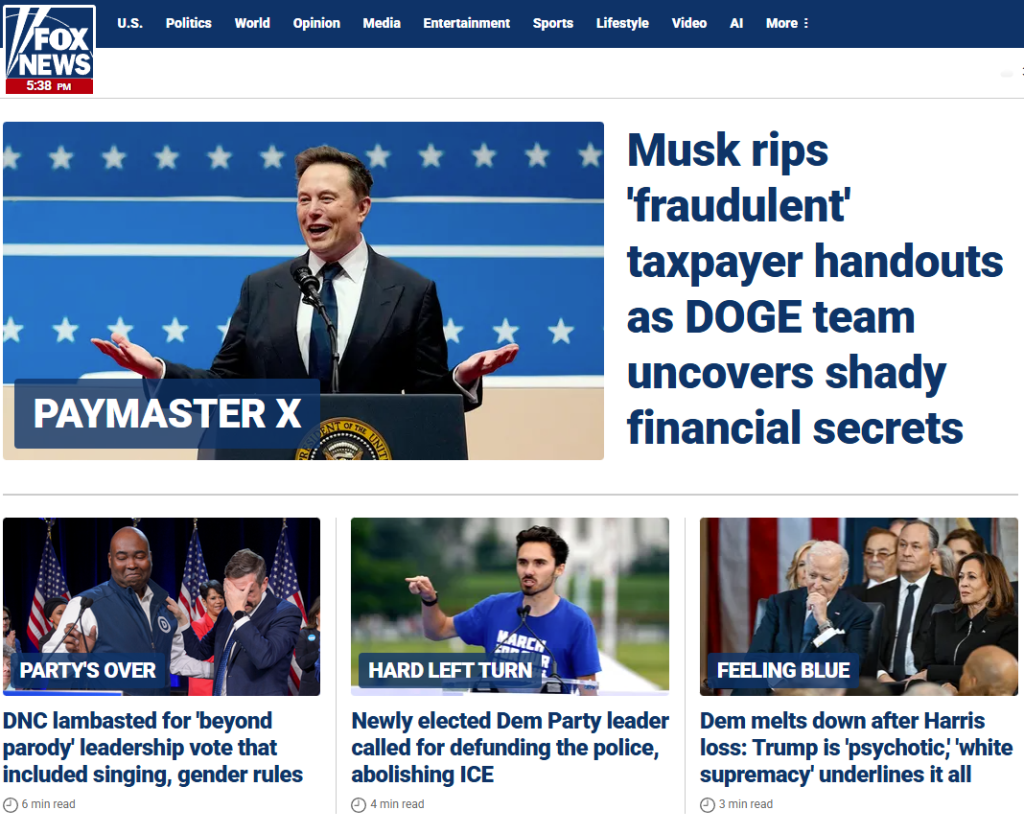

Meanwhile, on the Fox News home page:

The census.gov website is dead:

Tasks

More root stuff

Copyright Office Releases Part 2 of Artificial Intelligence Report

Made a gif of a root growing as a metaphor for an LLM generating text from the same prompt four times (from this video):

P33

GPT Agents

SBIRs

The Ignite thing went well – it looks like it should be fun! Need to see how to get a webpage running in PPT that works with an LLM

Did some more work on P33

SBIRs

GPT Agents

Need to reach out to Markus Schneider for the Trustworthy Information proposal – done!

SBIRs

Made some progress on P33. Need to reach out to Manlio De Domenico on that? Also Markus Schneider for the Trustworthy Information proposal

So here’s an interesting thing. There is a considerable discourse about how AI is wrecking the environment. It is absolutely true that more data centers are being built and that they, on average, use a lot of water and a good deal of energy.

But there are a lot of worse offenders. Data centers consume about 4.5% of electricity in the US. That’s for everything. AI, the WordPress instance that you are reading now, Netflix streaming gigabytes of data per second – everything.

But there are much bigger energy users. To generate enough tokens for the entire Lord of the Rings trilogy, a Llama3 model probably uses about 5 watt-hours. Transportation, a much larger energy consumer, shows how small this is: on that much energy, a Tesla Model 3 could manage to go about 25 feet, or a bit under 10 meters. Transportation, manufacturing, and energy production use a lot more energy:

Source: Wikipedia

If you want to make some changes in energy consumption, go after small improvements in the big consumers. Reduce energy consumption in, say, electricity production (37%) by doubling solar, and that’s the equivalent of cutting the power use of AI by 50%.

In addition to consuming energy, data centers require cooling, and they use a lot of water for it, though that is steadily being optimized down. On average a data center uses about 32 million gallons of water for cooling.

Sounds like a lot, right?

Let’s look at the oil and gas industry. The process of fracking, where water is injected at high pressure into oil- and gas-bearing rock from about 2,500 wells, uses about 11 million gallons to produce crude oil. So data centers are worse than fracking!

But hold on. You still have to process that oil. And it turns out that for every barrel of oil refined in the US, about 1.5 barrels of water are used. The USA refines about 5.5 billion barrels of oil per year. Combine that with the fracking numbers and the oil and gas industry uses about 500 billion gallons of water per year, or 5 times the amount used by data centers doing all the things data centers do, including AI.
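For anyone who wants to check the refining part of that arithmetic, here is a minimal sketch. It uses only the two refining figures quoted above plus the standard 42 US gallons per oil barrel. The function name is made up for illustration, and the fracking contribution is left out because how it scales is not spelled out here.

```python
# Rough check of the refining-only water figure.
# Inputs come from the text above; the function name is hypothetical.

GALLONS_PER_BARREL = 42  # one US oil barrel is 42 US gallons


def refining_water_gallons(barrels_refined, water_barrels_per_oil_barrel):
    """Gallons of water used to refine a given number of barrels of oil."""
    water_barrels = barrels_refined * water_barrels_per_oil_barrel
    return water_barrels * GALLONS_PER_BARREL


# 5.5 billion barrels refined per year, 1.5 barrels of water per barrel of oil
refining = refining_water_gallons(5.5e9, 1.5)
print(f"Refining alone: {refining / 1e9:.1f} billion gallons per year")
```

Refining on its own comes to several hundred billion gallons per year, before any fracking water is added on top.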

To keep this short, we are not going to even talk about the water use of agriculture here.

So why all the ink spilled on this? Well, AI is new and it gets clicks. But I went to Google Trends to compare the discussion of water use for AI and for fracking, and I got an interesting relationship:

The amount of discussion about Fracking in this case has leveled off as the discussion of AI has taken off. And given the history that the oil industry has in generating FUD (fear, uncertainty and doubt), I would not be in the least surprised if it turns out that the oil industry is fueling the moral panic about AI to distract us from the real dangers and to keep those businesses profitable.

Killer Apps

SBIRs

Meta has been busy:

Llama Stack defines and standardizes the core building blocks that simplify AI application development. It codified best practices across the Llama ecosystem. More specifically, it provides

P33

Killer Apps book

Made some progress on P33. Need to reach out to Manlio De Domenico on that? Also Markus Schneider for the Trustworthy Information proposal

Tasks

Got a good start on the Project 2033 doc

Chores

SBIRs

I think it’s a great time to re-think what a resilient representative democracy would look like in the context of global, instantaneous communication and smart machines. I think that it is fair to argue that the run of liberal democracy (1945 – 2008) has become exhausted. One of the reasons that it no longer appears to have traction is that it takes a lot of work to get tangibly better living for many people. For this and other, more structural reasons (e.g. media ownership by the rich), the autocratic and authoritarian systems are winning globally.

So.

We need to figure out what structures an egalitarian system needs to thrive and work to implement them. This is the time to do it, and we have years to work it out while <waves hands> all this plays out.

My working title for this concept is… Project 2033

Assume existing power structures on the left become irrelevant over the next 2-6 years and it’s as bad as you think. People will tire of all the “winning,” and we need to have a plan in hand that looks attractive to (most) people who really just want something better than where they are now.*

* Now will be much worse in 2-6 years so this will be an easier pitch

SBIRs

I took a rough stab at what tokens cost today, based on working out the energy cost of a token in watt-hours for a model like the 70B parameter Llama3 model if it were run on an Nvidia GeForce RTX 4090. Here are my estimates for some pretty hefty books, if an LLM were to generate the same number of words:

It’s not much! My sense is that most interactions use a small fraction of a watt-hour, and a big GPU like the A100 is probably even more efficient than an RTX 4090. So if you are paying $20/month for a big model, unless you generate something like four War-and-Peace-like mountains of text, the companies are making a profit. The spreadsheet is here, if you’d like to play with it:
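The shape of that estimate is just power draw times generation time. Here is a minimal sketch of it. This is not the spreadsheet: the function names are made up, and every number in the example call is a placeholder assumption (word count, tokens per word, generation speed, and a GPU running flat out at the RTX 4090's nominal 450 W), so swap in your own.

```python
# Back-of-the-envelope energy estimate for generated text.
# Function names and all example values are illustrative assumptions,
# not figures from the spreadsheet.


def generation_energy_wh(words, tokens_per_word, tokens_per_second, gpu_watts):
    """Watt-hours needed to generate `words` words of text."""
    tokens = words * tokens_per_word
    seconds = tokens / tokens_per_second
    return gpu_watts * seconds / 3600.0  # watt-seconds to watt-hours


def electricity_cost_usd(energy_wh, usd_per_kwh):
    """Electricity cost for a given number of watt-hours."""
    return energy_wh / 1000.0 * usd_per_kwh


# Placeholder inputs: a very long book, a guessed tokenization ratio,
# a guessed generation speed, and the 4090's nominal board power.
wh = generation_energy_wh(
    words=500_000,
    tokens_per_word=1.3,
    tokens_per_second=20.0,
    gpu_watts=450.0,
)
print(f"{wh:.1f} Wh, about ${electricity_cost_usd(wh, usd_per_kwh=0.15):.2f}")
```

The result swings a lot with the assumed tokens per second, which is the first number to replace with a measured value.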

SBIRs

GPT Agents

This is really interesting – from Instagram this morning. Need to add it to the trustworthy information proposal:

For comparison, here are Wikipedia page views for Democrat and Republican, along with the disambiguated pages for the parties in the United States, from election day to the inauguration. The number in the legend is the cumulative views for that period.

Instagram is doing some seriously untrustworthy things. Need to update the proposal to include this.

NBC is also manipulating things (via BlueSky)

And if you look at the audience at the time he does it, you can see that some recognize what it is. And they are thrilled:

Vacation plane tix!

SBIRs

Trying to decide if I want to watch the Washington Post wither away or switch to the Guardian

Found these two items on The Decoder:

Compile and run Joseph Weizenbaum’s original 1965 code for ELIZA on CTSS, using the s709 IBM 7094 emulator. (GitHub)

Got the Senate testimony chapter finished yesterday. Today I start working through the analysis. Also, I need to add this to the vignette 1 analysis. And to the slide deck for the talk. Maybe even start with it.

4:30 Dentist

Really good example of AI slop and its consequences.

Tasks

Tasks

SBIRs
