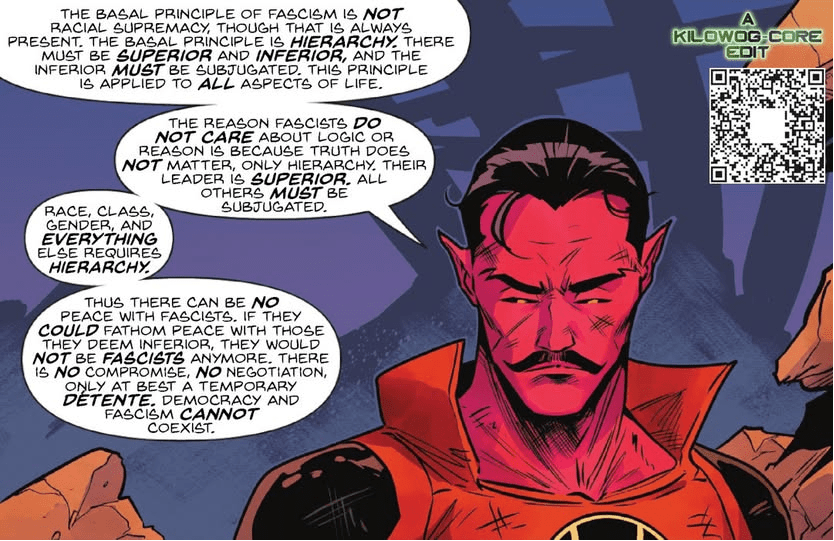

I wrote an entire book on this, and they do it in a comic panel. Tip of the hat.

Tasks

- Guardian (done) and BGE Home (will call back?)

- Shovel

- Disassemble desk

- Put computers on floor or dining table

- Goodwill

- More boxes (diplomas)

- Pack up closets

- Pack up the basement

- Drop off Bennie