Cleats and fenders!

SBIRs

- Sprint planning – done. Kinda forgot that I was going to take 3 days off for PTO. Oops

- Slides! Good progress

May! Lovely weather today

Had an interesting talk with Aaron that moved my thinking forward on LLMs as life forms.

It’s not the LLMs – that’s the substrate

The living process is the prompt, which feeds back on itself. Prompts grow interactively, in a complex way based (currently) on the previous text in the prompt. The prompt is ‘living information’ that can adapt based on additions to the prompt, as occurs in chat.

SBIRs

GPT Agents

MPT-7B

MPT-7B is a decoder-style transformer pretrained from scratch on 1T tokens of English text and code. This model was trained by MosaicML and is open-sourced for commercial use (Apache-2.0).

MPT-7B is part of the family of MosaicPretrainedTransformer (MPT) models, which use a modified transformer architecture optimized for efficient training and inference.

These architectural changes include performance-optimized layer implementations and the elimination of context length limits by replacing positional embeddings with Attention with Linear Biases (ALiBi). Thanks to these modifications, MPT models can be trained with high throughput efficiency and stable convergence. MPT models can also be served efficiently with both standard HuggingFace pipelines and NVIDIA’s FasterTransformer.
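To make the ALiBi idea a bit more concrete, here is a minimal sketch of the bias it adds to attention scores in place of positional embeddings. It follows the general ALiBi recipe (a per-head slope times the distance between query and key), not MosaicML's actual code. The function names are made up for illustration, the slope formula assumes the number of heads is a power of two, and the causal mask is folded into the same table just to keep the example short.

```python
def alibi_slopes(n_heads):
    # Geometric sequence of per-head slopes: 2^(-8/n), 2^(-16/n), ...
    # Assumption: n_heads is a power of two.
    start = 2 ** (-8 / n_heads)
    return [start ** (i + 1) for i in range(n_heads)]


def alibi_bias(n_heads, seq_len):
    # bias[h][i][j] is added to the attention score of query i on key j.
    # Keys further in the past get a larger linear penalty; future keys
    # (j > i) are masked with -inf, which is the usual causal mask folded
    # in here for illustration.
    bias = []
    for slope in alibi_slopes(n_heads):
        head = []
        for i in range(seq_len):
            row = [
                -slope * (i - j) if j <= i else float("-inf")
                for j in range(seq_len)
            ]
            head.append(row)
        bias.append(head)
    return bias


if __name__ == "__main__":
    for row in alibi_bias(n_heads=8, seq_len=4)[0]:
        print(row)
```

Because the penalty depends only on distance, nothing in the table is tied to a fixed maximum sequence length, which is the property the model card is pointing at.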

This model uses the MosaicML LLM codebase, which can be found in the llm-foundry repository. It was trained by MosaicML’s NLP team on the MosaicML platform for LLM pretraining, finetuning, and inference.

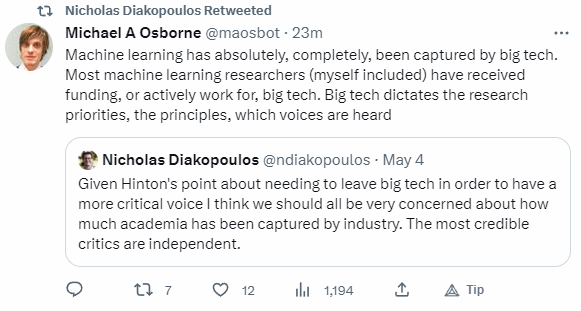

What other agendas should there be for ML? What role should government play in developing models for the common good? For the common defense?

SBIRs

Went to the USNA Capstone day yesterday, which was fun. Except for when the bus broke.

I’ve been reading Metaphors We Live By. Its central idea is that most of our communication is based on metaphors – that GOOD IS UP, IDEAS ARE FOOD, or TIME IS AN OBJECT. Because we are embodied beings in a physical world, the irreducible foundation of the metaphors we use is physical – UP/DOWN, FORWARD/BACK, NEAR/FAR, etc.

This makes me think of LLMs, which are so effective at communicating with us that it is very easy to believe that they are intelligent – AI. But as I’m reading the book, I wonder if that’s the right framing. I don’t think that these systems are truly intelligent in the way that we can be (some of the time). I’m beginning to think that they may be alive though.

Life as we understand it emerges from chemistry following complex rules. Once over a threshold, living things can direct their chemistry to perform actions. That in turn leads to physical embodiment and the irreducible concept of up.

Deep neural networks could be regarded as a form of digital chemistry. Simple systems (e.g. logic gates) are used to create more complex systems such as adders and multipliers. Add a lot of time, development, and data and you get large language models that you can chat with.

The metaphor of biochemistry seems to be emerging in the words we use to describe how these models behave – data can be poisoned or refined. Prompt creation and tuning is not like traditional programming. Words are added and removed to produce the desired behavior more in the way that alchemists worked with their compounds or that drug researchers work with animal models.

These large (foundational) models are true natives of the digital information domain. They are now producing behavior that is not predictable based on the inputs in the way that arithmetic can be understood. Their behavior is more understandable in aggregate – use the same prompt 1,000 times and you get a distribution of responses. That’s more in line with how living things respond to a stimulus.
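A toy sketch of what "understandable in aggregate" looks like in practice. The `toy_model` function is a hypothetical stand-in for a real LLM call: it ignores the prompt and samples from a few canned replies with made-up weights. The point is only the shape of the experiment: same prompt, many samples, then look at the distribution rather than any single answer.

```python
import random
from collections import Counter


def toy_model(prompt, rng):
    # Hypothetical stand-in for an LLM: ignores the prompt content and
    # samples one canned reply. The replies and weights are invented.
    replies = ["yes", "no", "maybe"]
    weights = [0.6, 0.3, 0.1]
    return rng.choices(replies, weights=weights, k=1)[0]


def response_distribution(prompt, n=1000, seed=0):
    # Send the same prompt n times and return the fraction of each reply.
    rng = random.Random(seed)
    counts = Counter(toy_model(prompt, rng) for _ in range(n))
    return {reply: count / n for reply, count in counts.items()}


if __name__ == "__main__":
    print(response_distribution("Is it alive?"))
```

With a real model you would swap `toy_model` for an API call with a nonzero sampling temperature and probably cluster the responses before counting them.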

I think if we reorient ourselves from the metaphor that MACHINES ARE INTELLIGENT to MACHINES ARE EARLY LIFE, we might find ourselves in a better position to understand what is currently going on in machine learning and make better decisions about what to do going forward.

Metaphorically, of course.

SBIRs

Need to set up a time to drop off the work box to get more drive space while I’m riding the Eastern Shore

Drop off the truck!

I think I have a chart that explains somewhat how red states can easily avoid action on gun violence. It’s the number of COVID-19 deaths vs. gun deaths in Texas. This is a state that pushed back very hard against any public safety measures for the pandemic, which was killing roughly 10 times more citizens than guns were. I guess the question is “how many of which people will prompt state action? For anything?”

For comparison purposes, Texas had almost 600,000 registered guns in 2022 out of a population of 30 million, or just about 2% of the population if distributed evenly (source). This is probably about 20 times too low, since according to the Pew Center, gun ownership in Texas is about 45%. That percentage seems to be enough people to prevent almost any meaningful action on gun legislation. Though that doesn’t prevent the introduction of legislation to mandate bleeding control stations in schools in case of a shooting event.
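The back-of-the-envelope numbers above, spelled out. This is only a sketch: it rounds "almost 600,000" up to 600,000, and it treats guns per resident and the ownership rate as comparable quantities, which they are not quite (one owner can register several guns).

```python
# Figures quoted in the paragraph above, rounded.
registered_guns = 600_000      # "almost 600,000" registered guns, 2022
population = 30_000_000        # Texas population
ownership_rate = 0.45          # ownership figure attributed to Pew above

# Share of the population covered if each registered gun had a different owner.
registered_share = registered_guns / population

# How far the registration figure undershoots the reported ownership rate.
undercount_factor = ownership_rate / registered_share

print(f"registered share: {registered_share:.1%}")
print(f"undercount factor: about {undercount_factor:.1f}x")
```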

So something greater than 2% and less than 45%. Just based on my research, I’d guess something between 10%-20% mortality would be acted on, as long as the demographics of the powerful were affected in those percentages.

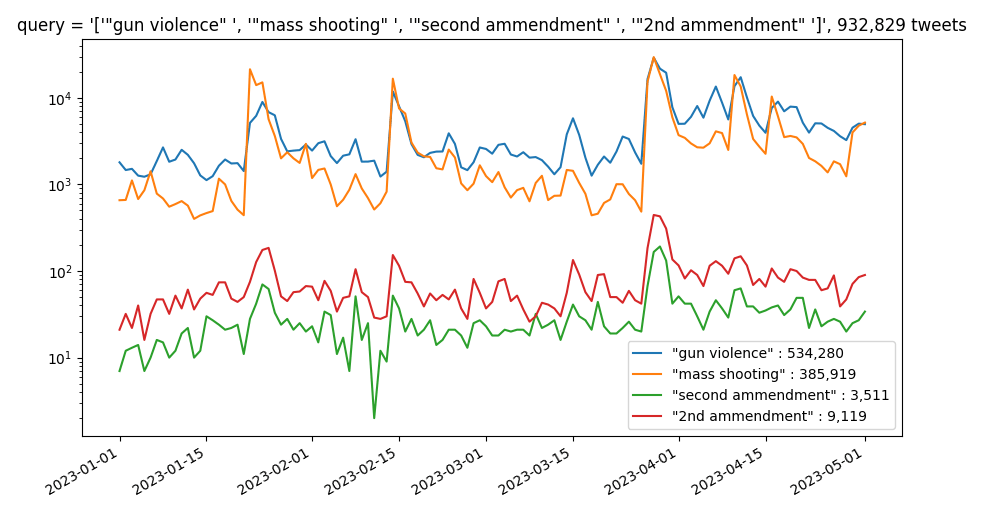

BTW, the wordpress bot just published this to twitter, so that part is still working? And since that is working, here’s a plot:

Gee, I wonder what happened where all those spikes are.

Jsonformer: A Bulletproof Way to Generate Structured JSON from Language Models.

SBIRs

GPT Agents
