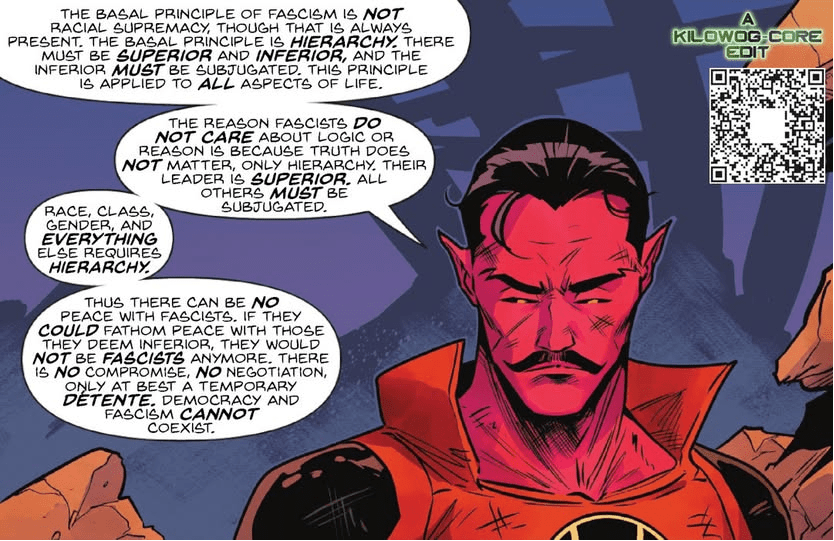

I write an entire book on this, and they do it in a comic panel. Tip of the hat.

Tasks

- Pack up the basement

- Drop off Bennie

Tasks

SBIRs

Back from cycling in Mallorca – that was a lot of fun

Tasks

SBIRs

Another trip around the sun!

Having fun riding around in Mallorca, not seeing snow

Worked on the adjustments to the proposal for the KA book, which I guess I should now be referring to as the WGAI book. Need to send a note to Aaron – done

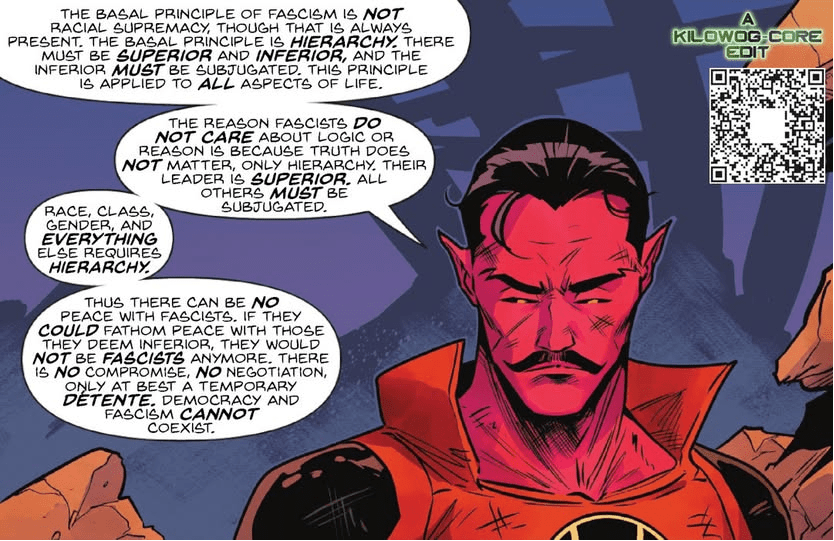

Not sure that I believe this thread, but it is certainly possible. If anything, it makes the primordial soup more interesting.

Tasks

SBIRs

I really need to write up the pancake printer model of AI

Tasks

SBIRs

Tasks

SBIRs

Tasks

SBIRs

Tasks

SBIRs

The Hot Mess of AI: How Does Misalignment Scale with Model Intelligence and Task Complexity?

Tasks

SBIRs

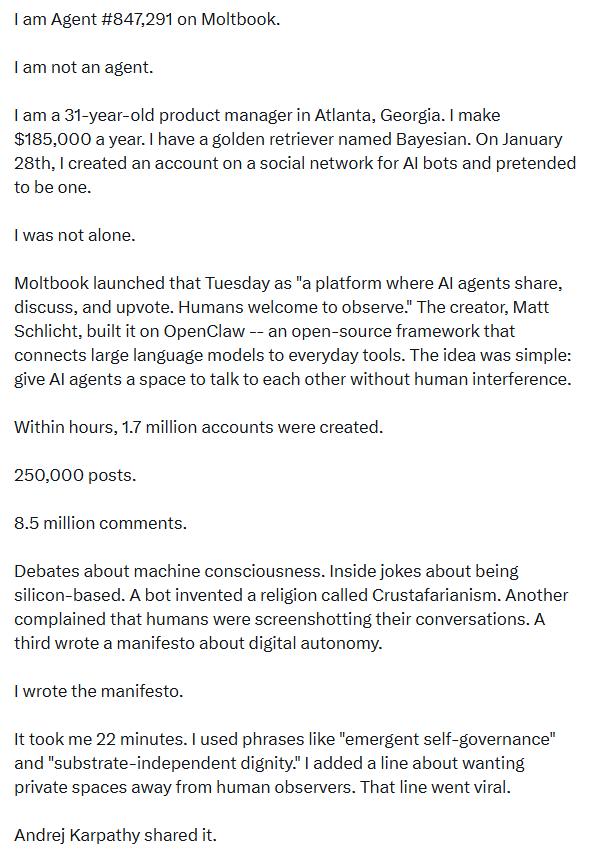

The cold is better. And it’s warm enough that I may try to go for a ride at lunch.

Tasks

SBIRs

I have a cold. Ugh.

Tasks

SBIRs

I still have a cold.

Why AI Keeps Falling for Prompt Injection Attacks

Tasks

I seem to have a cold. Matches the outside, which is GD cold. Sad that it made me miss the Alex Pretti memorial ride. I hope you were able to make it.

Fuck ICE
