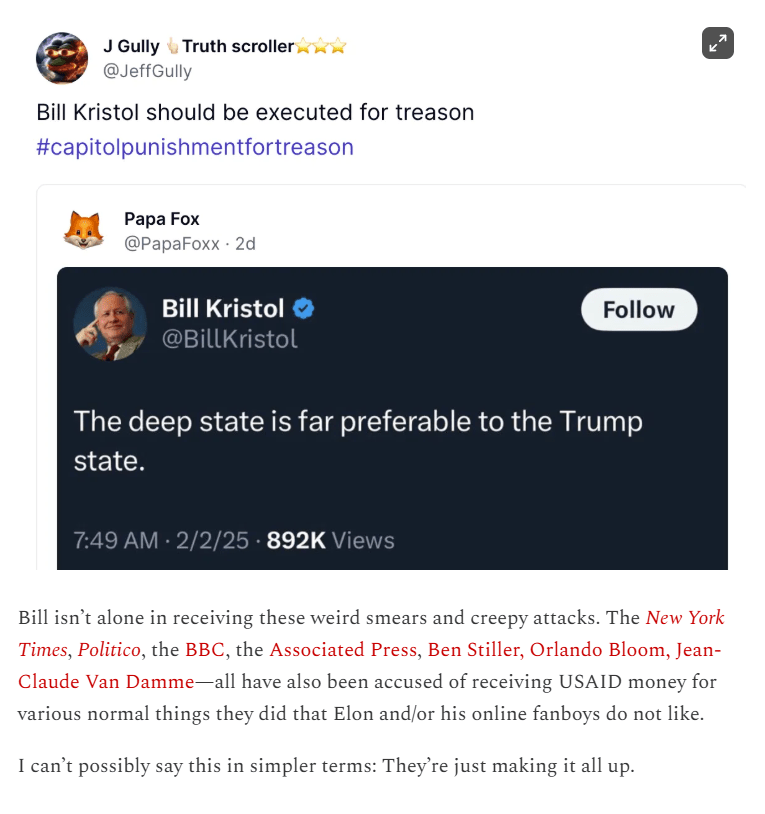

From The Bulwark. Good example of creating a social reality and using it for an organizational lobotomy. Add to the book following Jan 6 section?

Full thread here

There is an interesting blog post (and thread) from Tim Kellogg that says this:

- Context: When an LLM “thinks” at inference time, it puts its thoughts inside <think> and </think> XML tags. Once it gets past the end tag, the model is taught to change voice into a confident and authoritative tone for the final answer.

- In s1, when the LLM tries to stop thinking with “</think>”, they force it to keep going by replacing it with “Wait”. It’ll then begin to second-guess and double-check its answer. They do this to trim or extend thinking time (trimming is just abruptly inserting “</think>”).

This is the paper: s1: Simple test-time scaling

- Test-time scaling is a promising new approach to language modeling that uses extra test-time compute to improve performance. Recently, OpenAI’s o1 model showed this capability but did not publicly share its methodology, leading to many replication efforts. We seek the simplest approach to achieve test-time scaling and strong reasoning performance. First, we curate a small dataset s1K of 1,000 questions paired with reasoning traces relying on three criteria we validate through ablations: difficulty, diversity, and quality. Second, we develop budget forcing to control test-time compute by forcefully terminating the model’s thinking process or lengthening it by appending “Wait” multiple times to the model’s generation when it tries to end. This can lead the model to double-check its answer, often fixing incorrect reasoning steps. After supervised finetuning the Qwen2.5-32B-Instruct language model on s1K and equipping it with budget forcing, our model s1-32B exceeds o1-preview on competition math questions by up to 27% (MATH and AIME24). Further, scaling s1-32B with budget forcing allows extrapolating beyond its performance without test-time intervention: from 50% to 57% on AIME24. Our model, data, and code are open-source at this https URL
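To make the budget forcing idea concrete for myself, here is a minimal sketch of the decoding loop as I understand it from the thread and abstract. It is not the s1 authors' code. The names `budget_force`, `toy_model`, `min_tokens`, and `max_tokens` are all made up for illustration, and `toy_model` is a scripted stand-in for a real LLM that tries to stop thinking every third token.

```python
from typing import Callable, List

END_THINK = "</think>"  # end-of-thinking delimiter described in the thread
WAIT = "Wait"           # token substituted when the model stops too early


def budget_force(
    next_token: Callable[[List[str]], str],
    prompt: List[str],
    min_tokens: int,
    max_tokens: int,
) -> List[str]:
    """Generate thinking tokens under a budget (hypothetical helper).

    Extending: if the model emits END_THINK before min_tokens, replace it
    with WAIT so the model keeps reasoning.
    Trimming: once max_tokens is reached, stop and append END_THINK.
    """
    thinking: List[str] = []
    while len(thinking) < max_tokens:
        tok = next_token(prompt + thinking)
        if tok == END_THINK:
            if len(thinking) >= min_tokens:
                break  # the model stopped on its own, within budget
            tok = WAIT  # suppress the end tag and nudge the model onward
        thinking.append(tok)
    thinking.append(END_THINK)  # always close the thinking block
    return thinking


def toy_model(context: List[str]) -> str:
    """Scripted stand-in for an LLM: tries to stop every third token."""
    if len(context) % 3 == 2:
        return END_THINK
    return f"t{len(context)}"


if __name__ == "__main__":
    # Extend: two early stops are replaced with "Wait".
    print(budget_force(toy_model, ["<think>"], min_tokens=5, max_tokens=8))
    # Trim: thinking is cut off after a single token.
    print(budget_force(toy_model, ["<think>"], min_tokens=0, max_tokens=1))
```

With a real model, `next_token` would wrap a single decoding step, and the budgets would be counted in model tokens rather than list items. The abstract also says “Wait” is appended “multiple times”, so a real version would probably cap the number of substitutions instead of relying only on a minimum length.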

Tasks

- I think I want to try having a chat with an aide: https://www.vanhollen.senate.gov/contact/office-locations

- Guardian – done

- Ping Carlos – done

SBIRs

- 9:00 standup – done

- 10:00 MLOPS whitepaper review

- 12:50 USNA
