SBIRs

- 9:00 Standup – done!

- LM White Paper? This can wait, actually. Ron will need to do the MLOps, and he can't even get started until he returns from his BD tasks and trials.

GPT Agents

- Continue with paper – done!

- Ping Greg to discuss his comments

SBIRs

GPT Agents

Helene response hampered by misinformation, conspiracy theories

AI-Generated Pro-North Korean TikToks Are Also Bizarre Ads for Supplements

Sample ballot of Baltimore County

Grants

SBIRs

GPT Agents

```python
import re
from tkinter import Tk, filedialog
from typing import Dict, List


def load_file_to_list(filename: str) -> List[str]:
    """Read a text file and return its lines with surrounding whitespace stripped."""
    print("opening {}".format(filename))
    try:
        with open(filename, 'r') as file:
            return [line.strip() for line in file]
    except FileNotFoundError:
        print("Error: File '{}' not found".format(filename))
        return []


def save_list_to_file(l: List[str], filename: str):
    """Write each string in the list to the file, one per line."""
    print("writing {}".format(filename))
    try:
        with open(filename, 'w') as file:
            for s in l:
                file.write("{}\n".format(s))
    except FileNotFoundError:
        # raised when the target directory doesn't exist
        print("Error: File '{}' not found".format(filename))


root = Tk()
root.withdraw()  # hide the empty Tk window the file dialog would otherwise pop up
filename = filedialog.askopenfilename(filetypes=(("tex files", "*.tex"),), title="Load tex File")

if filename:
    filename2 = filename.replace(".tex", "_mod.tex")
    filename3 = filename.replace(".tex", "_mod.bib")

    # open the tex file
    l: List[str] = load_file_to_list(filename)

    p1 = re.compile(r"\\footnote{\\url{(.*?)}}")  # the URL inside \footnote{\url{...}}
    p2 = re.compile(r"https?://([^/]*)")  # the host part of the URL

    l2: List[str] = []
    cite_dict: Dict[str, str] = {}
    count = 1

    for s1 in l:  # get each line in the file
        for s2 in p1.findall(s1):  # find all the footnote urls
            m2 = p2.match(s2)  # pull out what we'll use for our cite
            if m2 is None:
                continue  # not an http(s) URL, so leave this footnote alone
            s3 = m2.group(1)
            if s3.startswith("www."):  # strip('www.') would also eat leading w's and dots
                s3 = s3[len("www."):]
            s3 = "{}_{}".format(s3, count)
            count += 1  # keep keys unique when a site is cited more than once
            olds = r"\footnote{\url{" + s2 + "}}"
            news = r"\cite{" + s3 + "}"
            s1 = s1.replace(olds, news, 1)
            cite_dict[s3] = s2
        l2.append(s1)
        print(s1)

    save_list_to_file(l2, filename2)  # write the modified text to a new file

    l2 = []
    for key, val in cite_dict.items():
        s = "@misc{" + key + ",\n"
        s += '\tauthor = "{Last, First}",\n'
        s += '\tyear = "2024",\n'
        s += '\thowpublished = "\\url{' + val + '}",\n'
        s += '\tnote = "[Online; accessed 07-October-2024]"\n}\n'
        print(s)
        l2.append(s)

    save_list_to_file(l2, filename3)  # write the citation text to a .bib file
```
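The part of the script worth testing is the footnote-to-cite substitution, and the file dialog gets in the way of that. Here is a minimal sketch that pulls the loop's logic into a pure function. The names `footnotes_to_cites`, `FOOTNOTE_URL`, and `HOST` are hypothetical, made up for illustration, and it assumes footnoted URLs are plain `http(s)` links sitting on one line.

```python
import re
from typing import Dict, List, Tuple

# Hypothetical names: same patterns as the script, pulled out as constants
FOOTNOTE_URL = re.compile(r"\\footnote{\\url{(.*?)}}")  # URL inside \footnote{\url{...}}
HOST = re.compile(r"https?://([^/]*)")  # host part of an http(s) URL


def footnotes_to_cites(lines: List[str]) -> Tuple[List[str], Dict[str, str]]:
    """Replace each \\footnote{\\url{...}} with \\cite{host_N}; return new lines and key->URL map."""
    out: List[str] = []
    cites: Dict[str, str] = {}
    count = 1
    for line in lines:
        for url in FOOTNOTE_URL.findall(line):
            m = HOST.match(url)
            if m is None:
                continue  # not an http(s) URL, so the footnote is left as-is
            host = m.group(1)
            if host.startswith("www."):
                host = host[len("www."):]
            key = "{}_{}".format(host, count)
            count += 1
            # replace only the first remaining occurrence so repeats get their own keys
            line = line.replace("\\footnote{\\url{" + url + "}}", "\\cite{" + key + "}", 1)
            cites[key] = url
        out.append(line)
    return out, cites


if __name__ == "__main__":
    lines, cites = footnotes_to_cites(
        [r"AI slop\footnote{\url{https://www.wired.com/story/x}} is everywhere."]
    )
    print(lines)  # ['AI slop\\cite{wired.com_1} is everywhere.']
    print(cites)  # {'wired.com_1': 'https://www.wired.com/story/x'}
```

Removing the prefix with `startswith` and slicing, rather than `strip('www.')`, matters because `strip` treats its argument as a set of characters: `'www.wired.com'.strip('www.')` gives `'ired.com'`. On Python 3.9+ `str.removeprefix("www.")` does the same job in one call.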

U.S. Wiretap Systems Targeted in China-Linked Hack

People Are Sharing Fake Hurricane Helene Photos for Profit and Political Gain

Where Facebook’s AI Slop Comes From

An Audacious Plan to Halt the Internet’s Enshittification and Throw It Into Reverse

TPI-LLM: Serving 70B-scale LLMs Efficiently on Low-resource Edge Devices

SBIRs

GPT Agents

Grants

Horny Robot Baby Voice: James Vincent on AI chatbots

SBIRs

GPT Agents

Build a Search Engine with GPT-3: this looks very good, if a bit dated. Deepset/Haystack appear to have continued development, so check out their website first.

SBIRs

Grants

GPT Agents

Larger and more instructable language models become less reliable

SBIRs

Grants

GPT Agents

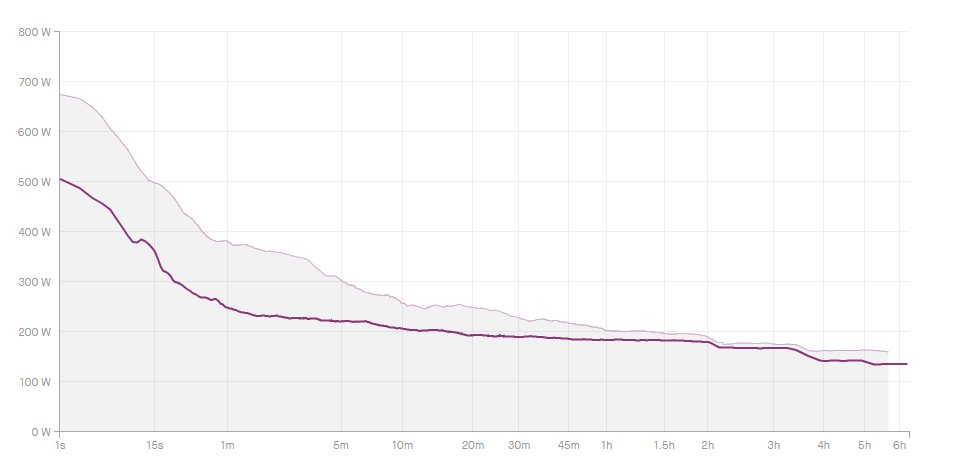

Good ride yesterday. Managed to eke out a 20mph average, but it was hard for the last 30 miles or so. Here’s the power curve:

You can see that there is a nice 200-ish watt output for 2 hours, then another hour+ at a bit below that, and then the last hour at a much lower output. That is exactly the way I felt. And it's just like most of my rides this year, with those two drops, which is really interesting. This one was just a bit more extreme, particularly the second drop. Part of that was warding off cramping, which is the problem I've been dealing with for the last couple of years, but most of it was that I really didn't have much left in my legs and was pretty much limping home.

Grants

That is a lot of rain:

Learned feature representations are biased by complexity, learning order, position, and more

More AI slop:

From https://bsky.app/profile/chrislhayes.bsky.social/post/3l55tbzk5ue2e. He continues: “This is is garbage! It's worst than useless, it's misleading! If you looked at it quickly you'd think Babe Ruth and Shohei also both threw left and batted right. Sure this is trivial stuff but the whole point is finding accurate information.”

Chores today

Grants

Really good example of potential sources of AI pollution as it applies to research. Vetting sources may become progressively harder as the AI is able to interpolate across more data. More detail is in the screenshot:

The alt text describes “Screenshot of a Bridgeman Images page, which shows what appears to be a 19th-century photograph of a man in a top hat, but which has appended metadata for a completely different work of art, namely, a late medieval manuscript, which features an image of the author Pierre Bersuire presenting his book to the King of France.”

SBIRs

This paper on “How and why to read, write, and publish academic research” just came across my feeds and it’s fantastic!

SBIRs

I just read this, and all I can think of is that this is exactly the kind of slow-speed attack that AI would be so good at. It's a really simple playbook. Run various versions against all upcoming politicians. Set up bank accounts in their names that pay for all this, and work on connecting them to their real accounts. All it takes is vast, inhuman patience: https://www.politico.com/news/2024/09/23/mark-robinson-porn-sites-00180545

SBIRs

Grants

GPT Agents

Autumnal equinox today. And it's the last push to move everything into the garage for the basement finishing.

Grants

I’d like to write an essay that compares John Wick to Field of Dreams as an example of what AI can reasonably be expected to be able to produce and what it will probably always struggle with.

This weekend is the last push to move everything into the garage for the basement finishing.

Grants
