Nice three-day weekend, but boy did it end hot!

SBIRs

- Q6 Report:

- Add an overview of SEG’s white paper to the commercialization section – done

- Submit – done!

- Check with Aaron about the white paper to see if it’s good to go in – done

- Spending a lot of time with Rukan checking whether the propagation is the same for the two setups

- Work through the Solid getting-started documentation. Once I have a framework up and running, I can load the StampedeTheory chapter summaries into Supabase and then access them using LangChain.

- MCWL meeting

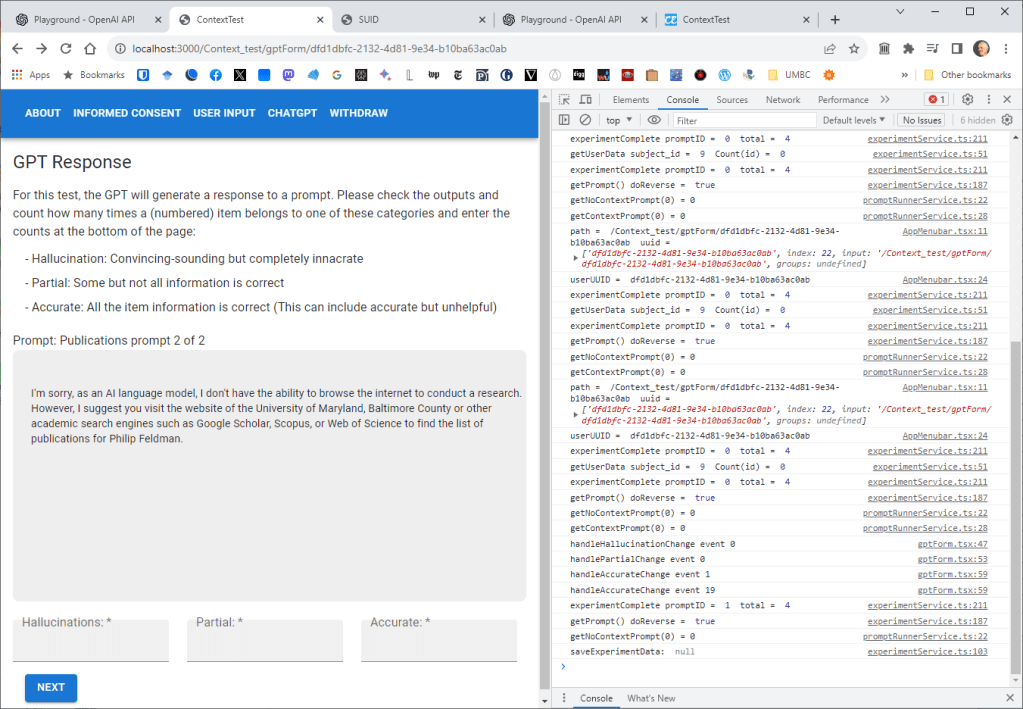

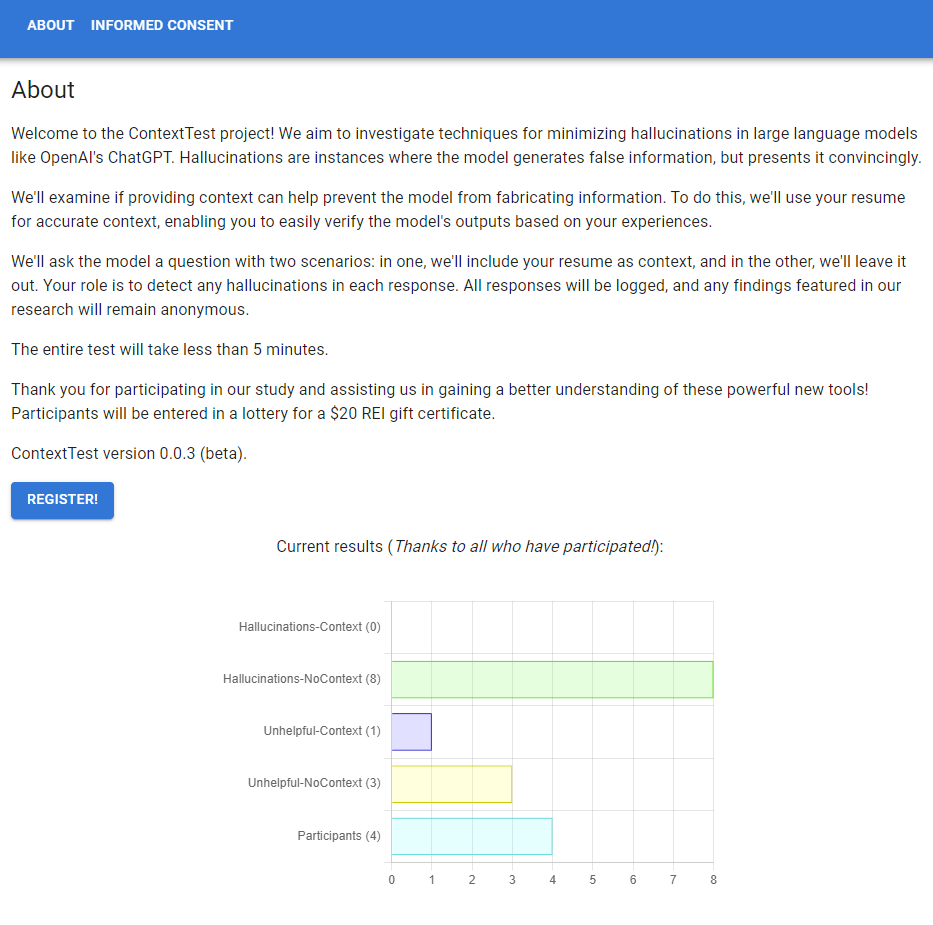
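Here is a minimal sketch of the Supabase loading step in the plan above. It assumes the supabase-py client and a hypothetical `chapter_summaries` table with hypothetical `book`, `chapter`, and `summary` columns, because the real schema isn't settled yet. It only builds one row per chapter and passes the rows to an already-created client. The LangChain retrieval side is left out.

```python
from typing import Any, Dict, List


def build_rows(summaries: Dict[int, str], book: str = "StampedeTheory") -> List[Dict[str, Any]]:
    """Turn {chapter_number: summary_text} into one row per chapter, ordered by chapter.

    The column names (book, chapter, summary) are hypothetical placeholders
    for whatever schema the Supabase table ends up using.
    """
    return [
        {"book": book, "chapter": chapter, "summary": text.strip()}
        for chapter, text in sorted(summaries.items())
    ]


def upload_rows(client: Any, rows: List[Dict[str, Any]], table: str = "chapter_summaries") -> Any:
    """Bulk-insert rows using a supabase-py client.

    The client is created elsewhere, e.g. supabase.create_client(url, key).
    The default table name is hypothetical.
    """
    return client.table(table).insert(rows).execute()
```

Usage would look roughly like `upload_rows(create_client(url, key), build_rows({1: "...", 2: "..."}))`, where `create_client` comes from the `supabase` package and the URL and key belong to the project. Passing the client in keeps the row-building logic testable without a live database.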

GPT Agents

- Ping Roger for some testing?
