I have papers to review by November 17

Speaking of reviews: Prometheus: Inducing Fine-grained Evaluation Capability in Language Models

- Recently, using a powerful proprietary Large Language Model (LLM) (e.g., GPT-4) as an evaluator for long-form responses has become the de facto standard. However, for practitioners with large-scale evaluation tasks and custom criteria in consideration (e.g., child-readability), using proprietary LLMs as an evaluator is unreliable due to the closed-source nature, uncontrolled versioning, and prohibitive costs. In this work, we propose Prometheus, a fully open-source LLM that is on par with GPT-4’s evaluation capabilities when the appropriate reference materials (reference answer, score rubric) are accompanied. We first construct the Feedback Collection, a new dataset that consists of 1K fine-grained score rubrics, 20K instructions, and 100K responses and language feedback generated by GPT-4. Using the Feedback Collection, we train Prometheus, a 13B evaluator LLM that can assess any given long-form text based on customized score rubric provided by the user. Experimental results show that Prometheus scores a Pearson correlation of 0.897 with human evaluators when evaluating with 45 customized score rubrics, which is on par with GPT-4 (0.882), and greatly outperforms ChatGPT (0.392). Furthermore, measuring correlation with GPT-4 with 1222 customized score rubrics across four benchmarks (MT Bench, Vicuna Bench, Feedback Bench, Flask Eval) shows similar trends, bolstering Prometheus’s capability as an evaluator LLM. Lastly, Prometheus achieves the highest accuracy on two human preference benchmarks (HHH Alignment & MT Bench Human Judgment) compared to open-sourced reward models explicitly trained on human preference datasets, highlighting its potential as an universal reward model. We open-source our code, dataset, and model at this https URL.

GPT Agents

- Had a good discussion with Jimmy and Shimei yesterday about bias and the chess model. In chess, white always moves first. That’s bias. Trying to get the model to have black move first is hard and maybe impossible. That case, along with chess moves that are more or less common, might be a good way to evaluate how successfully bias in a model can be treated without destroying the model.

- My personal thought is that there may need to be either “mapping functions” attached to the prompt vector that steer the machine in certain ways, or even entire models whose purpose is to detect and mitigate bias.

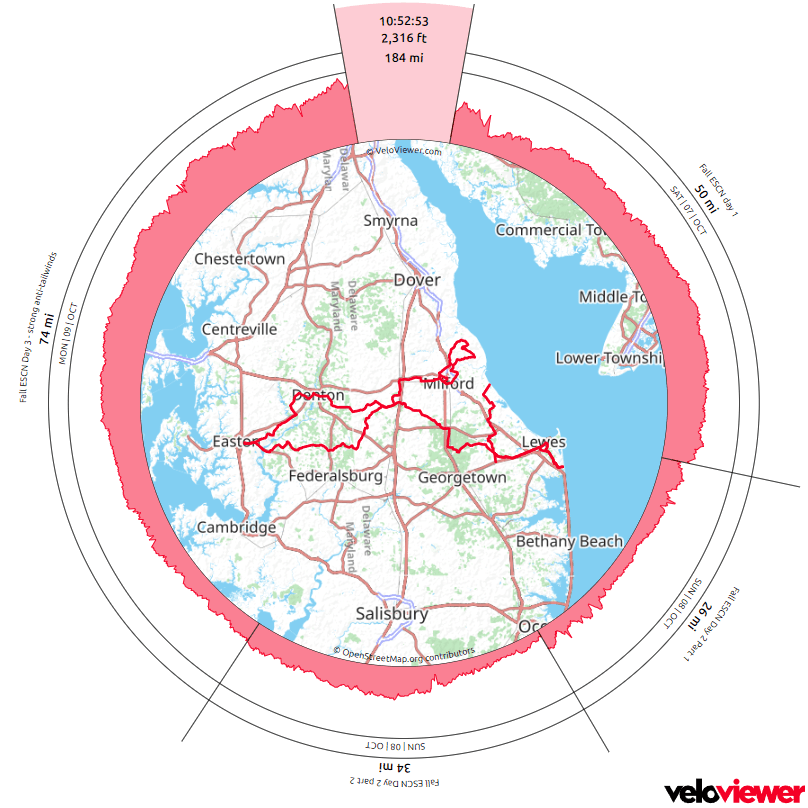

- Started on getting ecco data to build maps. I need to install the project so that it can be edited, since I’m going to have to tweak. Here’s how: pip.pypa.io/en/latest/topics/local-project-installs
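
For reference, a minimal sketch of what that editable install looks like, assuming the project has been cloned locally. The directory name `ecco` and the helper `editable_install_command` are placeholders of mine, not anything from the project. The function only builds the pip command and does not run it:

```python
import sys
from pathlib import Path


def editable_install_command(project_dir):
    """Build the pip command for an editable (development) install."""
    # Resolve to an absolute path so the command works from any directory.
    project = Path(project_dir).resolve()
    # "-e" (--editable) links the installed package to the source tree,
    # so local edits take effect without reinstalling.
    return [sys.executable, "-m", "pip", "install", "-e", str(project)]


if __name__ == "__main__":
    # "ecco" is a placeholder for wherever the local clone lives.
    print(" ".join(editable_install_command("ecco")))
```

Passing the returned list to `subprocess.run(cmd, check=True)` would perform the install. Using `sys.executable -m pip` rather than a bare `pip` keeps the install in the same environment as the running interpreter.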

SBIRs

- Need to add references to figures in the white paper – done

- 10:30 IPT meeting – just getting ducks in a row
