Need to set up my new reviews on EasyChair. Done! Now I need to read the things!

Leaked Files from Putin’s Troll Factory: How Russia Manipulated European Elections

- Leaked internal documents from a Kremlin-controlled propaganda center reveal how a well-coordinated Russian campaign supported far-right parties in the European Parliament elections — and planted disinformation across social media platforms to undermine Ukraine.

LLMs Will Always Hallucinate, and We Need to Live With This

- As Large Language Models become more ubiquitous across domains, it becomes important to examine their inherent limitations critically. This work argues that hallucinations in language models are not just occasional errors but an inevitable feature of these systems. We demonstrate that hallucinations stem from the fundamental mathematical and logical structure of LLMs. It is, therefore, impossible to eliminate them through architectural improvements, dataset enhancements, or fact-checking mechanisms. Our analysis draws on computational theory and Gödel’s First Incompleteness Theorem, which references the undecidability of problems like the Halting, Emptiness, and Acceptance Problems. We demonstrate that every stage of the LLM process (from training data compilation to fact retrieval, intent classification, and text generation) will have a non-zero probability of producing hallucinations. This work introduces the concept of Structural Hallucination as an intrinsic nature of these systems. By establishing the mathematical certainty of hallucinations, we challenge the prevailing notion that they can be fully mitigated.

SBIRs

- 9:00 Standup

- 10:00 VRGL/GRL meeting

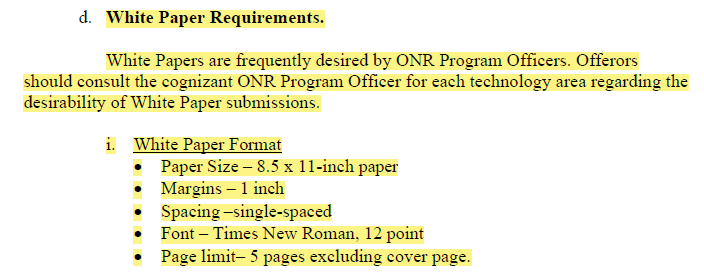

- 11:30 White paper fixes. So I’ve found some conflicts between various ONR whitepaper requests. Here’s the one that I’ve been working to. Notice that there is no mention of paper structure other than the cover page. This concerns me because I have a page of references:

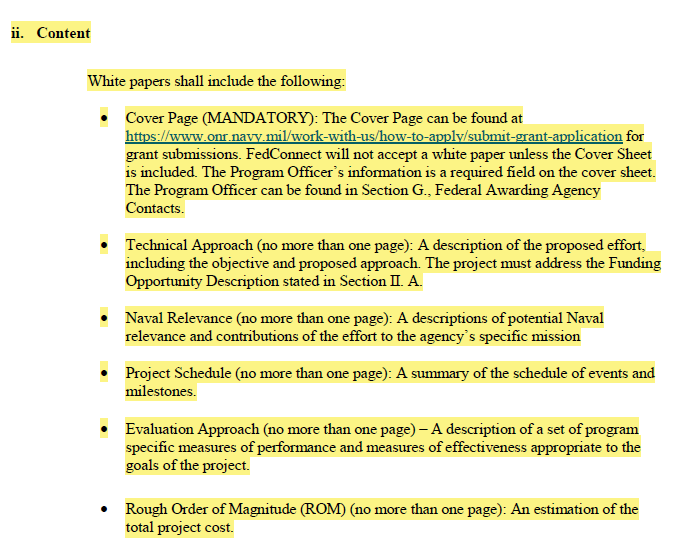

- Now, in a previous ONR request, there is much more specificity:

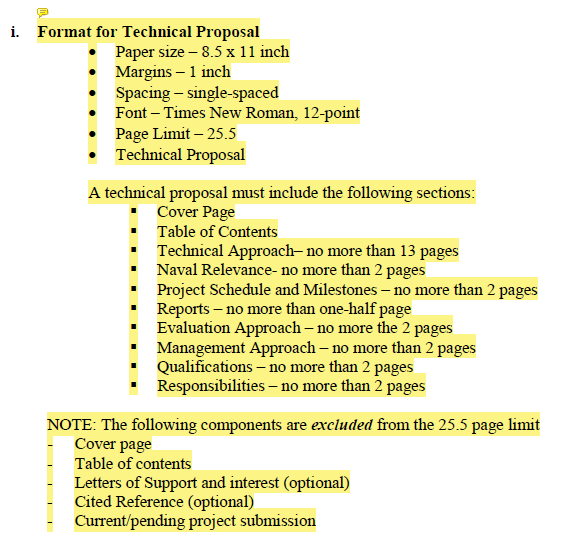

- Note that there is still no mention of whether references count as part of the white paper. To get some insight into that, you have to look at the Format for Technical Proposal in the second document:

- At this point we are two degrees of separation from the original call (a different request, which is an FOA rather than a BAA, and a technical proposal rather than a white paper).

- Regardless, I’m leaning towards reformatting the paper to be more in line with the FOA, following its structure and assuming the references don’t count.

- I’d keep the finalized version of this white paper in case they come back with a request for a 5-page paper including references, but also write a more FOA-compliant version that shares a lot of the content.

- 2:30 AI ethics. Approved a project!

GPT Agents

- Work on Background section. Some good, slightly half-assed progress
