At approximately 5:30 this morning, my trusty De’Longhi espresso machine passed away trying to make… one… last… cup. That machine has made thousands of espressos, and was one of my pillars of support during COVID.

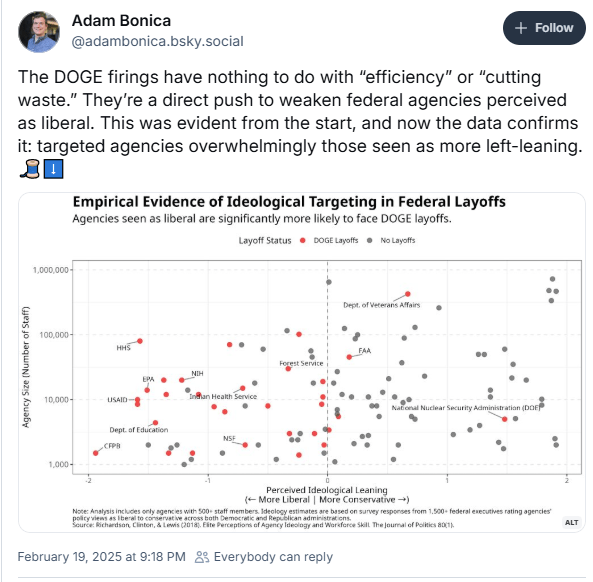

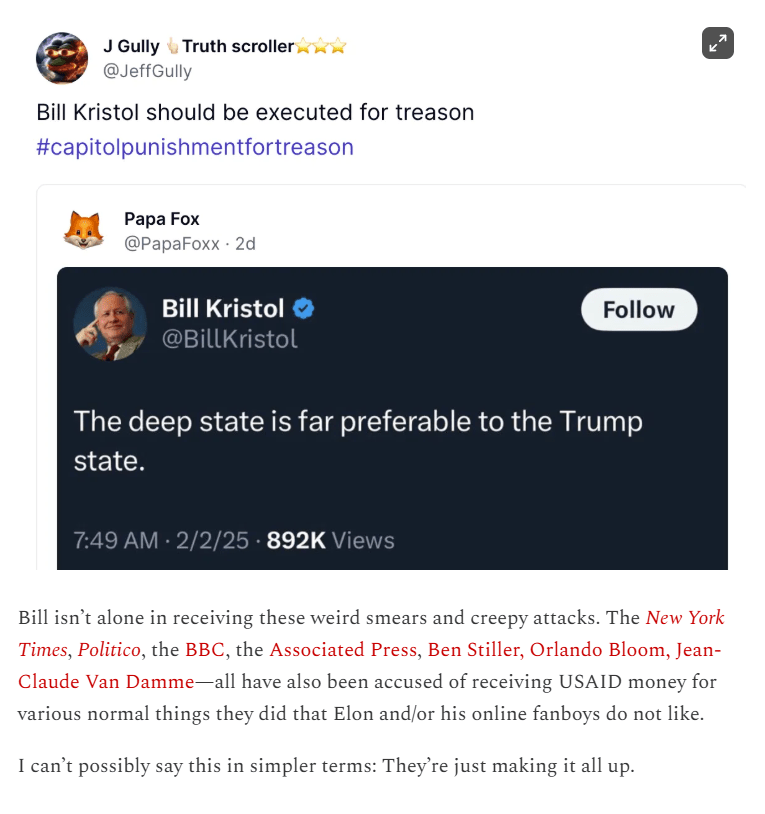

Good thread on targeted attacks

Trump Dismantles Government Fight Against Foreign Influence Operations

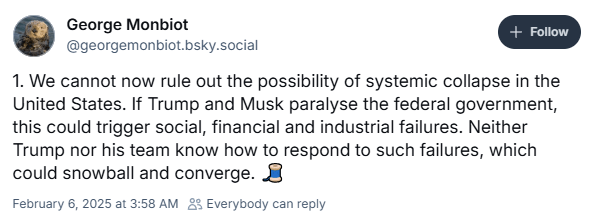

- Experts are alarmed that the cuts could leave the United States defenseless against covert foreign influence operations and embolden foreign adversaries seeking to disrupt democratic governments.

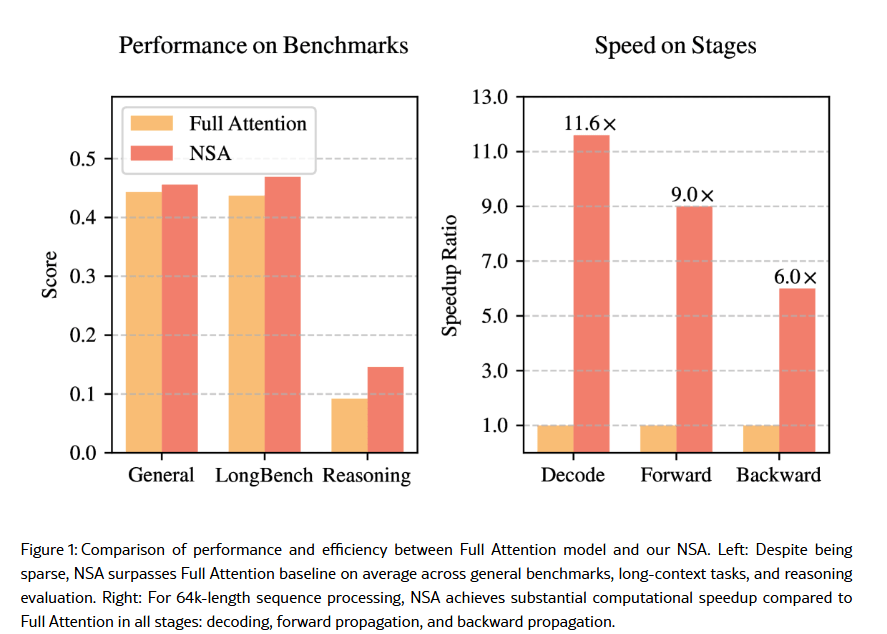

GPT Agents

- More slides and conclusions on KA. I found a nice set of slides in INCAS here

- Reach out to talk to Brian Ketler to interview for the book – done

- Add something to the introduction that describes the difference between “weaponization” (e.g. 9/11) and “weapons-grade” (e.g. Precision Guided Munitions) – added a TODO

SBIRs

- 9:00 standup

- Now that I think I fixed my angle sign bug, back to getting the demo to work – whoops, can’t get all the mapping to work because the intersection calculations happen in a different, offset coordinate frame. Wound up just finding the index of the closest coordinate on the curve and using that. Good enough for the demo.

- 12:50 USNA – Meh. These guys have no long-term memory

- 4:30 Book club – cancelled for this week
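
For reference, the closest-coordinate workaround from the demo item above can be sketched roughly like this. It is a minimal illustration and not the actual demo code: it assumes the curve is a list of 2D sample points, and the names `closest_index`, `curve`, and `query` are made up for the example.

```python
import math


def closest_index(curve, point):
    """Return the index of the curve sample nearest to `point`.

    Brute-force scan using Euclidean distance. `curve` is assumed to be
    a non-empty sequence of (x, y) tuples in the same frame as `point`.
    """
    return min(range(len(curve)), key=lambda i: math.dist(curve[i], point))


# Hypothetical sampled curve: a quarter circle of radius 1, 11 samples
curve = [(math.cos(i * math.pi / 20), math.sin(i * math.pi / 20)) for i in range(11)]

# Hypothetical query point, e.g. an intersection mapped back into the curve's frame
query = (0.8, 0.7)
idx = closest_index(curve, query)
print(idx, curve[idx])
```

The linear scan is fine for a demo-sized curve. It only snaps to existing samples, so the error is bounded by the sample spacing, and it still requires the query point to be expressed in the same coordinate frame as the curve.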
