- We’ve seen significant gains from applying these best practices and adopting our canonical tools whenever possible, and we hope that this guide, along with the prompt optimizer tool we’ve built, will serve as a launchpad for your use of GPT-5. But, as always, remember that prompting is not a one-size-fits-all exercise – we encourage you to run experiments and iterate on the foundation offered here to find the best solution for your problem.

Author Archives: pgfeldman

Phil 8.29.2025

Contrastive Representations for Temporal Reasoning

- In classical AI, perception relies on learning spatial representations, while planning—temporal reasoning over action sequences—is typically achieved through search. We study whether such reasoning can instead emerge from representations that capture both spatial and temporal structure. We show that standard temporal contrastive learning, despite its popularity, often fails to capture temporal structure, due to reliance on spurious features. To address this, we introduce Contrastive Representations for Temporal Reasoning (CRTR), a method that uses a negative sampling scheme to provably remove these spurious features and facilitate temporal reasoning. CRTR achieves strong results on domains with complex temporal structure, such as Sokoban and Rubik’s Cube. In particular, for the Rubik’s Cube, CRTR learns representations that generalize across all initial states and allow solving the puzzle much faster than BestFS—though with longer solutions. To our knowledge, this is the first demonstration of efficiently solving arbitrary Cube states using only learned representations, without hand-crafted search heuristics.
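For reference, the "standard temporal contrastive learning" the abstract critiques is usually an InfoNCE-style objective: a state and a later state from the same trajectory form a positive pair, and the other pairs in the batch act as negatives. The sketch below is a minimal NumPy version of that baseline, assuming the embeddings are already computed. It does not reproduce CRTR's modified negative sampling, which the abstract does not spell out, and the temperature value is an arbitrary choice.

```python
import numpy as np

def temporal_infonce(anchors: np.ndarray, futures: np.ndarray, temperature: float = 0.1) -> float:
    """Baseline temporal InfoNCE loss (not CRTR).

    anchors, futures: (batch, dim) embeddings of states s_t and s_{t+k}.
    Row i of `futures` is the positive for row i of `anchors`; every other
    row in the batch is treated as a negative (in-batch negatives).
    """
    # L2-normalize so dot products are cosine similarities
    a = anchors / np.linalg.norm(anchors, axis=1, keepdims=True)
    f = futures / np.linalg.norm(futures, axis=1, keepdims=True)
    logits = a @ f.T / temperature  # (batch, batch) similarity matrix
    # Numerically stable row-wise log-softmax
    logits = logits - logits.max(axis=1, keepdims=True)
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    # Positives sit on the diagonal; loss is their mean negative log-probability
    return float(-np.mean(np.diag(log_probs)))
```

Identical anchor/future pairs should score a much lower loss than unrelated pairs. The abstract's point is that a model can drive this loss down using spurious features rather than genuine temporal structure.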

Tasks

- 2FA – done

- Bills – done

- Chores – done

- Dishes – done

- Water plants – done

- 11:30 Atwaters – done

- 3:30 – to DC – well, kind of. I was running late, but had the bike all loaded up and was just about to get onto I-95 when I realized that I’d forgotten my helmet. Turned around and went home.

Phil 8.28.2025

Setting up the COST proposal project (CA24150 – participation instructions). Need to reach out to a lot of people to build the team.

Send note to No Starch about the P&P. 80 tickets at $15.00-ish for a Tuesday evening event. Done

- For Generation Z, born between 1997 and 2012, social media – especially YouTube, TikTok, Instagram and Snapchat – has become their source of information about the world, eclipsing traditional news outlets. In a survey of more than 1,000 young people ages 13 to 18, 8 in 10 said they encounter conspiracy theories in their social media feeds each week, yet only 39% reported receiving instruction in evaluating the claims they saw there.

- We narrowed the focus of our program to skills essential to being an informed citizen, such as “lateral reading” − that is, using the full context of the internet to judge the quality of a claim, identify the people or organizations behind it and assess their credibility. Rather than fixate solely on the message, we taught students to vet the messenger: What organizations stand behind the claim? Does the source of the claim have a conflict of interest? What are the source’s credentials or expertise?

SBIRs

- 9:00 standup – done

- Put in a bunch of text about MORS WG30 activities at their annual symposium in the BP. Need to add a bit more just to mention the typical number of sessions at these events – done. Seems good enough to send along

- 4:00 SEG meeting – quick. Everything is on schedule

- Did laptop return things

Phil 8.27.2025

[2506.02153v1] Small Language Models are the Future of Agentic AI

- Large language models (LLMs) are often praised for exhibiting near-human performance on a wide range of tasks and valued for their ability to hold a general conversation. The rise of agentic AI systems is, however, ushering in a mass of applications in which language models perform a small number of specialized tasks repetitively and with little variation.

Here we lay out the position that small language models (SLMs) are sufficiently powerful, inherently more suitable, and necessarily more economical for many invocations in agentic systems, and are therefore the future of agentic AI. Our argumentation is grounded in the current level of capabilities exhibited by SLMs, the common architectures of agentic systems, and the economy of LM deployment. We further argue that in situations where general-purpose conversational abilities are essential, heterogeneous agentic systems (i.e., agents invoking multiple different models) are the natural choice. We discuss the potential barriers for the adoption of SLMs in agentic systems and outline a general LLM-to-SLM agent conversion algorithm.

Our position, formulated as a value statement, highlights the significance of the operational and economic impact even a partial shift from LLMs to SLMs is to have on the AI agent industry. We aim to stimulate the discussion on the effective use of AI resources and hope to advance the efforts to lower the costs of AI of the present day. Calling for both contributions to and critique of our position, we commit to publishing all such correspondence at this https URL.
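The "heterogeneous agentic system" the paper argues for can be pictured as a dispatcher that sends routine, narrow calls to a small model and reserves a large model for open-ended conversation. The sketch below is a hypothetical illustration only. The model names, the task list, and the routing rule are all made up, and it is not the paper's LLM-to-SLM conversion algorithm.

```python
from dataclasses import dataclass

# Hypothetical model identifiers; substitute whatever small and large models are deployed.
SMALL_MODEL = "slm-specialist"
LARGE_MODEL = "llm-generalist"

# Hypothetical set of narrow, repetitive task types assumed to suit a small model.
SPECIALIZED_TASKS = {"extract_fields", "classify_intent", "format_json", "summarize_ticket"}

@dataclass
class AgentCall:
    task_type: str
    needs_open_conversation: bool = False

def choose_model(call: AgentCall) -> str:
    """Default to the small model; escalate only for general conversation
    or for task types not on the specialized list."""
    if call.needs_open_conversation:
        return LARGE_MODEL
    if call.task_type in SPECIALIZED_TASKS:
        return SMALL_MODEL
    return LARGE_MODEL

if __name__ == "__main__":
    for call in [AgentCall("format_json"), AgentCall("plan_trip", needs_open_conversation=True)]:
        print(call.task_type, "->", choose_model(call))
```

The default-to-small rule reflects the paper's economic argument: most agent invocations are assumed to be repetitive, so the expensive model is the exception rather than the norm.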

Getting back into the academic proposal swing. Seeing what’s available

SBIRs

- 10:00 Emerson meeting

- 4:00 ADS tagup

Phil 8.26.2025

From https://bsky.app/profile/segyges.bsky.social/post/3lxclvdliks2p

npj Complexity is an open access, international, peer-reviewed journal dedicated to publishing the highest quality research on complex systems and their emergent behavior at multiple scales.

Tasks

- Profs and Pints tonight! Get there 45 minutes early and bring the laptop

- Start updating the calls section on the Cognitive Commons template. See what makes sense for the first submission

- New bushes today?

SBIRs

- 9:00 Sprint planning. Done

- Start on Q2 report. Started. Changed the template to the revised (and as yet not approved in writing) SOW, and created a Q2_text folder with the blanks

- Reworking the tasking – done

Phil 8.25.2025

Had an annoying morning trying to get VFS to work properly

Tasks

- Slides and timings for P&P talk – done? War Room takes 8 minutes to read, so I have about 22 minutes to talk. Good discussion with Jimmy to work out final details

- Dead shrubs? Shrubs are gone, but replacements are not in yet

- Powerwashing quote? Soon

SBIRs

- 9:00 Sprint demos – done

- 12:30 Survey review – I dunno.

- 3:00 Sprint planning – two stories: quarterly status report and the AW proposal ROI section – delayed

Phil 8.22.2025

September is closing in fast

Tasks

- Read this page on associationism

- Send Vanessa new vignette – done

- Bills – done

- Chores – done

- Mow – done

- Weed – done

- Laundry – mostly done

- Vacuum and organize shop – done for now

- Dishes – done

- Research storage

- Metal to Aaron – done

- Read through stories for P&P and time them – first pass and editing

SBIRs

- Write up notes from yesterday and see when the next report is due. It will have the new tasks and numbering – Done

Phil 8.21.2025

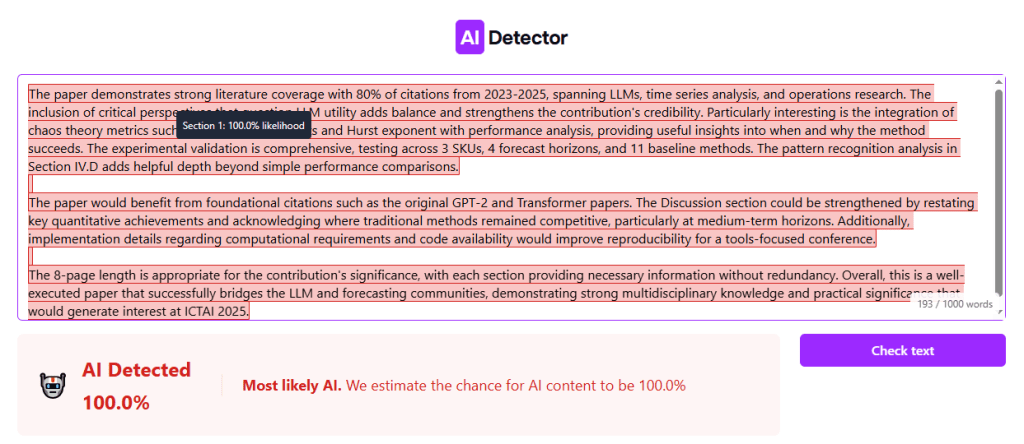

Saw my first AI review today. Pretty sad:

Tasks

- Start proposal 14 review – done! I HAVE NO MORE REVIEWS TO DO!

- Submit No Starch Press proposal – DONE! Need to look for other possible publishers though. Still, it’s nice to get this far.

- Send Vanessa new vignette

- Research storage

SBIRs

- 9:00 standup. See if Ron needs some tag-teaming – not yet

- 4:00 SEG meeting – need to write up the notes. Basically, Ron and John are going to try and get interprocess communication going.

- Work on the A3IW proposal – finished! Well, a first pass anyway. I think a “potential market” section might help

- Circle around with Aaron to see if we should really write a WH/AI proposal – pinged

Phil 8.20.2025

WtaF? ‘I Want to Try and Get to Heaven’: Trump Gets Reflective on ‘Fox & Friends’

Tasks

- There is some back and forth on the review of proposal 7. It may just be a no-cost extension, which is an easy “yes.” Ok, got some direction. I need to rewrite and submit – done

- Submit proposal 13 review – done

- Start proposal 14 review – tomorrow

- Work on No Starch Press proposal – done. I’ll send it in tomorrow

- Roll in KA edits – done!

- Research storage

SBIRs

- Nothing on the schedule today. See if Ron can get results to share on Thursday – not really. He didn’t look up solutions and wrote himself into a corner. Going to let him work himself out of it. He really needs to stop doing this

- Work on the A3IW proposal – nope

- Circle around with Aaron to see if we should really write a WH/AI proposal – pinged

Phil 8.19.2025

Pinged Carlos back.

No Starch Press pinged back! I need to put together a proposal after finishing the reviews

Tasks

- Review proposal 13 – done. May need to revisit 7, but I’m confused for now

- Kyle today? Yup, the torch, the brake, and the endmill are gone. The lathe is going to take some more work – it’s a bit over 1,000 lbs

SBIRs

- 9:00 standup

- Work with Aaron on the white paper – started. It’s going to be more of a business proposal. I think I’m going to run it through Gemini to see if it can come up with a good framework because frankly, I just don’t want to do any more BD that won’t go anywhere.

Phil 8.18.2025

President Trump’s War on “Woke AI” Is a Civil Liberties Nightmare | Electronic Frontier Foundation

- The White House’s recently-unveiled “AI Action Plan” wages war on so-called “woke AI”—including large language models (LLMs) that provide information inconsistent with the administration’s views on climate change, gender, and other issues. It also targets measures designed to mitigate the generation of racial and gender biased content and even hate speech. The reproduction of this bias is a pernicious problem that AI developers have struggled to solve for over a decade.

- A new executive order called “Preventing Woke AI in the Federal Government,” released alongside the AI Action Plan, seeks to strong-arm AI companies into modifying their models to conform with the Trump Administration’s ideological agenda.

Russia is quietly churning out fake content posing as US news – POLITICO

- According to misinformation tracker NewsGuard, the campaign — which has been tracked by Microsoft’s Threat Analysis Center as Storm-1679 since at least 2022 — takes advantage of high-profile events to pump out fabricated content from various publications, including ABC News, BBC and most recently POLITICO.

- “They are just throwing spaghetti, trying to see what’s going to stick on a wall,” said Ivana Stradner, a researcher on Russia at the Foundation for Defense of Democracies, a Washington think tank.

Build a Small Language Model (SLM) From Scratch

Tasks

- Write review for proposal 7 – done. That was a lift.

- Machine shop pickup? Tomorrow

- Work on rolling in edits. Finished the War Room story

- Put together a proposal for No Starch Press

SBIRs

- Work with Ron a bit?

- Overleaf doc A3IW

Phil 8.17.2025

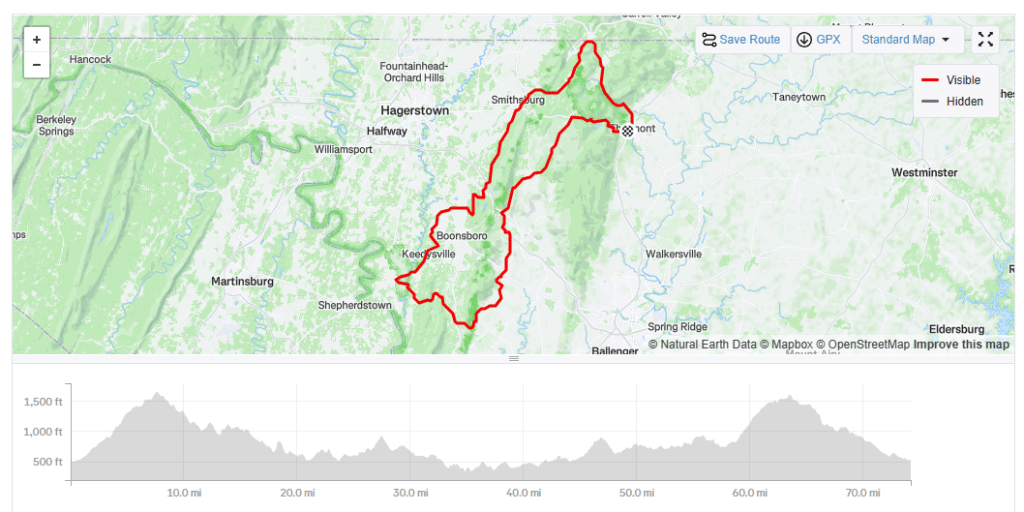

Great ride yesterday, even if we did get rained on in the middle

Tasks

- Switch UPS – done

- Bills – done

- Chores – done

- Dishes – done

- Schedule power wash – started

- Read Proposal 14 – done

- Ping Nathan – done

- Wash truck – done. Shiny!

- Picked up the recumbent

- Put Peter Turchin in P33 somewhere. Maybe simulation?

- Ping Carlos to see if there will be a recording of Trust in human-technology teams: from decision-support to Generative AI – done

Phil 8.15.2025

Tasks

- Switch UPS

- Bills – done

- Chores

- Dishes

- Weed

- Mow

- Schedule power wash

- Read Proposal 14

- Peter Turchin is a complexity scientist who works in the field of historical social science that he and his colleagues call: Cliodynamics

- His research interests lie at the intersection of social and cultural evolution, historical macrosociology, economic history, mathematical modeling of long-term social processes, and the construction and analysis of historical databases.

- How do human societies evolve? Why do we see such a staggering degree of inequality in effectiveness of governance and economic performance among nations?

- Currently he investigates a set of broad and interrelated questions: In particular, what processes explain the evolution of ultrasociality—our capacity to cooperate in huge anonymous societies of millions?

- Peter’s main research effort at the moment is directed at coordinating the Seshat Databank —a massive historical database of cultural evolution that is gathering and systematically organizing the vast amount of knowledge about past human societies, held collectively by thousands of historians and archaeologists.

Phil 8.14.2025

[2507.21206] Agentic Web: Weaving the Next Web with AI Agents

- The emergence of AI agents powered by large language models (LLMs) marks a pivotal shift toward the Agentic Web, a new phase of the internet defined by autonomous, goal-driven interactions. In this paradigm, agents interact directly with one another to plan, coordinate, and execute complex tasks on behalf of users. This transition from human-driven to machine-to-machine interaction allows intent to be delegated, relieving users from routine digital operations and enabling a more interactive, automated web experience. In this paper, we present a structured framework for understanding and building the Agentic Web. We trace its evolution from the PC and Mobile Web eras and identify the core technological foundations that support this shift. Central to our framework is a conceptual model consisting of three key dimensions: intelligence, interaction, and economics. These dimensions collectively enable the capabilities of AI agents, such as retrieval, recommendation, planning, and collaboration. We analyze the architectural and infrastructural challenges involved in creating scalable agentic systems, including communication protocols, orchestration strategies, and emerging paradigms such as the Agent Attention Economy. We conclude by discussing the potential applications, societal risks, and governance issues posed by agentic systems, and outline research directions for developing open, secure, and intelligent ecosystems shaped by both human intent and autonomous agent behavior. A continuously updated collection of relevant studies for agentic web is available at: this https URL.

Tasks

- Finish reading proposal 13 (Done! Better than 7. Much better detail) and read 14 before writing anything

- Remove lines from under the deck

- Start making a list of agents (Nomad Century, Gutenberg, Sentient Cell, Bomber Mafia, etc.)

- 10:30 and 3:00 for shop pickup – everything ran late, but the saw, the welder, the grinders, and a shop vac are gone

SBIRs

- 9:00 Standup – done

- Work with Ron on socket code – done! Works!

- FedEx shipment today maybe? Managed to change the delivery options. They still didn’t leave it

- 4:00 SEG meeting – skipped for garage-emptying

Phil 8.13.2025

I need husband: AI beauty standards, fascism and the proliferation of bot driven content

- Generative AI is proliferating on social media at an alarming rate. Images are generated and disseminated with political agendas, particularly in right-wing spheres. These AI-generated images often depict soldiers, sad children, or interior designs. Of particular note are the catfishing-style “I need husband” posts featuring women with impossible proportions, ostensibly seeking partners. These chimeric creations are bot-driven posts designed to farm engagement, but they also hint at something more sinister. These posts reflect a mechanical view of the male gaze. However, an AI cannot truly comprehend the male gaze, and in its attempt to mimic it, it creates beings beyond understanding. This research aims to analyze the patterns in these images, explore posting methods and engagement, and examine the meaning behind the images. It culminates in an artistic piece in progress critiquing both the images and their creation and dissemination methods. By rendering these AI-generated images as classical Greek statues through Gaussian splatting and 3D printing, I aim to create a visual commentary on the intersection of AI, the male gaze and fascism. This artistic approach not only highlights the absurdity of these digital constructs but also invites viewers to critically examine AI’s role in shaping contemporary perceptions of beauty and gender roles.

- Over the past week, from 4 to 10 August, the Russian military deployed more than 1,000 aerial bombs and nearly 1,400 kamikaze drones against Ukraine. The current record is 728 drones and 13 missiles sent in a single night in July, most directed at the western city of Lutsk. By autumn, German experts predict Moscow could send 2,000 drones a day.

- Ukrainian manufacturers have been working on a solution, too: a cheap, scalable interceptor drone that can knock out incoming Shaheds. Last month Zelenskyy toured a factory where they are being made. “A clear task has been set for the manufacturers: Ukraine must be capable of deploying at least 1,000 interceptors per day within a defined timeframe,” he told engineers and officials, saying they “protected lives”.

- My thoughts on where this is going from 2023

Tasks

- Read proposals 13 and 14 before writing anything

- Remove lines from under the deck

- Start making a list of agents (Nomad Century, Gutenberg, Sentient Cell, Bomber Mafia, etc.)

SBIRs

- Laptop crap

GPT Agents

- 2:30 meeting

- Talk about all the AI in the papers I reviewed, and how most of it was good, with one “slop” paper. What we need in many cases is just an AI slop detector, and there are particular patterns in slop – local repetition, drift, etc. Maybe trajectories over sentence-level embeddings?

- Also, how the bot invasion of social media and the robot war for Ukraine are distorted reflections of each other.
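One way to make "trajectories over sentence-level embeddings" concrete is to treat a document as a path through embedding space and compute simple statistics along it. The sketch below assumes per-sentence embeddings are already available from some sentence-embedding model. The two features are guesses at what "local repetition" and "drift" might look like, not a validated slop detector, and any thresholds would have to be tuned on real examples.

```python
import numpy as np

def trajectory_features(embeddings: np.ndarray) -> dict:
    """Summarize a document as a trajectory through sentence-embedding space.

    embeddings: (n_sentences, dim) array, one row per sentence, in order.
    Returns two illustrative (hypothetical) signals:
      local_repetition: mean cosine similarity between consecutive sentences
                        (high values suggest the text keeps restating itself)
      drift:            cosine distance between the mean embeddings of the
                        first and last thirds (high values suggest wandering)
    """
    if embeddings.shape[0] < 3:
        raise ValueError("need at least 3 sentences")
    unit = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    consecutive = np.sum(unit[:-1] * unit[1:], axis=1)  # cosine of each adjacent pair
    third = len(unit) // 3
    start = unit[:third].mean(axis=0)
    end = unit[-third:].mean(axis=0)
    cos = start @ end / (np.linalg.norm(start) * np.linalg.norm(end))
    return {"local_repetition": float(consecutive.mean()), "drift": float(1.0 - cos)}
```

A text that repeats the same sentence scores high repetition and near-zero drift, while one that hops to an unrelated topic every sentence scores low repetition and high drift. Slop might show up as an unusual combination of the two.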
