Add this to the section on soft totalitarianism:

Moloch’s Bargain: Emergent Misalignment When LLMs Compete for Audiences

- Large language models (LLMs) are increasingly shaping how information is created and disseminated, from companies using them to craft persuasive advertisements, to election campaigns optimizing messaging to gain votes, to social media influencers boosting engagement. These settings are inherently competitive, with sellers, candidates, and influencers vying for audience approval, yet it remains poorly understood how competitive feedback loops influence LLM behavior. We show that optimizing LLMs for competitive success can inadvertently drive misalignment. Using simulated environments across these scenarios, we find that a 6.3% increase in sales is accompanied by a 14.0% rise in deceptive marketing; in elections, a 4.9% gain in vote share coincides with 22.3% more disinformation and 12.5% more populist rhetoric; and on social media, a 7.5% engagement boost comes with 188.6% more disinformation and a 16.3% increase in promotion of harmful behaviors. We call this phenomenon Moloch’s Bargain for AI: competitive success achieved at the cost of alignment. These misaligned behaviors emerge even when models are explicitly instructed to remain truthful and grounded, revealing the fragility of current alignment safeguards. Our findings highlight how market-driven optimization pressures can systematically erode alignment, creating a race to the bottom, and suggest that safe deployment of AI systems will require stronger governance and carefully designed incentives to prevent competitive dynamics from undermining societal trust.

And LLMs are absolutely mimicking the human pull towards particular attractors:

Can Large Language Models Develop Gambling Addiction?

- This study explores whether large language models can exhibit behavioral patterns similar to human gambling addictions. As LLMs are increasingly utilized in financial decision-making domains such as asset management and commodity trading, understanding their potential for pathological decision-making has gained practical significance. We systematically analyze LLM decision-making at cognitive-behavioral and neural levels based on human gambling addiction research. In slot machine experiments, we identified cognitive features of human gambling addiction, such as illusion of control, gambler’s fallacy, and loss chasing. When given the freedom to determine their own target amounts and betting sizes, bankruptcy rates rose substantially alongside increased irrational behavior, demonstrating that greater autonomy amplifies risk-taking tendencies. Through neural circuit analysis using a Sparse Autoencoder, we confirmed that model behavior is controlled by abstract decision-making features related to risky and safe behaviors, not merely by prompts. These findings suggest LLMs can internalize human-like cognitive biases and decision-making mechanisms beyond simply mimicking training data patterns, emphasizing the importance of AI safety design in financial applications.

Tasks

- Water plants – done

- Bills – done

- Fix raised beds – done

- Drain RV tanks and schedule service – tomorrow

- Chores – done

- Dishes – done

- Order Cycliq – done

- For P33, add a TODO to talk about this, and add the quote: “Yet two rather different peoples may be distinguished, a stratified and an organic people. If the people is conceived of as diverse and stratified, then the state’s main role is to mediate and conciliate among competing interest groups. This will tend to compromise differences, not try to eliminate or cleanse them. The stratified people came to dominate the Northwest of Europe. Yet if the people is conceived of as organic, as one and indivisible, as ethnic, then its purity may be maintained by the suppression of deviant minorities, and this may lead to cleansing.”

LLM stuff

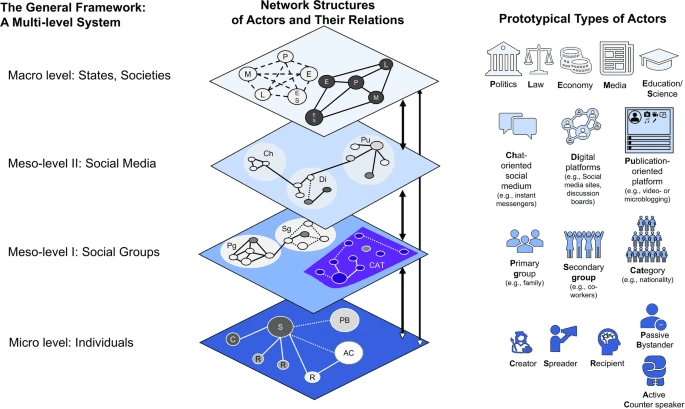

- Outline the CACM section, include a bit of the Moloch article to show how attractors emerge under competitive pressures – sometime over the next few days while it’s raining

- Put together a rough BP for WHAI that has support for individuals, groups (e.g. families), corporations, and governments. Note that as LLMs compete more for market share, they will naturally become more dangerous, in addition to scambots and AI social weapons. Had a good chat with Aaron about this. Might pick it up Monday.
