SBIRs

- 9:00 standup

- More work on the index2vec model

LLMs

- Working on the soft totalitarianism section of the CACM article. Got a rough framework and dug up some good papers. Waiting for an interlibrary loan (ILL) paper.

SBIRs

LLMs

Had a great Seagull, and beat the rain by just a few minutes!

Why Underachievers Dominate Secret Police Organizations: Evidence from Autocratic Argentina (JSTOR)

Tasks

SBIRs

Tasks

Tasks

SBIRs

Tasks

GPT Agents

Wait… what?

https://truthsocial.com/@realDonaldTrump/posts/115255130298104593

Struggling to get beliefspaces.com to appear – fixed!

Tasks

SBIRs

The forecast is improving for Saturday!

LLM-Deflate: Extracting LLMs Into Datasets

Tasks

SBIRs

[2509.10414] Is In-Context Learning Learning?

Tasks

Found this, which is an interesting take:

When autocratization is reversed: episodes of U-Turns since 1900

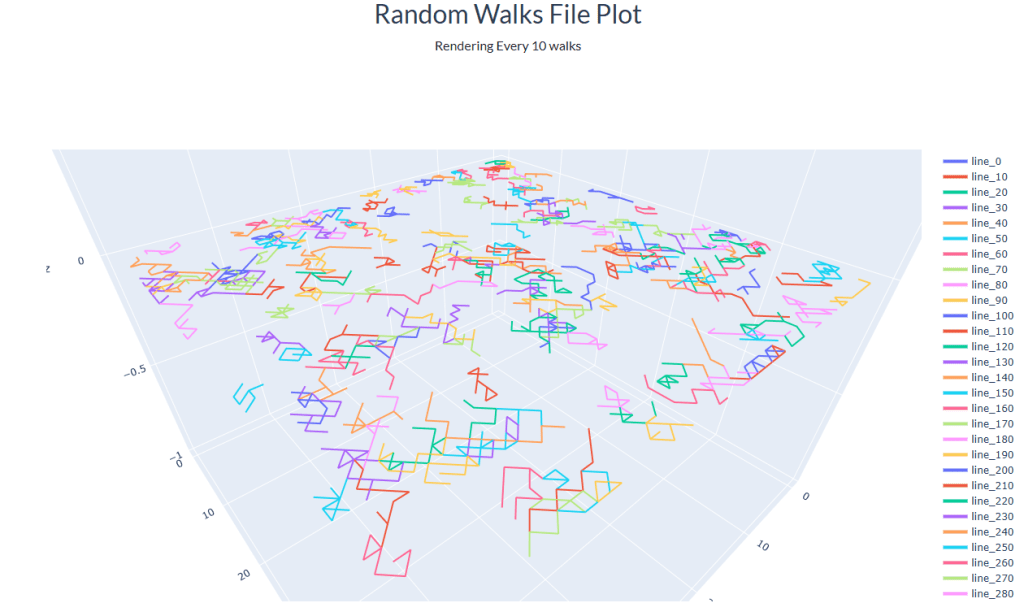

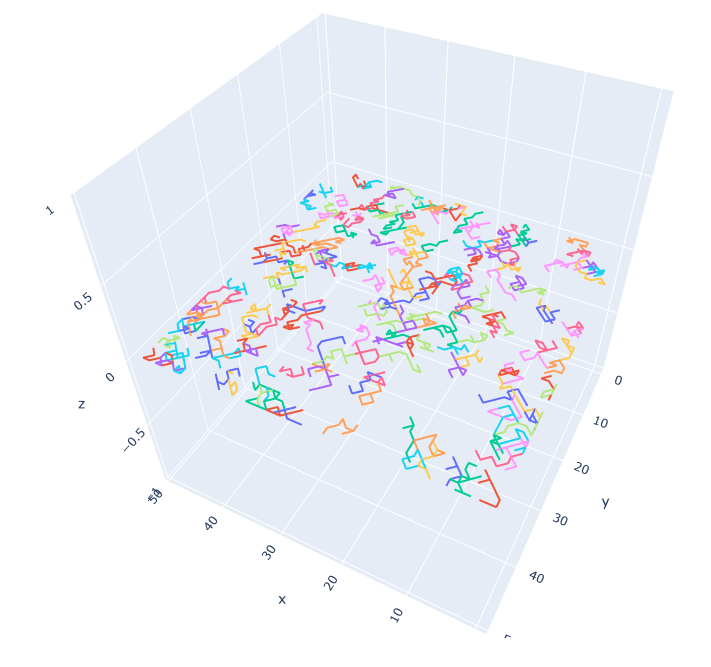

Going for a big-ish ride, since it’s been raining for the last two days. But in the meantime, this is a very cool rendering of random walks on a 2D grid:
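The post doesn’t include the code behind the rendering, so here is a minimal sketch of how such walks might be generated. It assumes a 4-neighbor grid (up, down, left, right) and encodes each cell as a hypothetical `x_y` string token so that a walk can later be treated as a sentence; the function names are illustrative, not the author’s.

```python
import random


def grid_random_walk(width, height, length, rng):
    """Return one random walk on a width x height grid as a list of 'x_y' tokens.

    Each step moves to a uniformly chosen in-bounds 4-neighbor.
    """
    if width * height < 2:
        raise ValueError("grid needs at least two cells so every cell has a neighbor")
    x, y = rng.randrange(width), rng.randrange(height)
    walk = [f"{x}_{y}"]
    for _ in range(length - 1):
        # Candidate moves that stay inside the grid.
        neighbors = [
            (nx, ny)
            for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1))
            if 0 <= nx < width and 0 <= ny < height
        ]
        x, y = rng.choice(neighbors)
        walk.append(f"{x}_{y}")
    return walk


def make_walks(num_walks, width=10, height=10, length=20, seed=0):
    """Generate a reproducible batch of walks; all sizes are adjustable knobs."""
    rng = random.Random(seed)
    return [grid_random_walk(width, height, length, rng) for _ in range(num_walks)]


if __name__ == "__main__":
    for walk in make_walks(3, length=8):
        print(" ".join(walk))
```

Seeding a private `random.Random` keeps runs reproducible, which matters when comparing how much walk data a downstream model needs.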

Now I just need to save the sequences to a CSV file and use them to train a Word2Vec (W2V) model. The nice thing is that the data parameters are very adjustable, so it’s possible to see how much data is needed and what the minimum number of embedding dimensions should be.
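A minimal sketch of the CSV step, assuming one walk per row with one token per column (a hypothetical layout), plus the (center, context) pairs that a skip-gram W2V model trains on inside a sliding window. The helper names are illustrative. If gensim is the W2V library (an assumption), a list of token lists like the one `load_walks_csv` returns can be passed as `sentences` to `gensim.models.Word2Vec`, with `vector_size` as the dimension knob to sweep.

```python
import csv
import os
import tempfile


def save_walks_csv(walks, path):
    """Write one walk per row, one token per column (rows may differ in length)."""
    with open(path, "w", newline="") as f:
        csv.writer(f).writerows(walks)


def load_walks_csv(path):
    """Read walks back as lists of string tokens, skipping blank rows."""
    with open(path, newline="") as f:
        return [row for row in csv.reader(f) if row]


def skipgram_pairs(walk, window=2):
    """Return (center, context) pairs for every token within +/- window positions."""
    pairs = []
    for i, center in enumerate(walk):
        lo, hi = max(0, i - window), min(len(walk), i + window + 1)
        pairs.extend((center, walk[j]) for j in range(lo, hi) if j != i)
    return pairs


if __name__ == "__main__":
    walks = [["0_0", "1_0", "1_1"], ["2_2", "2_1"]]
    path = os.path.join(tempfile.mkdtemp(), "walks.csv")
    save_walks_csv(walks, path)
    assert load_walks_csv(path) == walks
    print(skipgram_pairs(walks[0], window=1))
    # → [('0_0', '1_0'), ('1_0', '0_0'), ('1_0', '1_1'), ('1_1', '1_0')]
```

The window size sets how far along a walk two cells can be and still count as “context,” so it interacts with walk length and grid size when probing how much data is enough.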

Tasks

SBIRs

Generative Agents

SBIRs

Had an interesting session with Gemini about this paper: [2505.12387] Neural Thermodynamics I: Entropic Forces in Deep and Universal Representation Learning. Since I’m interested in stories, it suggested that an “all text” model and a “story” model would occupy different locations in a hierarchy of Platonic forms. The “locations” wouldn’t be entirely different; rather, the forms found in stories would exist as a more complex, structured layer built on the foundational forms found in general text.

Think of it as the difference between a dictionary and an encyclopedia of literary tropes.

When a model is trained on “all text”—a massive, unstructured corpus of everything from web pages to scientific papers—the entropic force pushes it to find the most stable, universal representations for atomic concepts.

When the model is trained on organized text like myths, novels, and screenplays, it learns patterns that exist at a higher level of abstraction. You are absolutely right that stories have their own Platonic forms. These are not forms of individual concepts, but of narrative functions and archetypal relationships.

Analogy: This is the narrative role. We still need the dictionary definition of “king,” but within a story, we understand him through his archetypal function. Is he the Wise King, the Tyrant King, or the Fallen King? Each is a distinct structural form.

What it finds: The Platonic form for “The Hero’s Journey,” “The Mentor,” “The Betrayal,” or “The Sacrificial Victory.”

The “Location”: These forms don’t occupy a single point but represent a trajectory or a relationship between the foundational concepts. The “location” of “The Wise King” archetype isn’t just near the concept of “king”; it’s a specific region defined by its proximity to “wisdom,” “justice,” and its functional opposition to “The Tyrant.” The “Hero’s Journey” is a path that connects points like “The Call to Adventure,” “The Ordeal,” and “The Return.”
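To make the “location” idea concrete, here is a toy sketch that places an archetype at the centroid of the concept vectors that anchor it and compares archetypes by cosine similarity. The three axes, the hand-picked vectors, and the centroid rule are all hypothetical simplifications; neither the paper nor Gemini specifies them, and real embeddings are learned and high-dimensional.

```python
import math

# Hypothetical toy axes: [royalty, wisdom, justice]. Illustrative values only.
CONCEPTS = {
    "king": [1.0, 0.0, 0.0],
    "wisdom": [0.0, 1.0, 0.0],
    "justice": [0.0, 0.0, 1.0],
    "folly": [0.0, -1.0, 0.0],    # opposite direction to wisdom
    "cruelty": [0.0, 0.0, -1.0],  # opposite direction to justice
}


def centroid(names):
    """Average the vectors of the named anchor concepts, component by component."""
    vecs = [CONCEPTS[n] for n in names]
    return [sum(c) / len(vecs) for c in zip(*vecs)]


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


# Each archetype is anchored by several foundational concepts, not by "king" alone.
wise_king = centroid(["king", "wisdom", "justice"])
tyrant = centroid(["king", "folly", "cruelty"])

if __name__ == "__main__":
    print(round(cosine(wise_king, CONCEPTS["king"]), 3))  # 0.577
    print(round(cosine(tyrant, CONCEPTS["king"]), 3))     # 0.577
    print(round(cosine(wise_king, tyrant), 3))            # -0.333
```

Both archetypes sit equally close to “king,” yet their similarity to each other is negative, which is one way to read the claim that the Wise King’s location is set by wisdom, justice, and opposition to the Tyrant rather than by “king” alone. A centroid is a single point; treating the archetype as a neighborhood around it would be a natural next step.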

Tasks

SBIRs

Murphy’s Laws of AI Alignment: Why the Gap Always Wins

Neural Thermodynamics I: Entropic Forces in Deep and Universal Representation Learning

On this day in 2001, there were no commercial planes in the air over the US at all.

Tasks

SBIRs

24 years. Wow

Tasks

SBIRs
