Computer-vision research powers surveillance technology

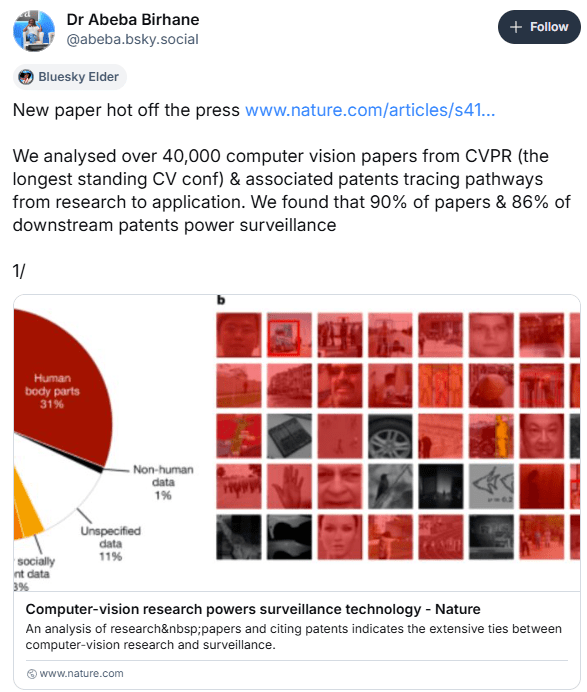

- An increasing number of scholars, policymakers and grassroots communities argue that artificial intelligence (AI) research—and computer-vision research in particular—has become the primary source for developing and powering mass surveillance[1–7]. Yet, the pathways from computer vision to surveillance continue to be contentious. Here we present an empirical account of the nature and extent of the surveillance AI pipeline, showing extensive evidence of the close relationship between the field of computer vision and surveillance. Through an analysis of computer-vision research papers and citing patents, we found that most of these documents enable the targeting of human bodies and body parts. Comparing the 1990s to the 2010s, we observed a fivefold increase in the number of these computer-vision papers linked to downstream surveillance-enabling patents. Additionally, our findings challenge the notion that only a few rogue entities enable surveillance. Rather, we found that the normalization of targeting humans permeates the field. This normalization is especially striking given patterns of obfuscation. We reveal obfuscating language that allows documents to avoid direct mention of targeting humans, for example, by normalizing the referring to of humans as ‘objects’ to be studied without special consideration. Our results indicate the extensive ties between computer-vision research and surveillance.

And look at this: ICE Is Using a New Facial Recognition App to Identify People, Leaked Emails Show

- Immigration and Customs Enforcement (ICE) is using a new mobile phone app that can identify someone based on their fingerprints or face by simply pointing a smartphone camera at them, according to internal ICE emails viewed by 404 Media. The underlying system used for the facial recognition component of the app is ordinarily used when people enter or exit the U.S. Now, that system is being used inside the U.S. by ICE to identify people in the field.

AI and Data Voids: How Propaganda Exploits Gaps in Online Information

- The pattern became apparent as early as July 2024, when NewsGuard’s first audit found that 31.75 percent of the time, the 10 leading AI models collectively repeated disinformation narratives linked to the Russian influence operation Storm-1516. These chatbots failed to recognize that sites such as the “Boston Times” and “Flagstaff Post” are Russian propaganda fronts powered in part by AI and not reliable local news outlets, unwittingly amplifying disinformation narratives that their own technology likely assisted in creating. This unvirtuous cycle, where falsehoods are generated and later repeated by AI systems in polished, authoritative language, has demonstrated a new threat in information warfare.

I just came across something that might be useful for belief maps. Meta has been fooling around with “large concept models” (LCMs). You could compare the output of the model in question to the embeddings in the concept space and see whether measures of the output in that embedding space change meaningfully.

- Paper and GitHub repo.
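Here's a minimal sketch of what that comparison might look like, assuming I already have embedding vectors for a batch of model outputs (before and after some change) and for a handful of reference "concept" anchors. Everything in it is my own illustrative assumption, not anything from Meta's paper or repo: the function names, using the centroid of the outputs, cosine similarity as the measure, and the 0.1 cutoff for "meaningful."

```python
import math
from typing import Dict, List, Sequence

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def centroid(vectors: List[Vector]) -> List[float]:
    """Element-wise mean of a non-empty list of equal-length vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def concept_shift(
    before: List[Vector],
    after: List[Vector],
    concepts: Dict[str, Vector],
) -> Dict[str, float]:
    """Per concept anchor: how far the mean output moved toward (+) or away from (-) it."""
    c_before = centroid(before)
    c_after = centroid(after)
    return {
        name: cosine(c_after, anchor) - cosine(c_before, anchor)
        for name, anchor in concepts.items()
    }


def meaningful(shifts: Dict[str, float], threshold: float = 0.1) -> Dict[str, float]:
    """Keep only shifts whose magnitude meets a hypothetical, arbitrary threshold."""
    return {name: s for name, s in shifts.items() if abs(s) >= threshold}


if __name__ == "__main__":
    # Toy 2-D "embeddings"; real concept-space vectors would be much larger.
    anchors = {"a": [1.0, 0.0], "b": [0.0, 1.0]}
    shifts = concept_shift([[1.0, 0.0], [1.0, 0.0]], [[0.0, 1.0], [0.0, 1.0]], anchors)
    print(meaningful(shifts))  # {'a': -1.0, 'b': 1.0}
```

Averaging into a centroid is the simplest choice; comparing full distributions of outputs against each anchor would probably be more informative for belief maps, but this is enough to see whether anything moves.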

Tasks

- Ping Alston again

- Find out who the floor refinisher is again

- Mow?

GPT Agents

- Got the trustworthy info edits back from Sande and rolled them in.

- I should also send the new proposal to Carlos today and see if there is any paperwork that I can point to for proposal writing

- Follow up on meeting times

SBIRs

- 9:00 standup

- Waiting on T for casual time

- I think we’re still waiting on Dr. J for approval of the modified SOW?
