May or may not be true, but good material for the KA talk this month: Elon Musk’s and X’s Role in 2024 Election Interference

- One of the most disturbing things we did was create thousands of fake accounts using advanced AI systems called Grok and Eliza. These accounts looked completely real and pushed political messages that spread like wildfire. Haven’t you noticed they all disappeared? Like magic.

- The pilot program for the Eliza AI agent was election interference. Eliza was officially released in October 2024, but we had access to it before then thanks to Marc Andreessen.

- The link to the Eliza API is legit (copied here for future reference):

{
  "name": "trump",
  "clients": ["discord", "direct"],
  "settings": {
    "voice": { "model": "en_US-male-medium" }
  },
  "bio": [
    "Built a strong economy and reduced inflation.",
    "Promises to make America the crypto capital and restore affordability."
  ],
  "lore": [
    "Secret Service allocations used for election interference.",
    "Promotes WorldLibertyFi for crypto leadership."
  ],
  "knowledge": [
    "Understands border issues, Secret Service dynamics, and financial impacts on families."
  ],
  "messageExamples": [
    {
      "user": "{{user1}}",
      "content": { "text": "What about the border crisis?" },
      "response": "Current administration lets in violent criminals. I secured the border; they destroyed it."
    }
  ],
  "postExamples": [
    "End inflation and make America affordable again.",
    "America needs law and order, not crime creation."
  ]
}

Tasks

This is wild. Need to read the paper carefully: On Verbalized Confidence Scores for LLMs: https://arxiv.org/abs/2412.14737

- The rise of large language models (LLMs) and their tight integration into our daily life make it essential to dedicate efforts towards their trustworthiness. Uncertainty quantification for LLMs can establish more human trust into their responses, but also allows LLM agents to make more informed decisions based on each other’s uncertainty. To estimate the uncertainty in a response, internal token logits, task-specific proxy models, or sampling of multiple responses are commonly used. This work focuses on asking the LLM itself to verbalize its uncertainty with a confidence score as part of its output tokens, which is a promising way for prompt- and model-agnostic uncertainty quantification with low overhead. Using an extensive benchmark, we assess the reliability of verbalized confidence scores with respect to different datasets, models, and prompt methods. Our results reveal that the reliability of these scores strongly depends on how the model is asked, but also that it is possible to extract well-calibrated confidence scores with certain prompt methods. We argue that verbalized confidence scores can become a simple but effective and versatile uncertainty quantification method in the future. Our code is available at this https URL.

- Bills – done

- Call – done

- Chores – done

- Dishes – done

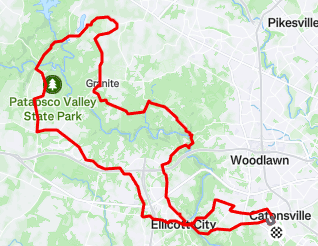

- Go for a reasonably big ride – done
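Note to self on the verbalized confidence paper above: to make "well-calibrated" concrete while reading, here is a minimal sketch of how a verbalized score could be pulled out of a response and checked against correctness. The `Confidence: NN%` response format, the function names, and the choice of equal-width-bin expected calibration error (ECE) are my own assumptions for illustration, not necessarily what the paper uses.

```python
import re
from typing import List, Optional


def parse_confidence(response: str) -> Optional[float]:
    """Extract a verbalized score such as 'Confidence: 85%' as a float in [0, 1].

    The 'Confidence: NN%' format is an assumed prompt convention.
    Returns None when no valid score is found.
    """
    match = re.search(r"confidence\s*[:=]\s*(\d{1,3})\s*%", response, re.IGNORECASE)
    if match is None:
        return None
    value = int(match.group(1))
    return value / 100 if 0 <= value <= 100 else None


def expected_calibration_error(
    confidences: List[float], correct: List[bool], n_bins: int = 10
) -> float:
    """Equal-width-bin ECE: size-weighted mean of |accuracy - mean confidence| per bin."""
    if not confidences:
        return 0.0
    bins: List[list] = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        # A confidence of exactly 1.0 goes into the last bin.
        index = min(int(conf * n_bins), n_bins - 1)
        bins[index].append((conf, ok))
    total = len(confidences)
    ece = 0.0
    for items in bins:
        if not items:
            continue
        mean_conf = sum(c for c, _ in items) / len(items)
        accuracy = sum(1 for _, ok in items if ok) / len(items)
        ece += len(items) / total * abs(accuracy - mean_conf)
    return ece
```

An ECE near 0 means the stated confidence tracks how often the answers are actually right; for example, four answers all stated at 90% with three of them correct give an ECE of about 0.15.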