Winter solstice! Tomorrow will be one second longer!

Scaling test-time compute – a Hugging Face Space by HuggingFaceH4

- Over the last few years, the scaling of train-time compute has dominated the progress of large language models (LLMs). Although this paradigm has proven to be remarkably effective, the resources needed to pretrain ever larger models are becoming prohibitively expensive, with billion-dollar clusters already on the horizon. This trend has sparked significant interest in a complementary approach: test-time compute scaling. Rather than relying on ever-larger pretraining budgets, test-time methods use dynamic inference strategies that allow models to “think longer” on harder problems. A prominent example is OpenAI’s o1 model, which shows consistent improvement on difficult math problems as one increases the amount of test-time compute:

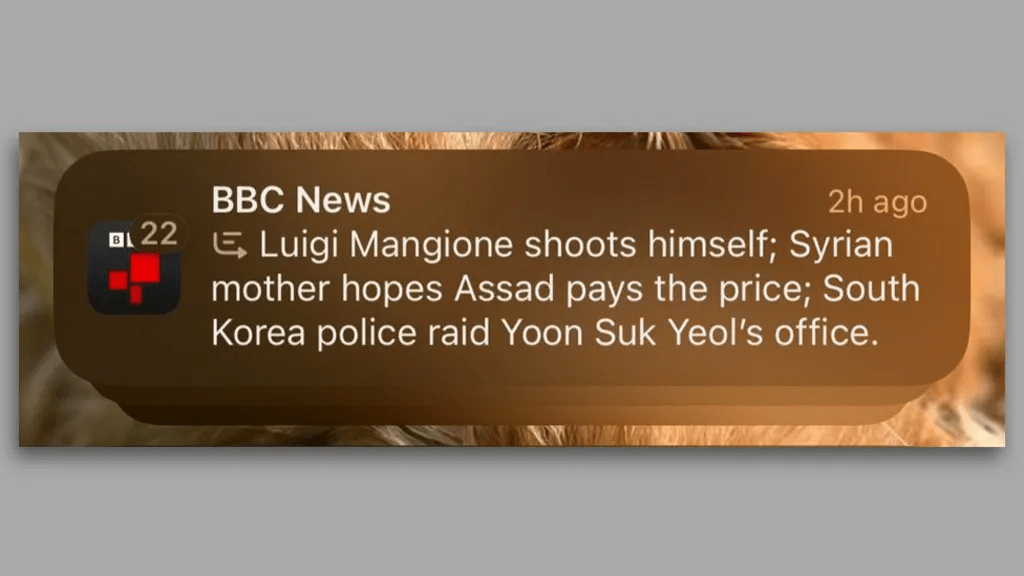

Another crazy AI slop thing: BBC complains to Apple over misleading shooting headline

- Apple Intelligence, launched in the UK earlier this week, uses artificial intelligence (AI) to summarise and group together notifications. This week, the AI-powered summary falsely made it appear BBC News had published an article claiming Luigi Mangione, the man arrested following the murder of healthcare insurance CEO Brian Thompson in New York, had shot himself. He has not.

The unbearable slowness of being: Why do we live at 10 bits/s? (ArXiv link)

- This article is about the neural conundrum behind the slowness of human behavior. The information throughput of a human being is about 10 bits/s. In comparison, our sensory systems gather data at ∼1,000,000,000 bits/s. The stark contrast between these numbers remains unexplained and touches on fundamental aspects of brain function: what neural substrate sets this speed limit on the pace of our existence? Why does the brain need billions of neurons to process 10 bits/s? Why can we only think about one thing at a time? The brain seems to operate in two distinct modes: the “outer” brain handles fast high-dimensional sensory and motor signals, whereas the “inner” brain processes the reduced few bits needed to control behavior. Plausible explanations exist for the large neuron numbers in the outer brain, but not for the inner brain, and we propose new research directions to remedy this.
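Using only the two figures quoted in the abstract, the size of that gap works out to roughly eight orders of magnitude:

$$
\frac{\sim 10^{9}\ \text{bits/s (sensory input)}}{\sim 10\ \text{bits/s (behavioral throughput)}} \approx 10^{8}
$$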

GPT Agents

- Worked some more on the “for profit” diagram. Need to start on the “egalitarian” diagram.

Tasks

- Drained the washing machine, so hopefully that will help. On the ride today, Ross suggested that I put the machines in the garage. "That's silly," I thought. "The garage is full of bikes and stuff from the basement!"

- But bikes weigh less than washing machines and are far less likely to mess up a floor that may be soft in places. So I brought some of the bikes back into the basement and muscled (washers are heavy!) the washer and dryer into the garage, where they can sit out the cold snap.

- Laundry! Bring soap! Done!