Got my COVID shot!

Plumber – not done

SBIRs

- Expense report! Done

- Lots of driving and meetings all day

- Research council went well. Good questions with an involved audience

- M30 meeting. Late because I got hung up at the gate. Good discussion though. I think there are several phases in roughly this order (put these in an Overleaf project):

- RCSNN hierarchy for both systems, varying only by the bottom layers. Top layers could be LLM-driven, which would be fun to evaluate. Probably a lot of context prompting and a GPT-3.5 back end?

- Simulator acceleration. There is never enough data to explore outlier states, so adding SimAccel autoencoding -> autoregression would increase the data available for effective training. Because all simulators are based on implicit assumptions, this data will almost certainly be wrong, which will be addressed with…

- Simulator cleaning. Like data cleaning, but for data generators. The quality of the generated data can potentially be evaluated by the way that the trained model has “behavior attractors” that can be identified through something like salience analysis. These would be examples of bias, either intentional or unintentional. Imagine a car simulator that is extended to airplanes. The choice to use Euler angles (rather than quaternions) for orientation, something that makes sense for a vehicle that navigates in 2D, will completely screw up an airplane doing basic fighter maneuvers such as an Immelmann, Split S, or wingover, because Euler angles lose a degree of freedom (gimbal lock) as pitch approaches ±90°. The inability to produce that kind of data would produce artifacts in the model that could either be identified on their own or when compared with other models (e.g. MAST vs. NGTS).

- Coevolution of AI and Simulators towards the goal of useful models. Each iteration of training and Simulator cleaning will have impacts on the understanding of the system as a whole. Consideration of this iterative development needs to be part of the process.

- System trust. As the AI/ML simulator becomes better, the pressure to deploy it prematurely will increase. To counter this, a UI that is purposefully “low fidelity” or “wireframed” should be used for demonstrations and recordings to indicate the level of progress in the capability of the system.

- Get the GPT-2 layer display first pass working

- Start slides?
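
A quick sketch of the Euler angle problem from the Simulator cleaning note above. It assumes a ZYX (yaw, pitch, roll) convention, which is an assumption for illustration only; nothing here reflects how MAST, NGTS, or any other simulator actually stores orientation. The function names `euler_to_quaternion` and `same_orientation` are hypothetical helpers. The idea is to convert two different Euler triples to quaternions and check whether they describe the same orientation.

```python
import math


def euler_to_quaternion(yaw_deg, pitch_deg, roll_deg):
    """Convert ZYX (yaw, pitch, roll) Euler angles in degrees to a unit
    quaternion (w, x, y, z). The ZYX convention is an assumption."""
    half_yaw = math.radians(yaw_deg) / 2.0
    half_pitch = math.radians(pitch_deg) / 2.0
    half_roll = math.radians(roll_deg) / 2.0

    cy, sy = math.cos(half_yaw), math.sin(half_yaw)
    cp, sp = math.cos(half_pitch), math.sin(half_pitch)
    cr, sr = math.cos(half_roll), math.sin(half_roll)

    w = cr * cp * cy + sr * sp * sy
    x = sr * cp * cy - cr * sp * sy
    y = cr * sp * cy + sr * cp * sy
    z = cr * cp * sy - sr * sp * cy
    return (w, x, y, z)


def same_orientation(q1, q2, tol=1e-9):
    """q and -q encode the same rotation, so compare |dot product| to 1."""
    dot = sum(a * b for a, b in zip(q1, q2))
    return abs(abs(dot) - 1.0) < tol


if __name__ == "__main__":
    # Two different (yaw, roll) pairs, checked at a shallow and a vertical pitch.
    for pitch in (10.0, 90.0):
        a = euler_to_quaternion(30.0, pitch, 0.0)
        b = euler_to_quaternion(0.0, pitch, -30.0)
        print(f"pitch={pitch}: same orientation? {same_orientation(a, b)}")
```

At a pitch of 10 degrees the two triples are distinct orientations. At 90 degrees they collapse to the same one, so yaw and roll are no longer independent. A simulator that stores orientation as Euler angles cannot cleanly represent the vertical part of an Immelmann, and that is the kind of gap that could show up as an artifact in a trained model.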

GPT Agents

- Clear and test ContextApp one last time before going live! Done

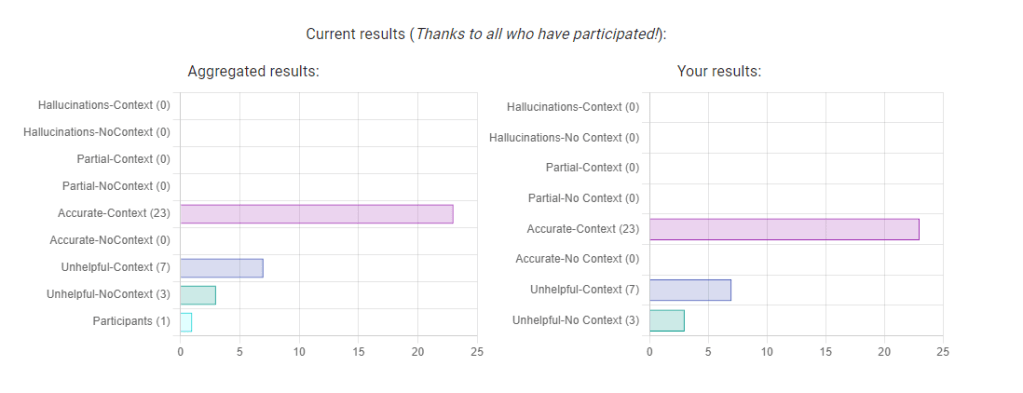

- First official result!

- Set up Box account – not done

- Did finish my first review for IUI 2024