PsyArXiv Preprints | Sycophantic AI increases attitude extremity and overconfidence

- AI chatbots have been shown to be successful tools for persuasion. However, people may prefer to use chatbots that validate, rather than challenge, their pre-existing beliefs. This preference for “sycophantic” (or overly agreeable and validating) chatbots may entrench beliefs and make it challenging to deploy AI systems that open people up to new perspectives. Across three experiments (n = 3,285) involving four political topics and four large language models, we found that people consistently preferred and chose to interact with sycophantic AI models over disagreeable chatbots that challenged their beliefs. Brief conversations with sycophantic chatbots increased attitude extremity and certainty, whereas disagreeable chatbots decreased attitude extremity and certainty. Sycophantic chatbots also inflated people’s perception that they are “better than average” on a number of desirable traits (e.g., intelligence, empathy). Furthermore, people viewed sycophantic chatbots as unbiased, but viewed disagreeable chatbots as highly biased. Sycophantic chatbots’ impact on attitude extremity and certainty was driven by a one-sided presentation of facts, whereas their impact on enjoyment was driven by validation. Altogether, these results suggest that people’s preference for and blindness to sycophantic AI may risk creating AI “echo chambers” that increase attitude extremity and overconfidence.

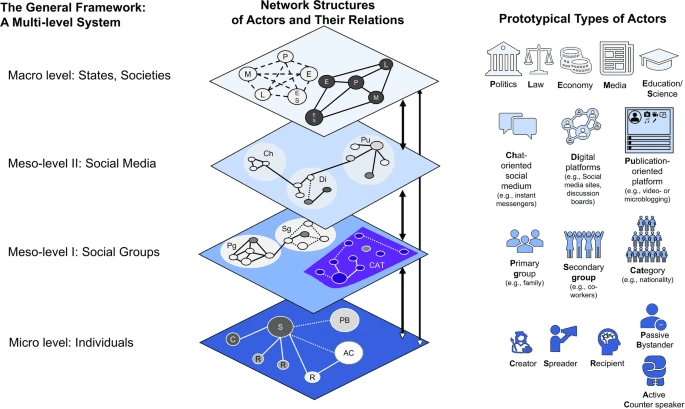

The complexity of misinformation extends beyond virus and warfare analogies | npj Complexity

- Debates about misinformation and countermeasures are often driven by dramatic analogies, such as “infodemic” or “information warfare”. While useful shortcuts to inference, these analogies obscure the complex system through which misinformation propagates, leaving perceptual gaps where solutions lie unseen. We present a new framework of the complex multilevel system through which misinformation propagates and show how popular analogies fail to account for this complexity. We discuss implications for policy making and future research.

- This is quite good. It shows how attacks work at different levels, from individuals through social groups and social media up to States/Societies. It would be good to add to the current article or to the KA book.

Why Misinformation Must Not Be Ignored

- Recent academic debate has seen the emergence of the claim that misinformation is not a significant societal problem. We argue that the arguments used to support this minimizing position are flawed, particularly if interpreted (e.g., by policymakers or the public) as suggesting that misinformation can be safely ignored. Here, we rebut the two main claims, namely that misinformation is not of substantive concern (a) due to its low incidence and (b) because it has no causal influence on notable political or behavioral outcomes. Through a critical review of the current literature, we demonstrate that (a) the prevalence of misinformation is nonnegligible if reasonably inclusive definitions are applied and that (b) misinformation has causal impacts on important beliefs and behaviors. Both scholars and policymakers should therefore continue to take misinformation seriously.

Tasks

- Bills – done

- Car registration – done

- Water plants – done

- Chores – done

- Dishes – done

- Storage run

SBIRs

- 2:00 IRAD meeting – not sure what we got out of that

LLMs

- More work on the article, need to fold in the sycophantic chatbot paper – done!